Posted 08/15/15

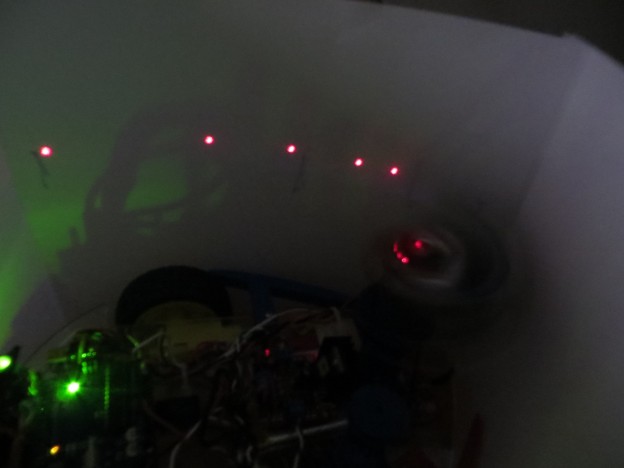

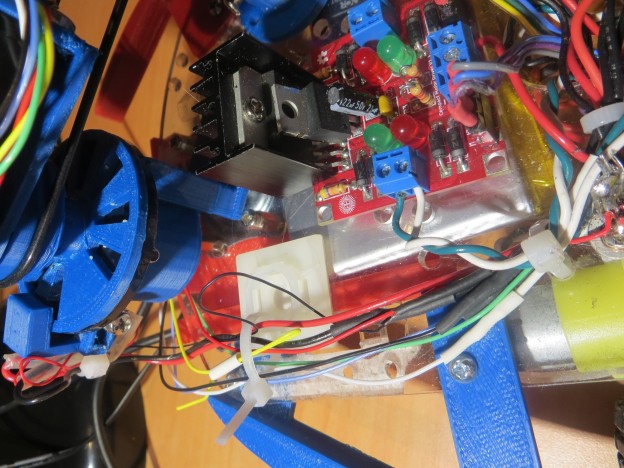

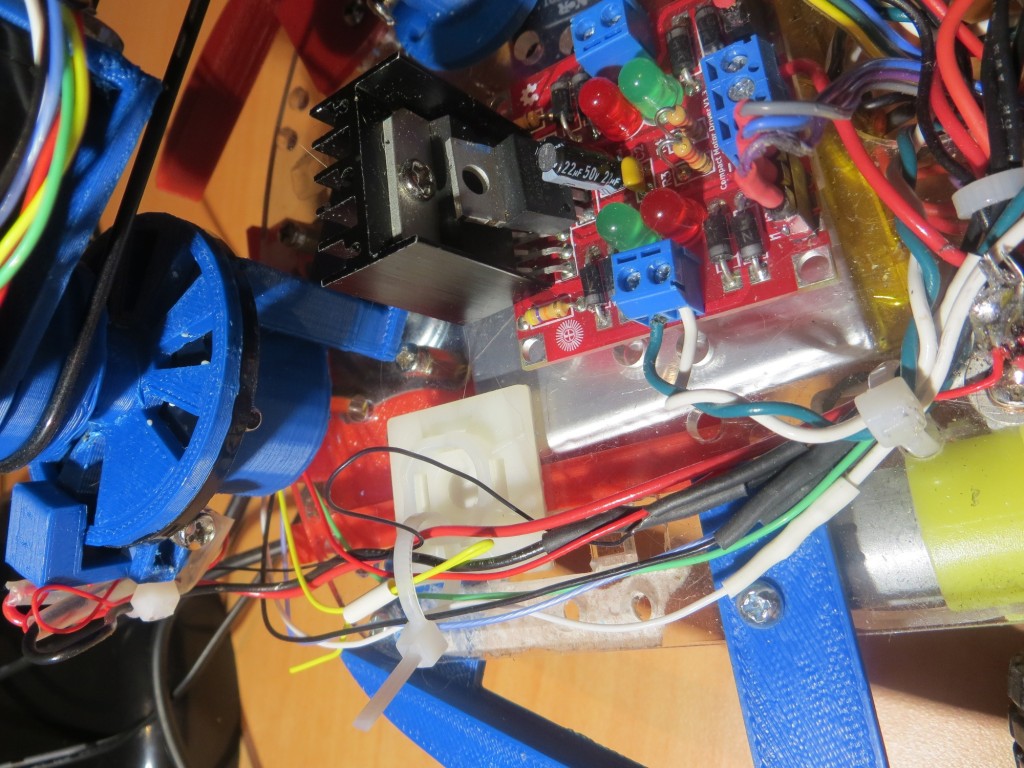

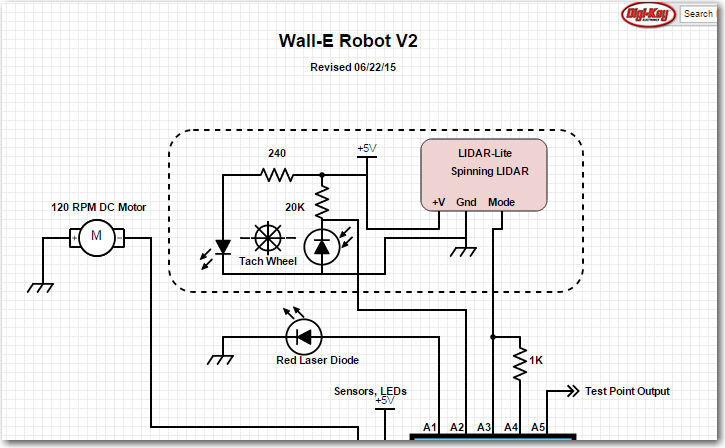

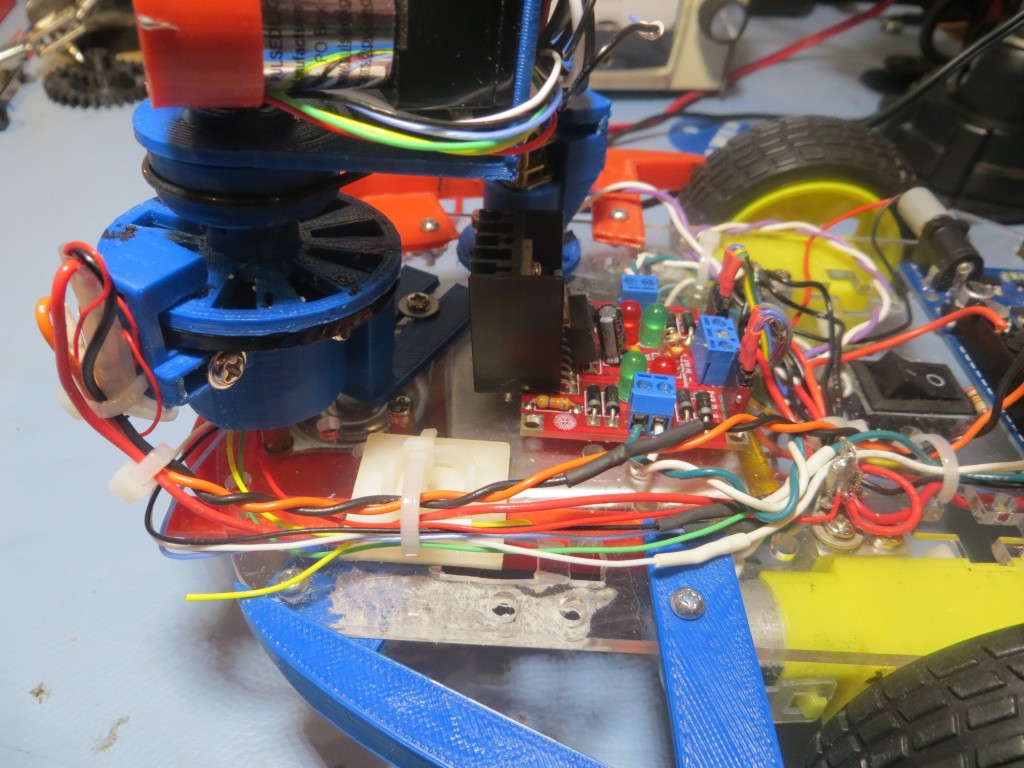

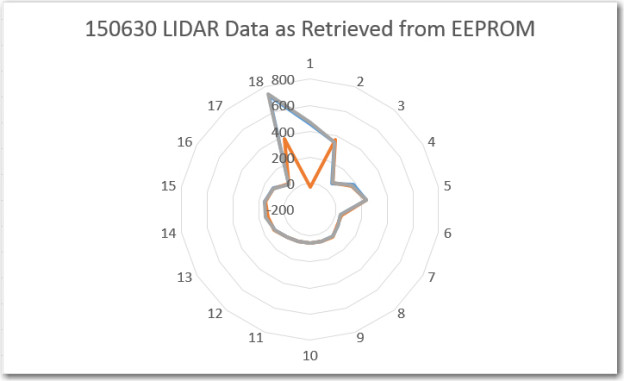

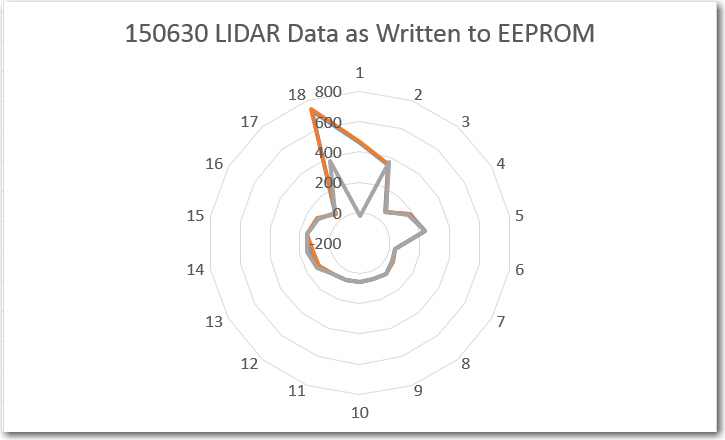

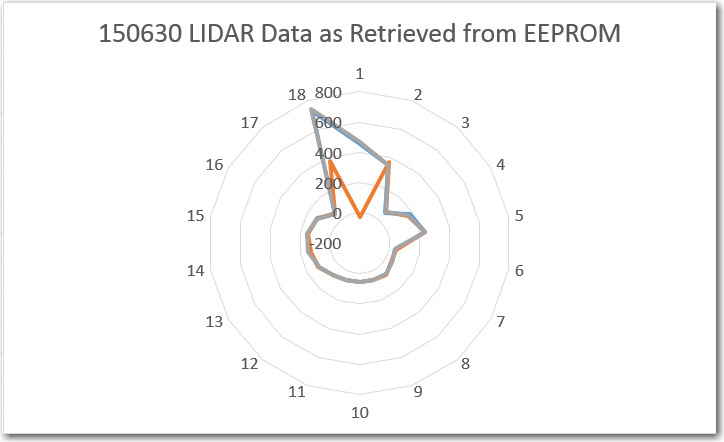

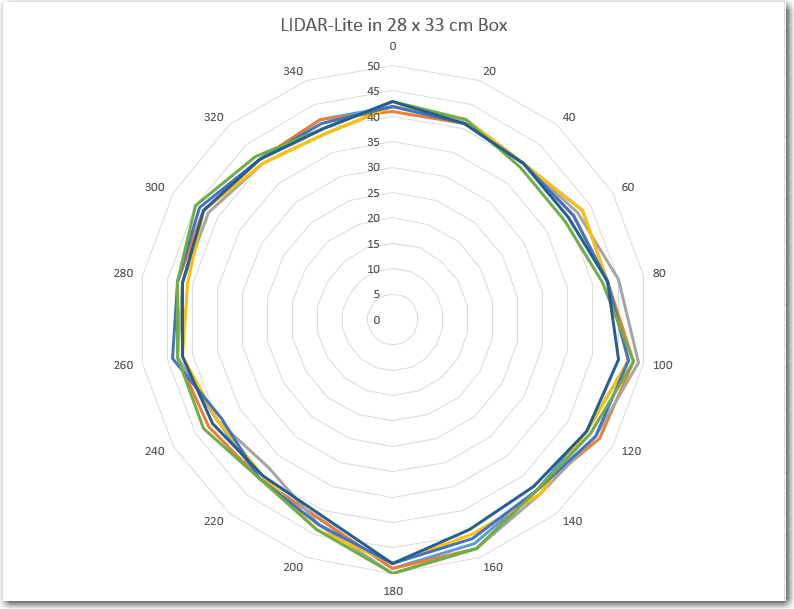

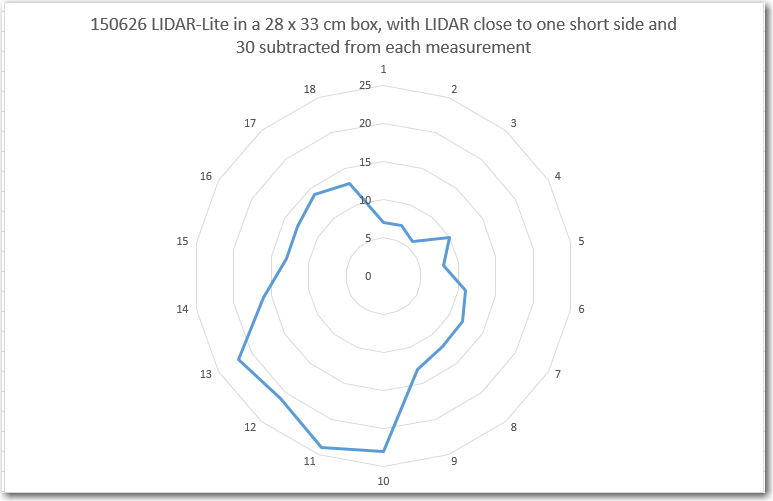

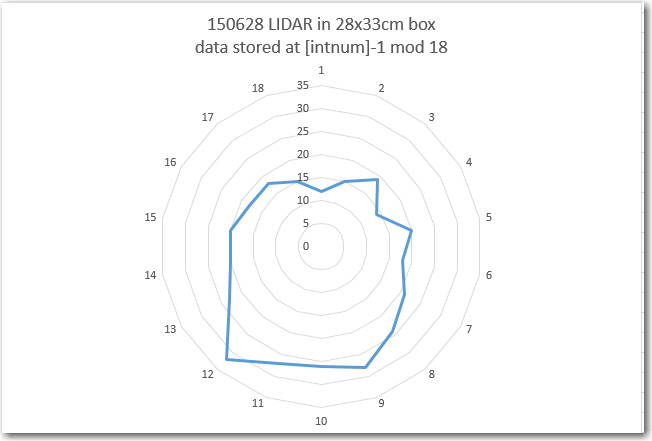

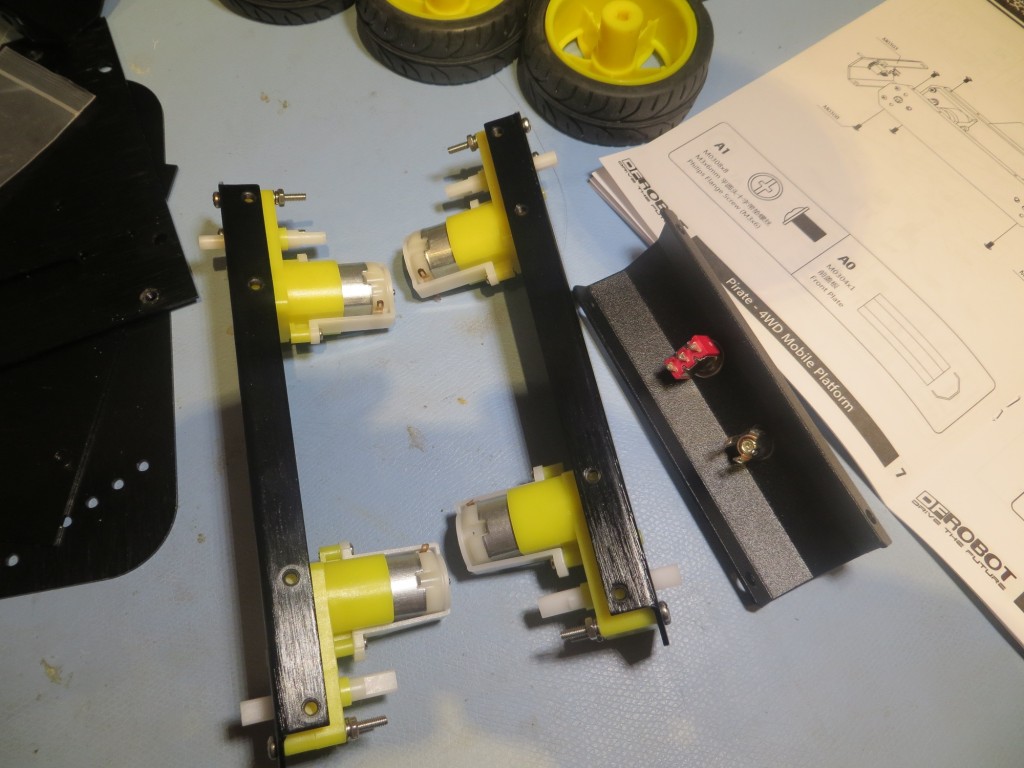

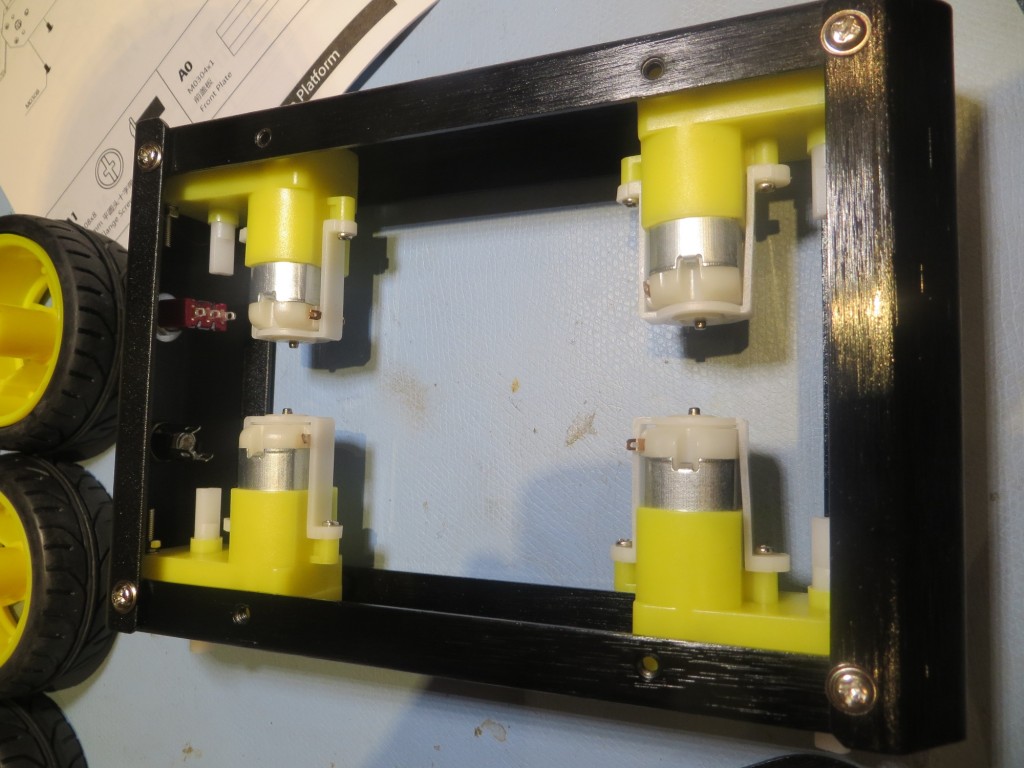

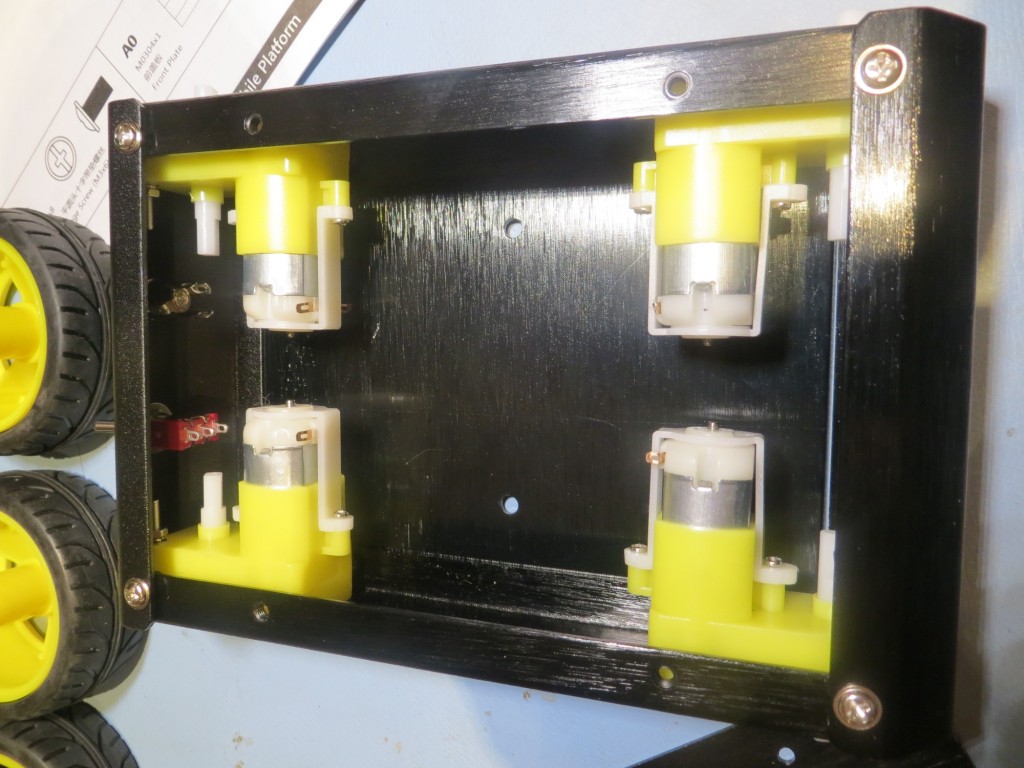

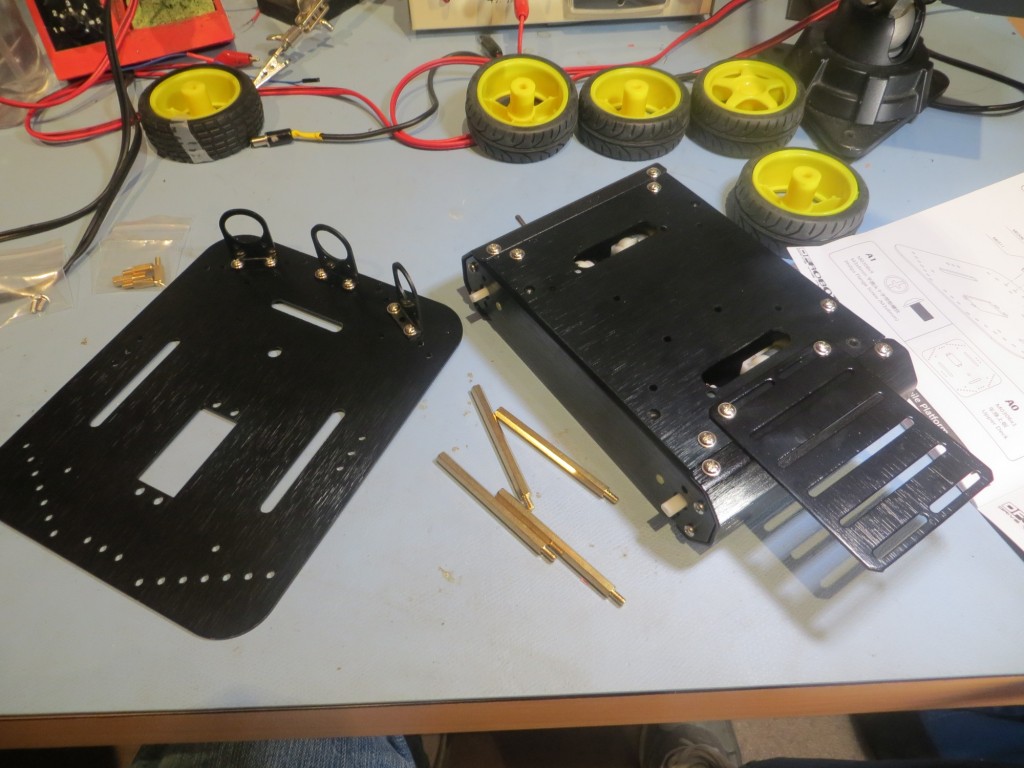

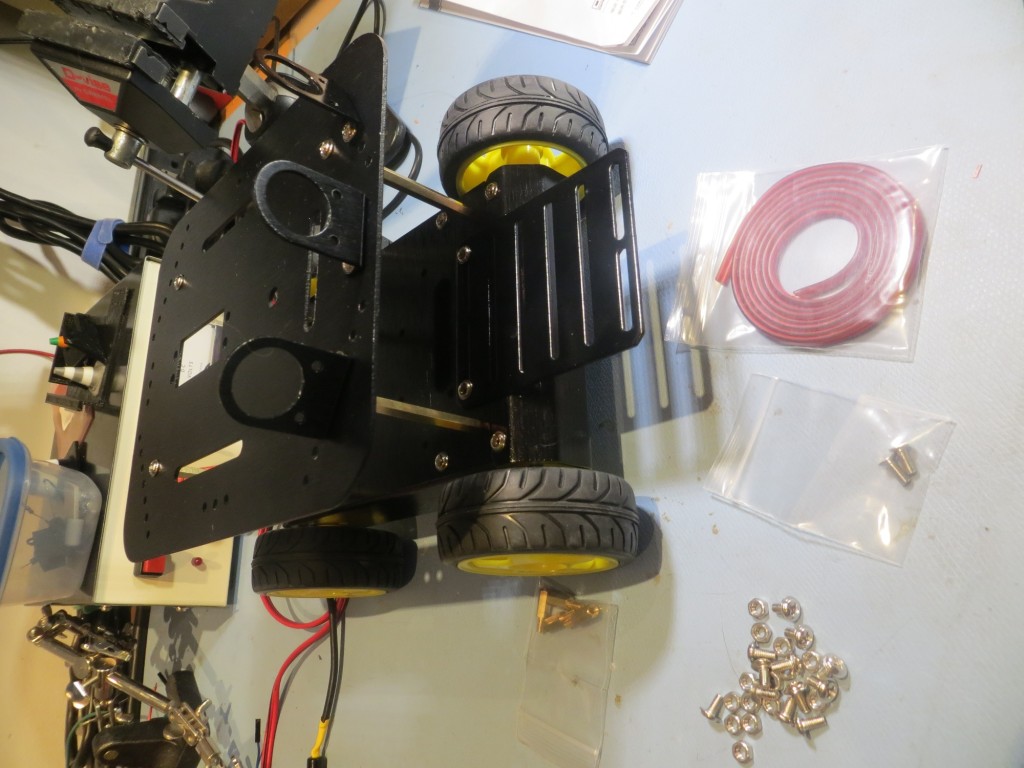

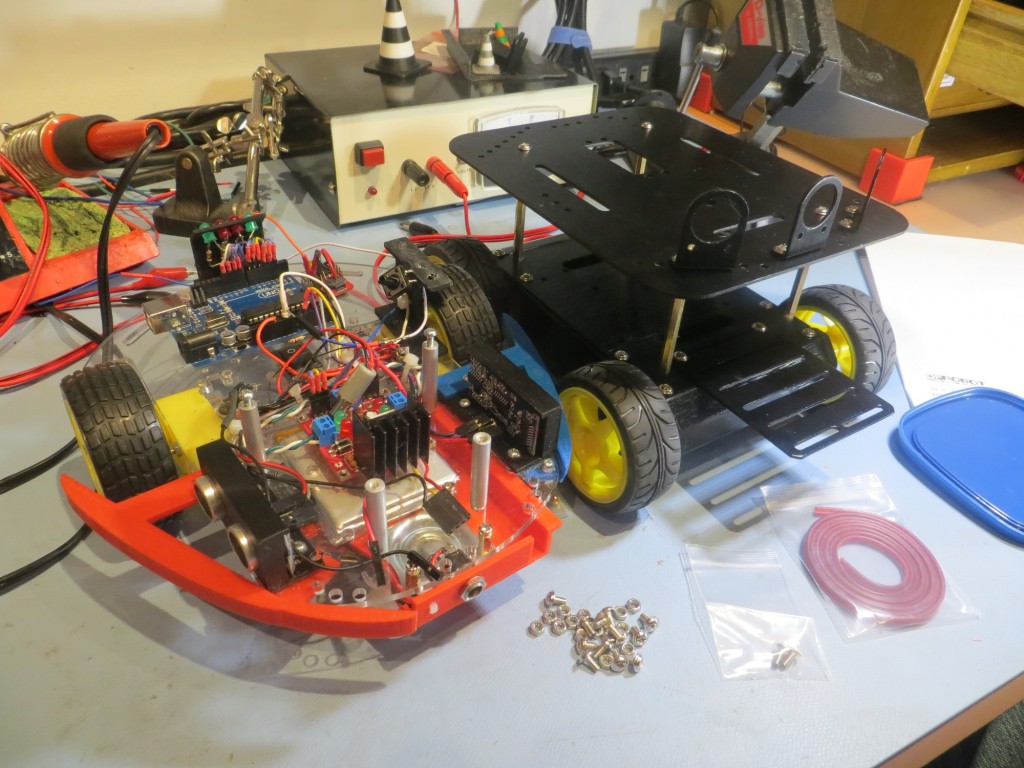

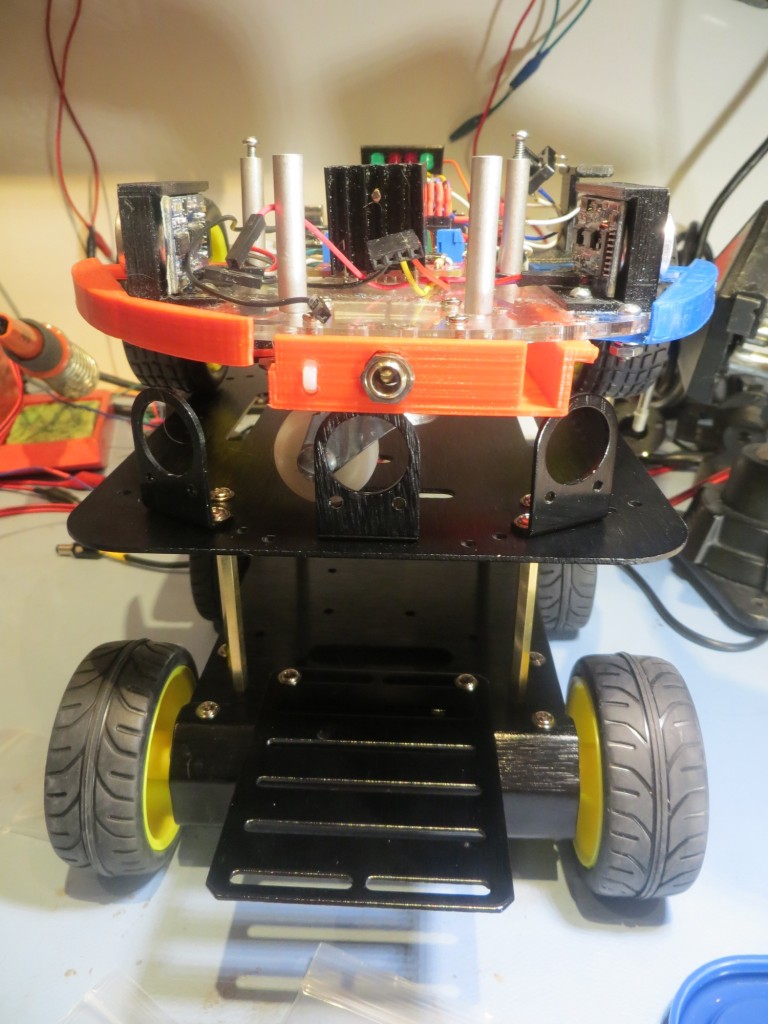

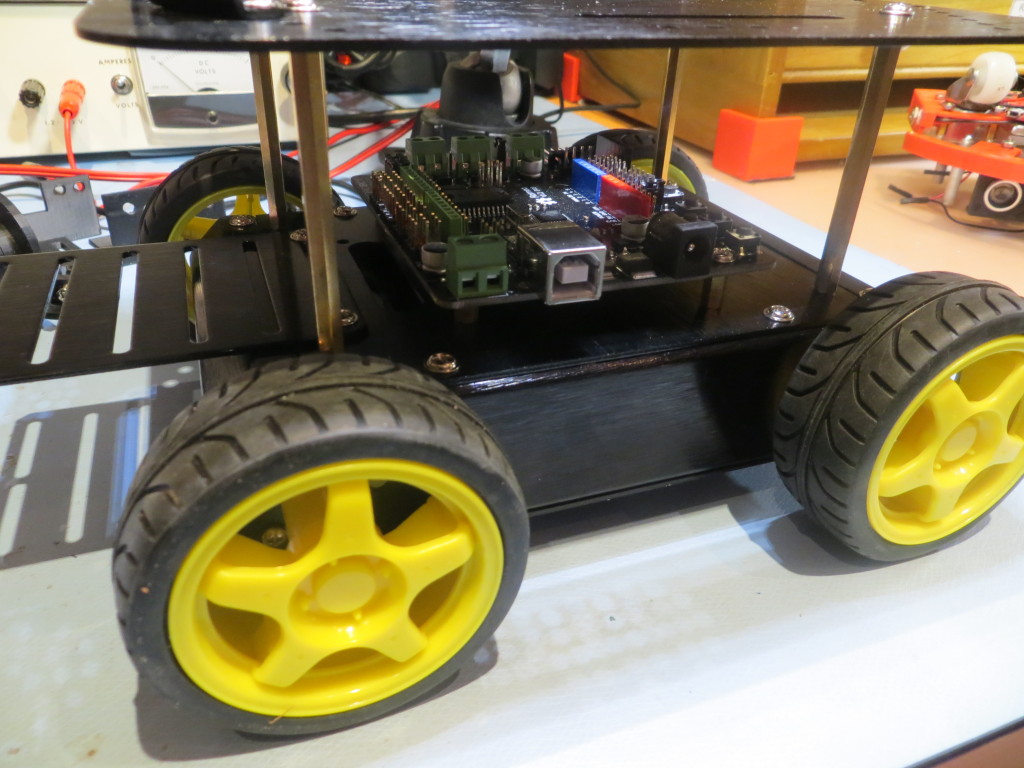

In my ongoing Sisyphean effort to get Wall-E (my wall-following robot) to actually follow walls, I recently replaced the original three (actually four at one point) acoustic distance sensors with a spinning-LIDAR system using the Pulsed Light LIDAR-Lite unit. While this effort was a LOT of fun, and allowed me to also get some good use from my 3D printers, I wasn’t able to reach the goal of improving Wall-E’s wall-following performance. In fact, wall-following performance was much WORSE, not better. As described in previous posts, I finally tracked the problem down to too-slow response from the LIDAR unit: it couldn’t keep up with the interrupts from my 10-tooth tach sensor, which provides the necessary LIDAR pointing-angle information. I tried changing the LIDAR over from MODE control to I2C control (see previous posts), but this led to other issues as described, and although I saw some glimmers of success, I’m still not there.

So, when I noticed that Pulsed Light was advertising their new ‘Blue Label’ (V2) version of their LIDAR-Lite unit, with a nominal 5x response time speedup, I immediately ordered one, thinking that was the solution to all my problems. A 5x speedup should easily be fast enough to enable servicing interrupts at the 25-30 msec time frame required for my spinning LIDAR setup. I would be home FREE! ;-).
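For a rough sense of that timing budget, here is a small sketch of the arithmetic. It assumes one interrupt per tach tooth and evenly spaced teeth; the function names are mine, made up purely for illustration.

```cpp
// Hypothetical helpers for the spinning-LIDAR timing budget.
// Assumption: the tach wheel fires exactly one interrupt per tooth,
// and the teeth are evenly spaced around the wheel.

// Time for one full revolution, given the interval between tooth interrupts.
constexpr double revolutionPeriodMs(int teeth, double toothIntervalMs) {
    return teeth * toothIntervalMs;
}

// Rotation rate in revolutions per minute (60000 msec per minute).
constexpr double revolutionsPerMinute(int teeth, double toothIntervalMs) {
    return 60000.0 / revolutionPeriodMs(teeth, toothIntervalMs);
}

// Angular width of the sector covered between two tooth interrupts.
constexpr double degreesPerTooth(int teeth) {
    return 360.0 / teeth;
}
```

With 10 teeth and 25-30 msec between interrupts, that works out to 250-300 msec per revolution (200-240 RPM) and one 36-degree sector per tooth, so each LIDAR measurement has to finish comfortably inside a single 25-30 msec interval.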

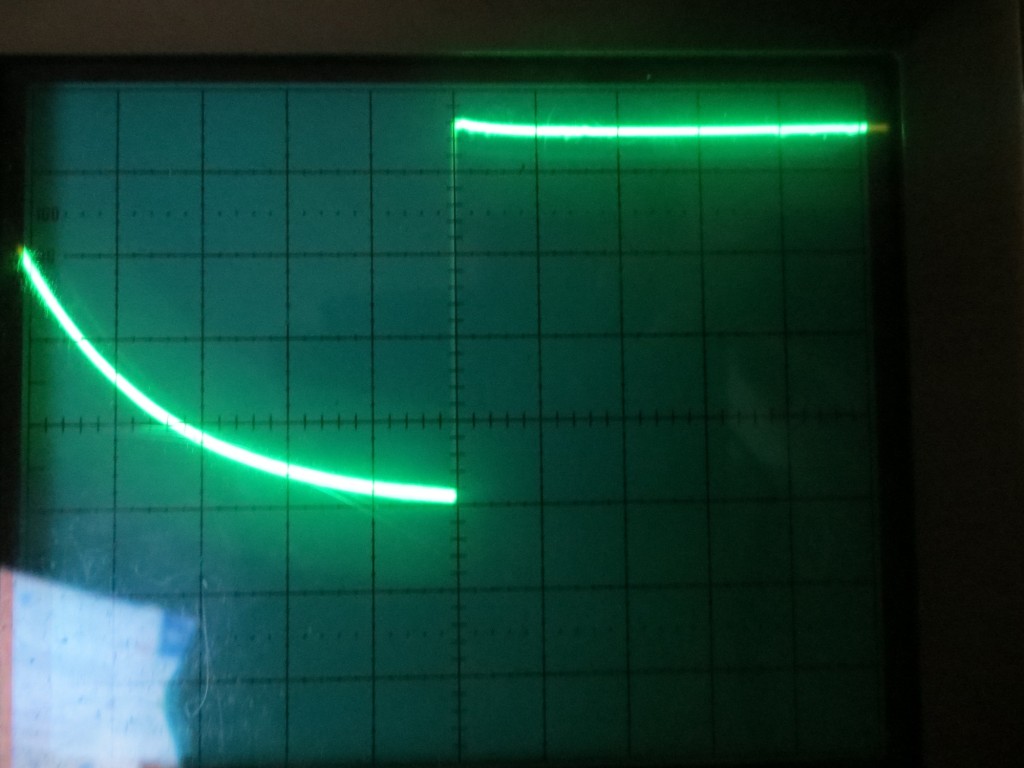

Well, as it turns out, I wasn’t quite home free after all. As often happens, the reality is a bit more complicated than that. When I first received my V2 ‘Blue Label’ unit and made some initial tests, I immediately started having ‘lockup’ problems of one sort or another, even using the Arduino example sketches provided by Pulsed Light, and with a 470 uF BAC (big-assed capacitor) installed (Pulsed Light recommends 680 uF, but 470 uF was the biggest I had readily available).

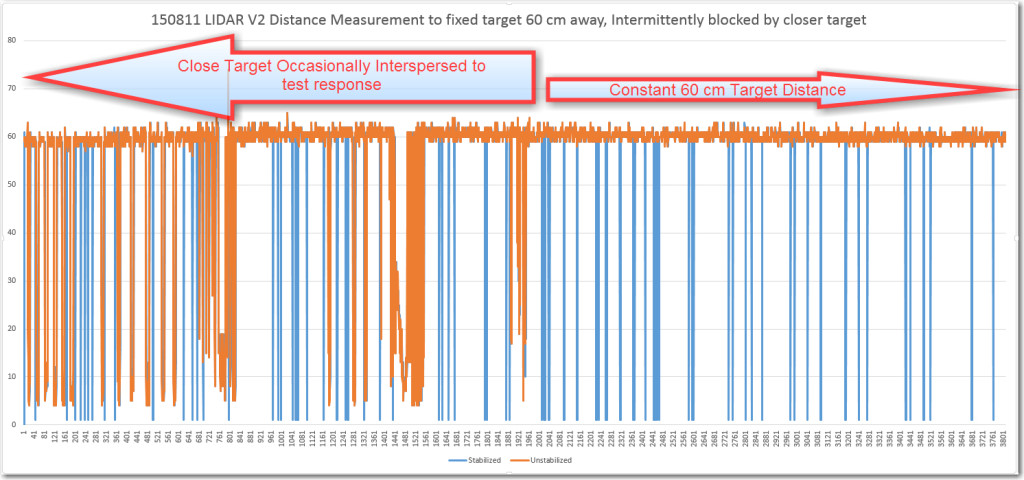

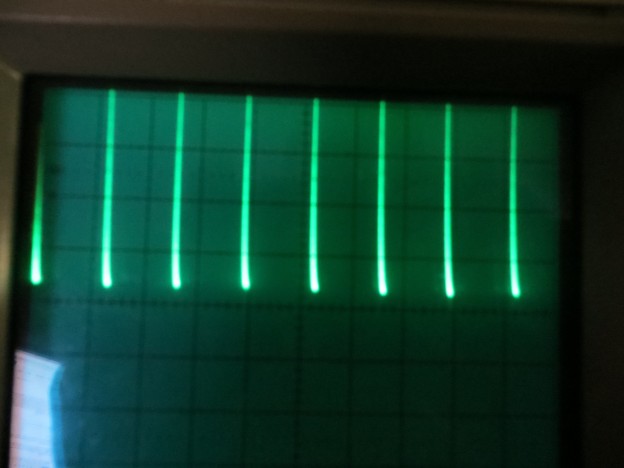

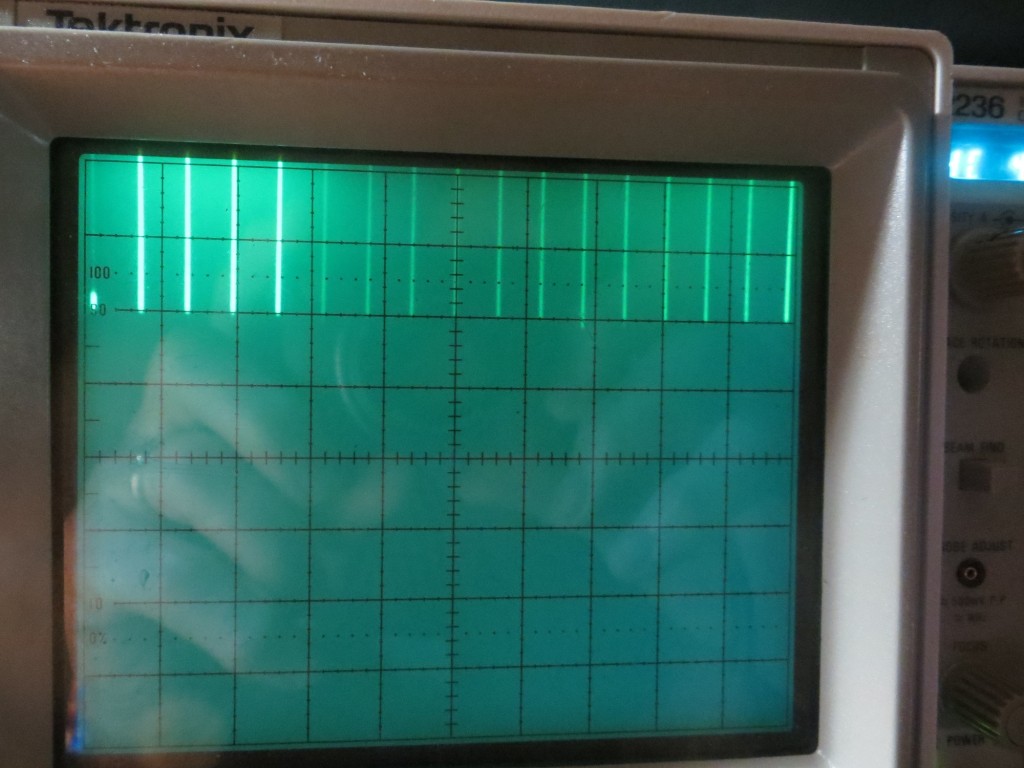

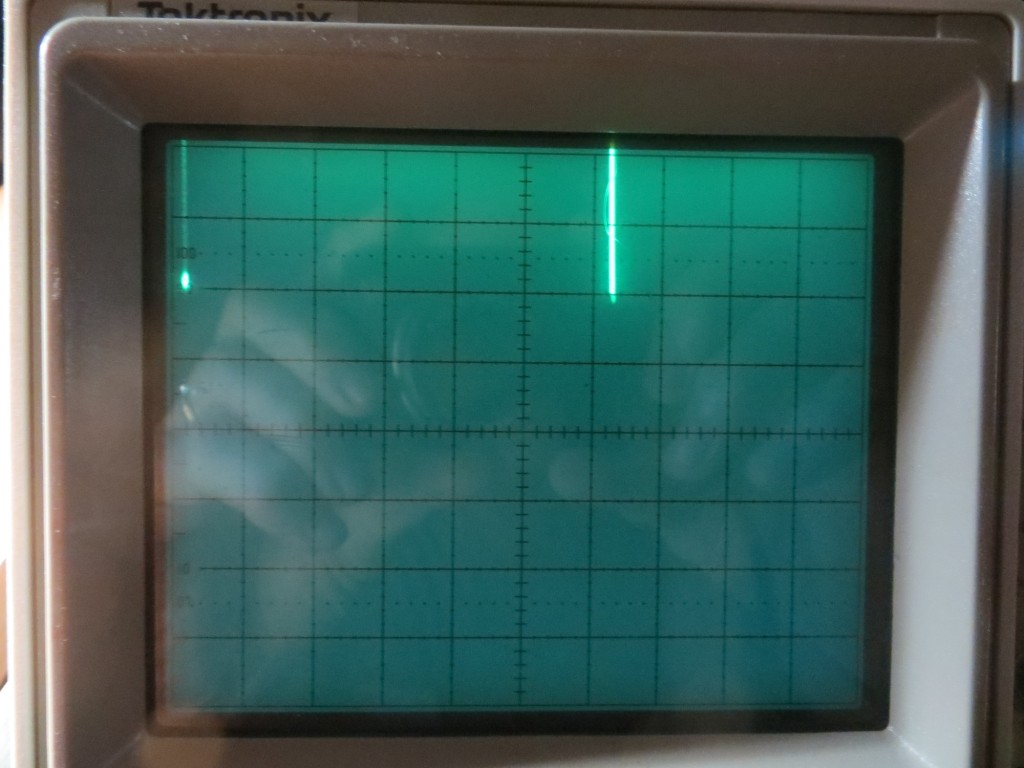

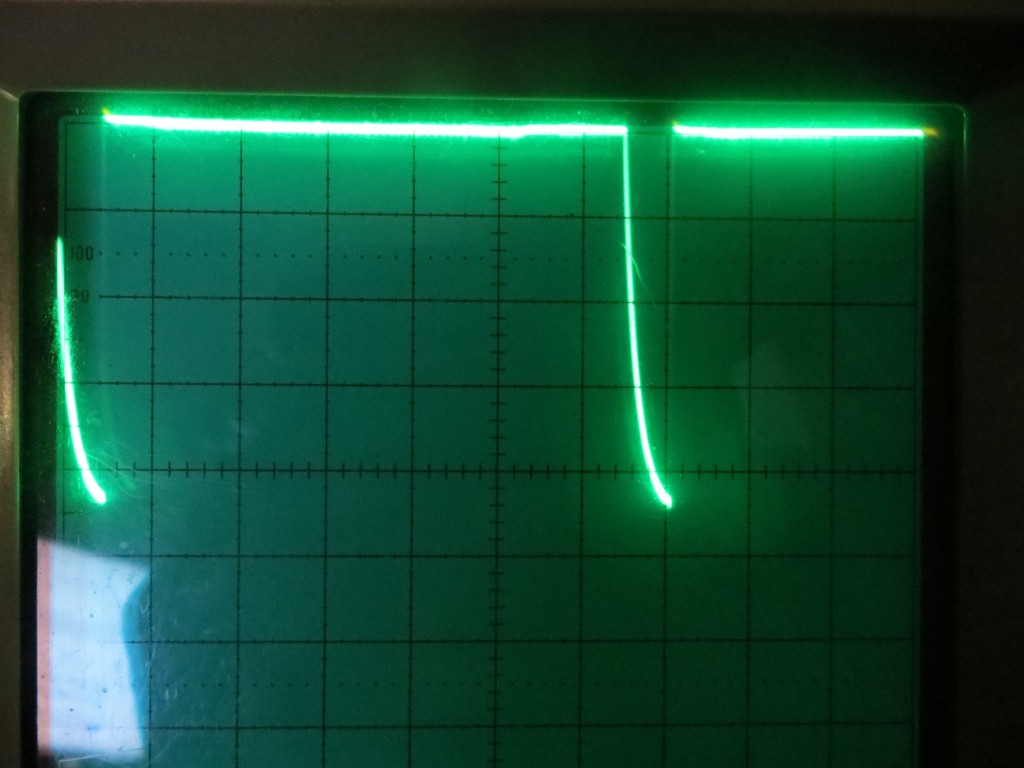

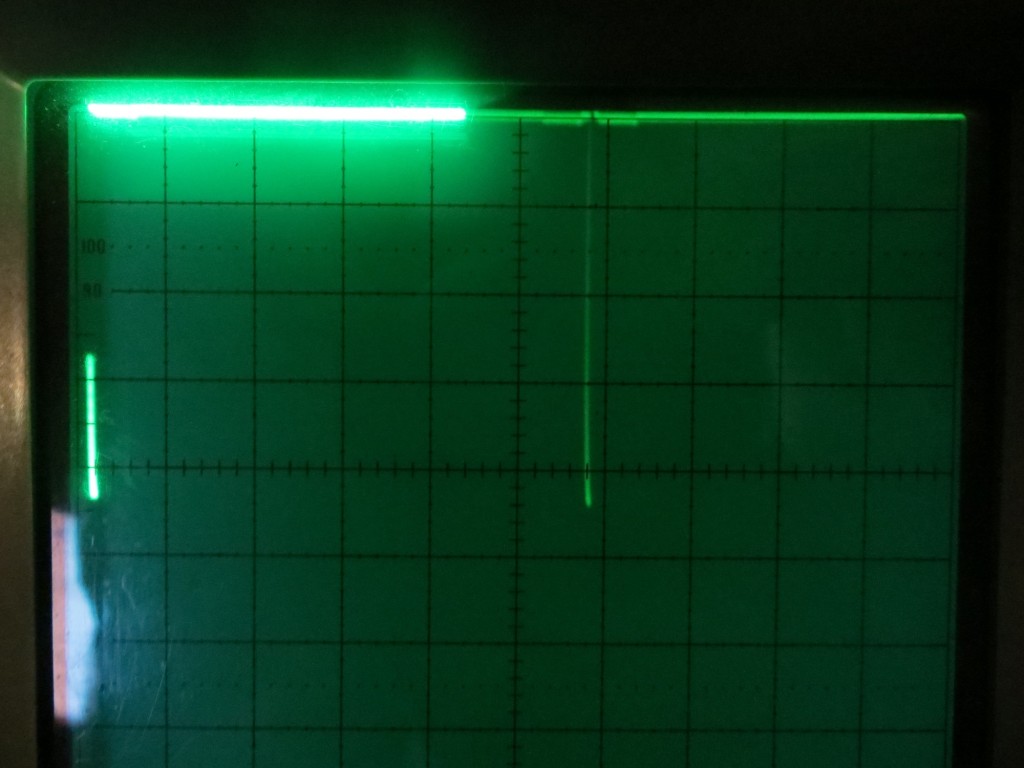

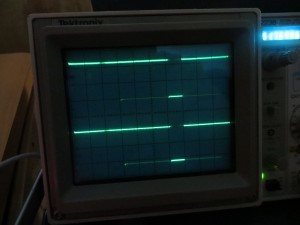

The Pulsed Light supplied ‘Distance as fast as Possible’ Arduino sketch makes a single call to the V2 measurement routine with ‘stabilization’ enabled, and then makes another 99 calls to the routine with ‘stabilization’ disabled. The idea is that the extra time required for the stabilization process is only necessary every 100 measurements or so. The provided test sketch calls Serial.println() for every measurement, but this can quickly overload the serial port and/or PC buffers. So, I modified the sketch to print the result of the single ‘stabilized’ measurement plus only the last (of 99) ‘unstabilized’ measurements. This seemed to work *much* better, but then I noticed that the ‘stabilized’ measurement was showing occasional ‘drop-outs’ where the reported distance to a constant target 60 cm away was 1 cm instead of 60 cm. Strange.
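The modified measurement pattern can be sketched roughly as follows. This is not the Pulsed Light sketch or my actual code, just a minimal illustration of the idea: `BurstResult`, `measureBurst`, and the `readDistanceCm` callback are hypothetical names, and the callback stands in for whatever library call takes one measurement with stabilization on or off.

```cpp
// Result of one burst: the single 'stabilized' reading plus the last
// of the 'unstabilized' readings that follow it.
struct BurstResult {
    int stabilizedCm;
    int lastUnstabilizedCm;
};

// Take one stabilized measurement, then (burstLength - 1) unstabilized ones,
// keeping only the two values worth printing.
// readDistanceCm is a hypothetical callable: it takes a bool (true means
// stabilization enabled) and returns a distance in cm.
template <typename ReadFn>
BurstResult measureBurst(ReadFn readDistanceCm, int burstLength = 100) {
    BurstResult result{};
    result.stabilizedCm = readDistanceCm(true);
    // Fallback so the field is defined even if burstLength is 1.
    result.lastUnstabilizedCm = result.stabilizedCm;
    for (int i = 1; i < burstLength; ++i) {
        // Intermediate readings are overwritten; only the final one survives.
        result.lastUnstabilizedCm = readDistanceCm(false);
    }
    return result;
}
```

On the Uno, the main loop would call something like this once per pass and print just the two returned values, so the serial port sees two lines per 100 measurements instead of 100.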

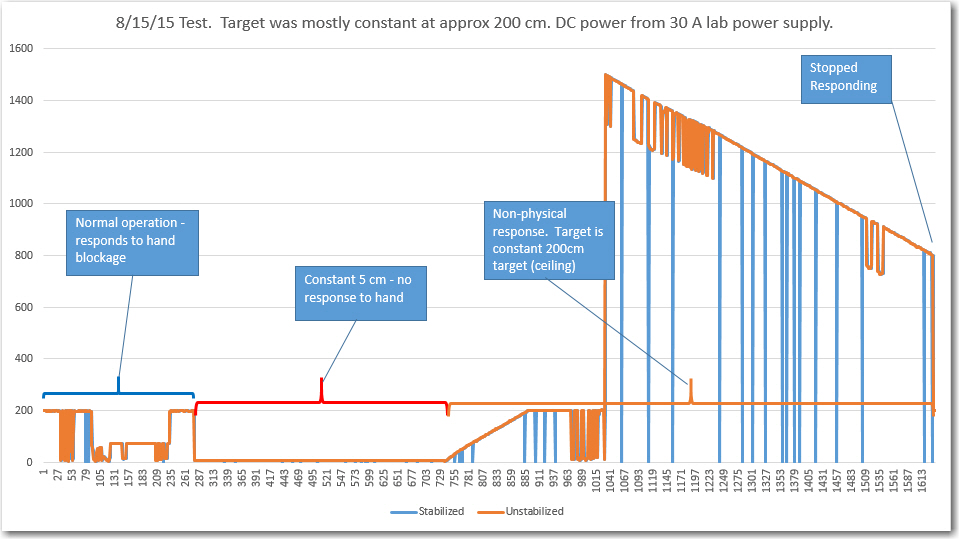

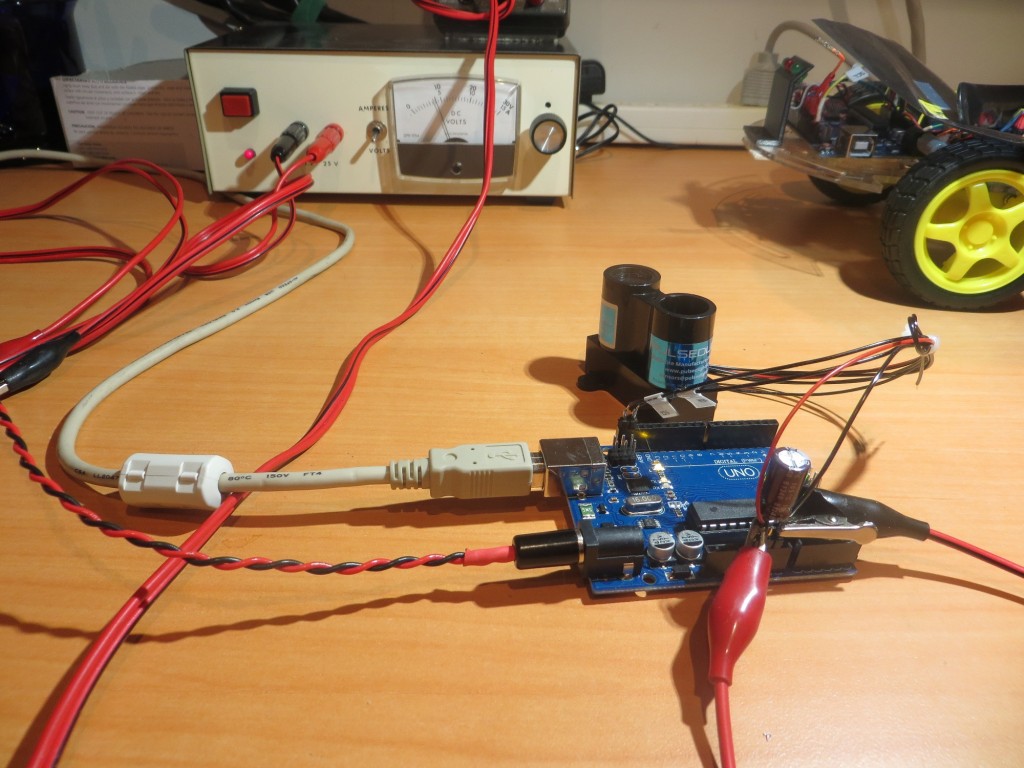

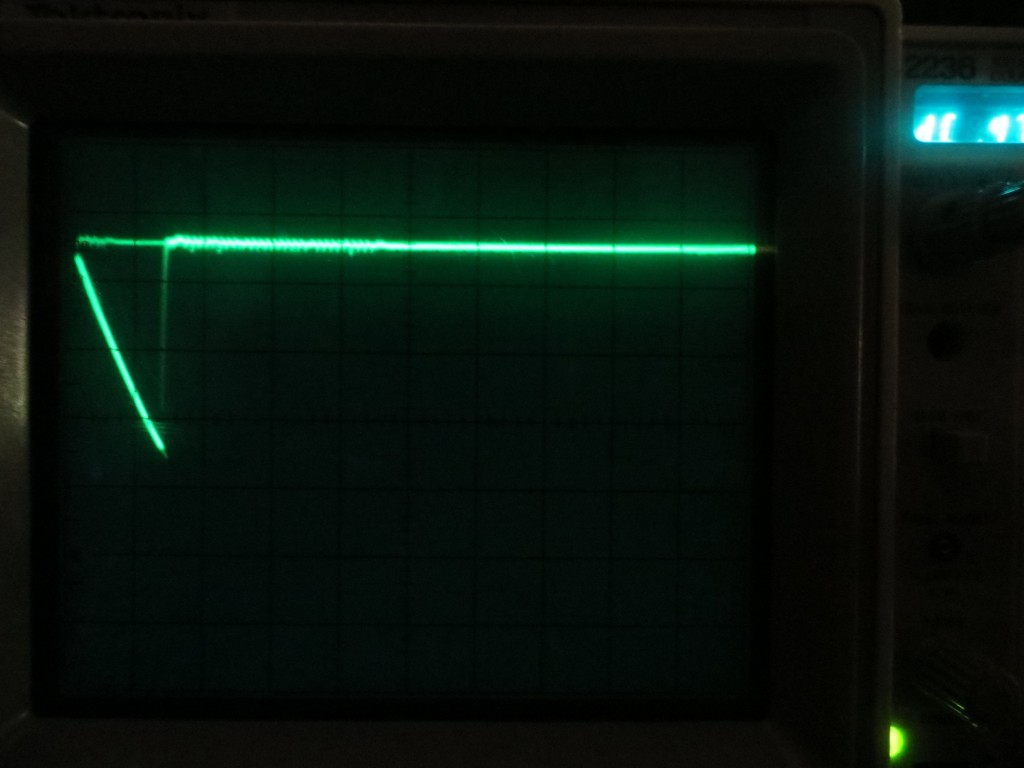

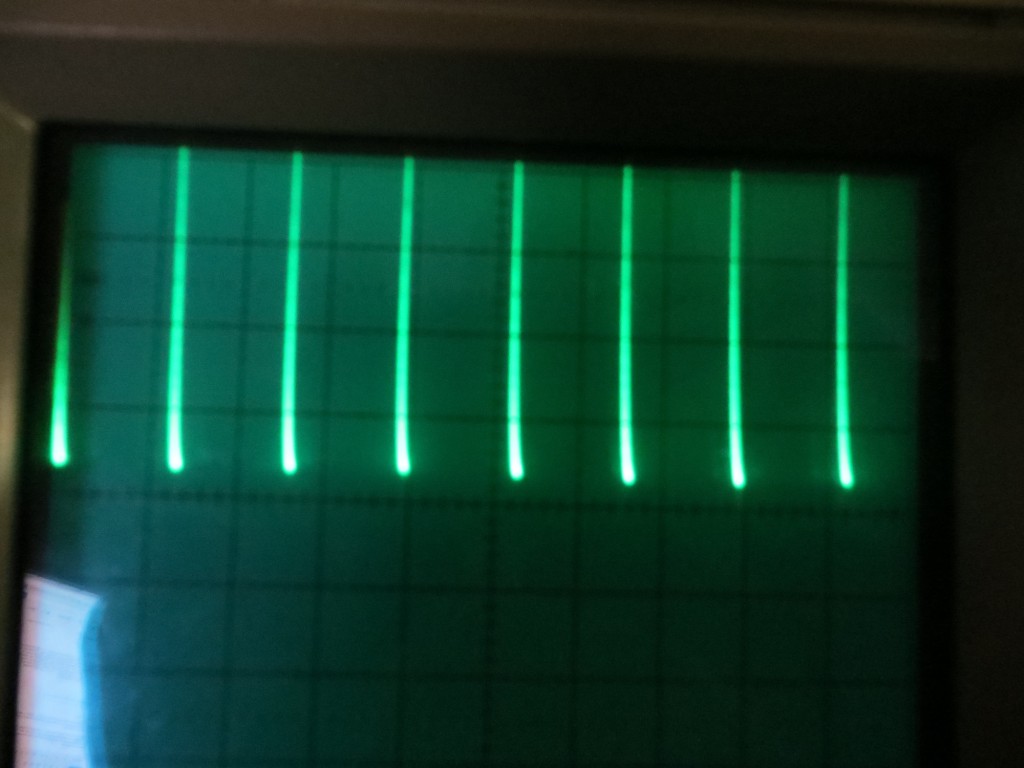

I passed this all along to the Pulsed Light folks (Austin and Bob, who have been very responsive the entire time). They suggested that the smaller cap might be the problem, so I ordered 680 uF replacements from DigiKey. When they arrived, I ran the same test again, but this time I not only had the ‘stabilized measurement dropout’ problem, but now the unit was consistently hanging up after a few minutes as well. More conversation with Austin/Bob indicated that I should try using an external power supply for the Arduino rather than depending on the USB port to supply the necessary current. So, I made up a power cable so I could run the Uno from my lab power supply and tried again, with basically the same result. The V2 unit will run normally for a while (with ‘stabilization drop-outs’ as before) and then at some point will go ‘ga-ga’ and start supplying obviously erroneous results, followed at some point by a complete lack of response that requires a power cycle to regain control.

All this went back to Austin/Bob for them to cogitate on, and hopefully they will be able to point out what I’m doing wrong. In the meantime, I have ordered a couple of ‘Genuine’ Arduino Uno boards to guard against the possibility that all these problems are being caused by some deficiency in clone Unos that won’t be present in ‘genuine’ ones. A guy can hope, anyways! ;-).

Stay Tuned!

Frank