Posted 08 December 2023

WallE3 went with us last month when we travelled to St. Louis for Thanksgiving with family, and I showed off his autonomous wall following skills. WallE3 actually did great for quite a while – that is until he found himself staring at the side of a floor-mounted wine cooler (wine ‘safe’?). As can be seen in the following short video, WallE3 fell in love with the cooler, and showed his love by repeatedly head-butting it – oops!

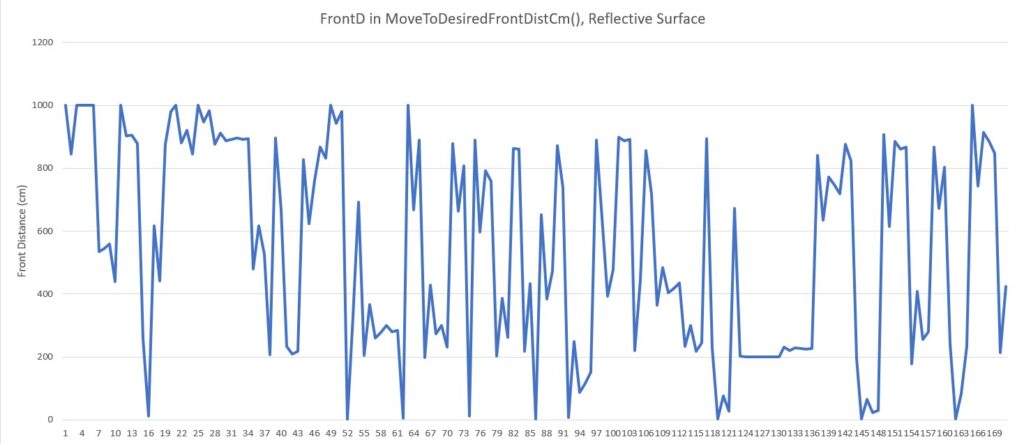

After looking at the telemetry data for the run, I saw that WallE3 was measuring much larger front distances – like several hundred centimeters – when it was only a few centimeters from the object. It appears he was backing up to a defined front distance in response to a ‘WALL_OFFSET_DISTANCE_AHEAD’ anomaly, but somehow convinced himself that instead of being 20cm from the wall, he was actually more like 100cm away. Of course, since he wanted to be at 30cm (the desired wall offset distance), he drove forward to lessen the distance, thereby bonking into the wall. Then, when he hit the wall, the front LIDAR line-of-sight geometry changed enough to produce a true measurement of just a few centimeters, which then sent WallE3 running backwards to open up the distance. Lather, rinse, repeat. Here’s an Excel plot of a representative run (not the exact one, as I somehow lost the actual telemetry data for this run).

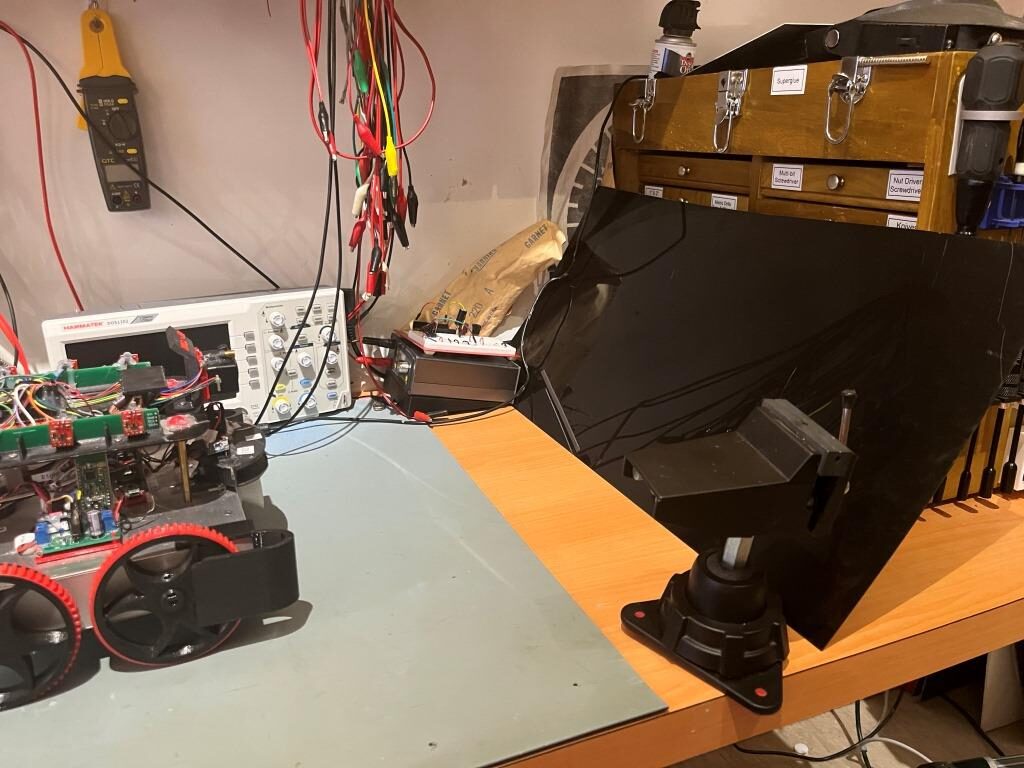

As can be seen from the above plot, the measured distance oscillates between the maximum measurable distance of 1000cm and nearly zero. Here’s a photo of the experimental setup that produced the above data.

The ‘reflective surface’ is a piece of glossy black translucent plastic, oriented at an angle to reflect WallE3’s LIDAR beam upward to the ceiling and then the reflected signal from the ceiling back to WallE3.

I’ve been thinking about this issue ever since first seeing it in St. Louis, but hadn’t come up with any firm ideas about how to solve it. I tinkered with the idea of generating two running averages of the front distance when in the ‘MoveToDesiredFrontDistCm()’ function, with the two averages separated in time by some amount. When approaching a normal non-reflective surface, the two averages would closely track each other, but when approaching a reflective surface that produced the above dramatic distance shifts, then the two averages would be dramatically different around the transitions. This could then be detected, and something done to recover. Then last night while falling asleep, I wondered whether or not the STMicro VL53LXX infra-red LIDAR sensors would have the same problem – hmm, maybe not! If that were the case, then I could probably run one in parallel with the Garmin LIDAR unit, and use it instead of the Garmin for all ‘MoveToDesiredFront/RearDistCm()’ calls.
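The two-running-averages idea can be sketched roughly as follows. This is only an illustration of the concept, not code from WallE3: the `DistHistory` struct, the `AveragesDiverge()` helper, and the window length, lag and threshold values are all hypothetical choices made for the example.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <cstdint>

// Hypothetical sizes, chosen only for illustration
constexpr std::size_t HISTORY_LEN = 16; // samples kept in the ring buffer
constexpr std::size_t AVG_LEN = 3;      // samples in each running average
constexpr std::size_t LAG = 3;          // samples separating the two averages

struct DistHistory
{
    std::uint16_t samples[HISTORY_LEN] = {};
    std::size_t count = 0; // total number of samples pushed so far

    void Push(std::uint16_t distCm)
    {
        samples[count % HISTORY_LEN] = distCm;
        count++;
    }

    // Average of AVG_LEN samples, ending 'lag' samples before the newest one
    float WindowAvg(std::size_t lag) const
    {
        assert(AVG_LEN + lag <= HISTORY_LEN);
        assert(count >= AVG_LEN + lag);
        float sum = 0.0f;
        for (std::size_t i = 0; i < AVG_LEN; i++)
        {
            sum += samples[(count - 1 - lag - i) % HISTORY_LEN];
        }
        return sum / AVG_LEN;
    }
};

// True when the newest average and the lagged average differ by more than maxDeltaCm
bool AveragesDiverge(const DistHistory& h, float maxDeltaCm)
{
    if (h.count < AVG_LEN + LAG)
    {
        return false; // not enough data yet to compare the two windows
    }
    return std::fabs(h.WindowAvg(0) - h.WindowAvg(LAG)) > maxDeltaCm;
}
```

On a steady approach the two averages differ only by the distance covered during the lag, so `maxDeltaCm` would have to sit comfortably above that; a jump from a few tens of centimeters to several hundred would exceed it within a few samples.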

I tried this experiment using the currently installed rear distance sensors by calling ‘MoveToDesiredRearDistCm()’ with the same reflective surface setup as before, as shown in the following photo:

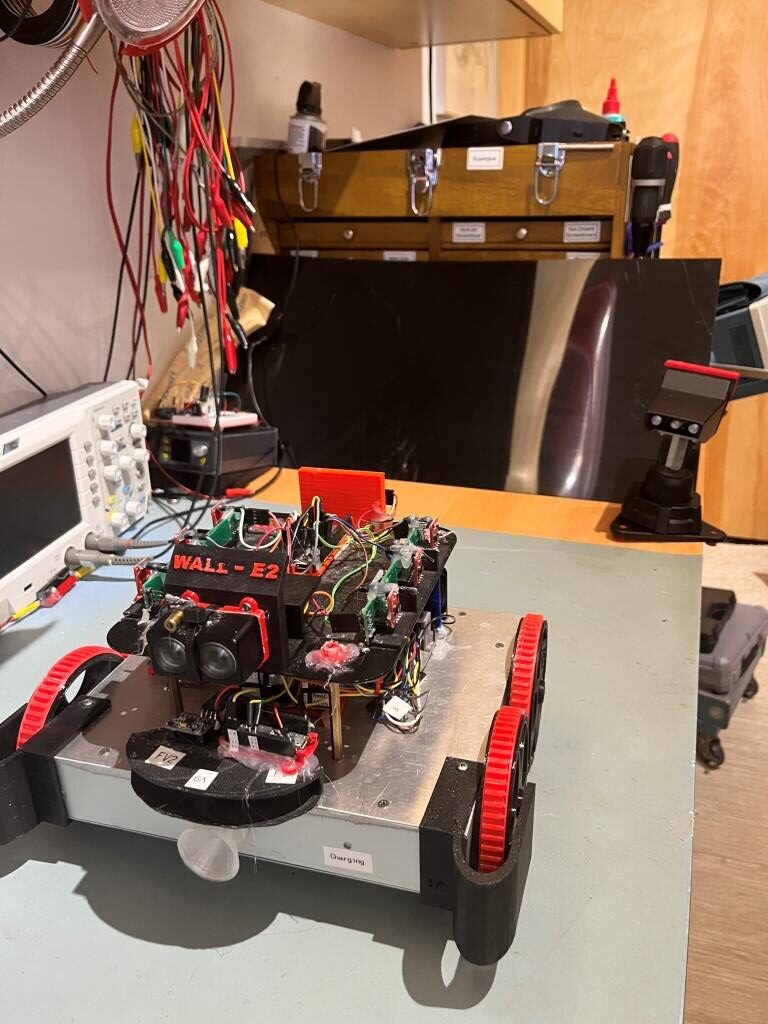

Here’s an Excel plot of the rear distance run:

As can be seen in the above plot, the rear distance run was completely normal, so the VL53LXX infra-red LIDAR sensors don’t have the same problem – at least not with this translucent glossy plastic material.

10 December 2023 Update:

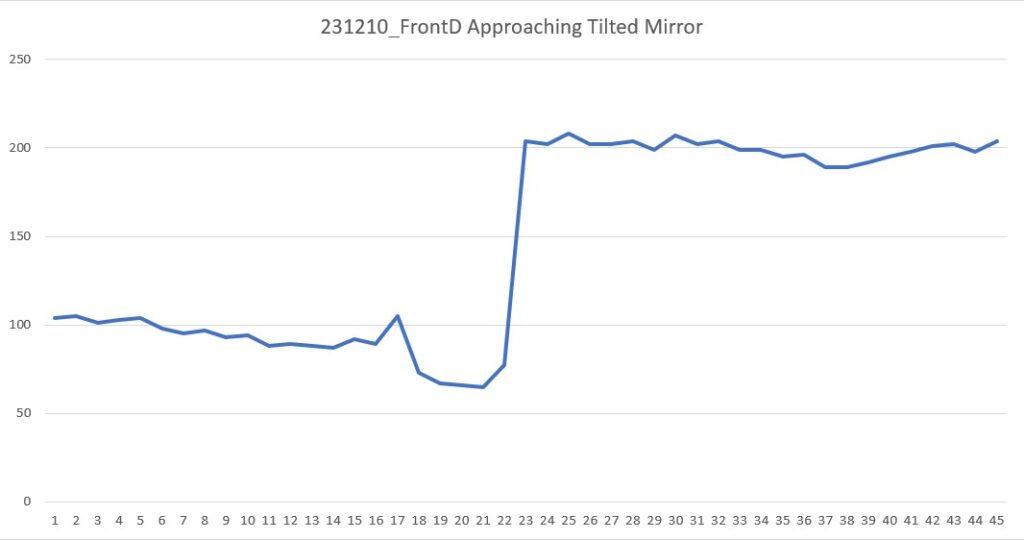

I got to thinking that maybe the reason the rear distance sensor worked so well with the shiny black material is that it could be IR transparent, meaning that while the front sensor (an LED LIDAR system) would see a ‘mirror’, the rear sensor would just see the toolbox. So, I jumped up on Amazon and got a cheap mirror square so I could answer that question. Here is a short video and Excel plot showing a ‘MoveToDesiredFrontDistCm()’ run with the new mirror square.

As can be seen from the video, WallE was perfectly happy to drive right through the mirror, but I had visions of mirror pieces all over the bench, the floor, and me, so I manually prevented that from happening. Here’s an Excel plot showing the same run:

As the Excel plot shows, the robot did OK for the first 20 measurements (about 1sec), but immediately thereafter the measured distance jumped to a steady 200cm, and it stayed that way until I stopped the run at about 2sec.

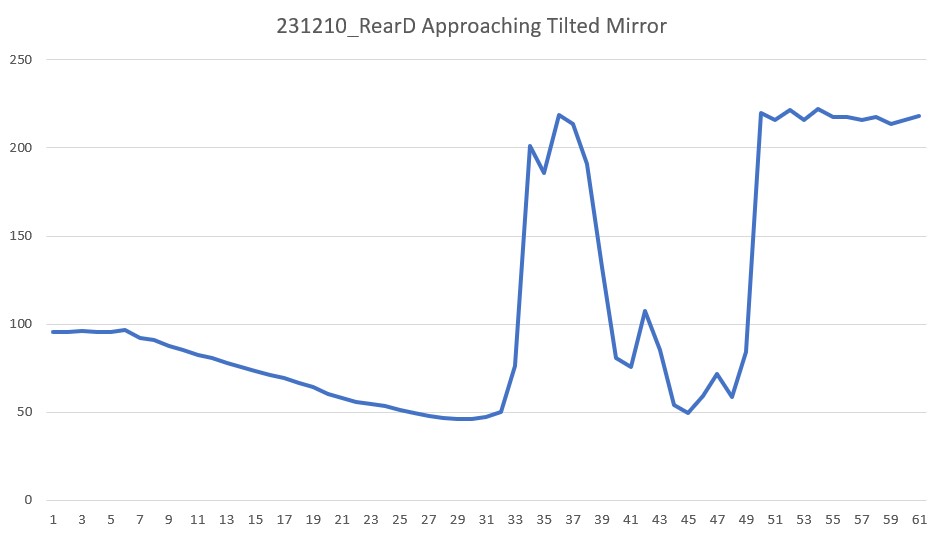

Then I tried the same experiment, but this time utilizing the STMicro VL53LXX IR LIDAR sensor on the rear of the robot, as shown in the following short video:

As the video shows, the IR LIDAR behaved pretty much the same as the LED LIDAR (no real surprise, as they are both LIDAR technology, but still a bummer!).

Here’s the Excel plot for this run:

As the Excel plot shows, the distance decreased monotonically for the first 30 points (about 1.5sec) but then shot up to 200 due to the mirror. I believe the lower distances after about point 40 (2sec) were due to me interfering with the IR beam.

So, unfortunately my theory about the rear IR sensor doing better with the shiny black plastic ‘mirror’ because it appeared transparent at that wavelength seems to be bolstered, so I now think that using the VL53LXX sensor instead of the Garmin LIDAR LED sensor for ‘MoveToFrontDistCm()’ operations is NOT going to work. Back to the drawing board :(.

23 December 2023 Update:

I’ve been thinking about this problem for a while now, and have not come up with a good answer; WallE3’s perception of the world around it depends entirely on LIDAR-type distance sensors, so if those sensors produce ‘false’ distance reports due to mirror or mirror-ish surfaces, WallE3 has no way to know the ‘true’ distance – bummer!

So, what I decided to do is to simply detect the symptoms of the ‘mirrored surface’ situation, halt the robot and yell for help. The detection algorithm uses the knowledge that when the robot is moving forward toward a ‘normal’ object, the front distance should decrease monotonically with time. Similarly when the robot is moving backwards toward a ‘normal’ object, the rear distance should decrease monotonically with time. Conversely, when approaching a ‘mirror-like’ object, the measured distance tends to be unstable, with distance increasing instead of decreasing.

The detection algorithm calculates a three-point average each time through the action loop, and compares the result to the last time through the loop. If the new average is greater than the old average, a ‘mirrored surface detection’ is declared and the robot calls ‘YellForHelp()’ which stops the motors and emits an audible Morse code ‘SOS’.

Here’s the full code for the ‘DoOneMoveToFrontDistCm()’ function that actually moves the robot and checks for ‘mirrored surface’ error conditions:

```cpp
bool DoOneMoveToFrontDistCm(uint16_t offsetCm, float Kp, float Ki, float Kd)
{
  float lastError = 0;
  float lastInput = 0;
  float lastIval = 0;
  float lastDerror = 0;
  uint16_t oldAvgDist = GetAvgFrontDistCm(); //added 12/19/23

  MsecSinceLastDistUpdate = 0; //01/08/22 not using ISR anymore
  MsecSinceLastFrontDistUpdate = 0; //added 10/02/22 to slow front LIDAR update rate

  gl_LastAnomalyCode = ANOMALY_NONE;

  //12/23/23 display ten distances from front dist array before start
  gl_pSerPort->printf("In DoOneMoveToFrontDistCm() at top, displaying 10 front dist entries\n\n");
  gl_pSerPort->printf("idx\tFrontD[idx]\n");
  for (int i = 9; i >= 0; i--)
  {
    gl_pSerPort->printf("%d\t%d\n", FRONT_DIST_ARRAY_SIZE - 1 - i, aFrontDistCM[FRONT_DIST_ARRAY_SIZE - 1 - i]);
  }
  gl_pSerPort->printf("\n");

  gl_pSerPort->printf("Msec\tFrontD\tTgtD\toldAvgD\tnewAvgD\tErr\tIval\tOutput\tspeed\tFrontVar\tRearVar\n");

  while (abs(gl_FrontCm - offsetCm) > 1 && gl_LastAnomalyCode != ANOMALY_STUCK_AHEAD
    && gl_LastAnomalyCode != ANOMALY_STUCK_BEHIND)
  {
    CheckForUserInput();

    if (MsecSinceLastDistUpdate >= MSEC_PER_DIST_UPDATE)
    {
      MsecSinceLastDistUpdate -= MSEC_PER_DIST_UPDATE;

      UpdateAllEnvironmentParameters(); //10/01/23 this can change anomaly code
      uint16_t newAvgDist = GetAvgFrontDistCm(); //avg of last three front dist readings

      OffsetDistOutput = PIDCalcs((double)gl_FrontCm, (float)offsetCm, lastError, lastInput, lastIval, lastDerror, Kp, Ki, Kd);

      int16_t speed = (int16_t)OffsetDistOutput;

      //PIDCalcs out can be negative, must handle both signs
      if (speed >= 0)
      {
        speed = (speed > MOTOR_SPEED_QTR) ? MOTOR_SPEED_QTR : speed;
      }
      else //speed < 0
      {
        speed = (speed < -MOTOR_SPEED_QTR) ? -MOTOR_SPEED_QTR : speed;
      }

      gl_pSerPort->printf("%lu\t%d\t%d\t%d\t%d\t%2.1f\t%2.1f\t%2.1f\t%d\t%2.0f\t%2.0f\n",
        millis(), gl_FrontCm, offsetCm, oldAvgDist, newAvgDist, lastError, lastIval, OffsetDistOutput, speed, gl_Frontvar, gl_Rearvar);

      RunBothMotorsBidirectional(-speed, -speed);
      delay(100);

      //11/17/23 added to zero out lastIval anytime Kp*Err > max speed (MOTOR_SPEED_QTR here)
      //if (abs(gl_FrontCm - offsetCm)*OffsetDistKp > MOTOR_SPEED_QTR)
      if (abs(gl_FrontCm - offsetCm) * Kp > MOTOR_SPEED_QTR)
      {
        lastIval = 0;
      }

      //12/19/23 rev to return false if the average distance starts going the wrong way
      //  (i.e. going up if the robot is moving forward, or down if the robot
      //  is moving backwards)
      //12/24/23 Just '>' wasn't enough to prevent false positives, so Added '*1.1' factor
      //note: '&&' binds tighter than '||', so as written the abs(speed) test
      //  applies only to the motors-reversed branch
      if ((AreBothMotorsForward() && newAvgDist > oldAvgDist * 1.1)
        || (AreBothMotorsReversed() && newAvgDist * 1.1 < oldAvgDist)
        && abs(speed) > MOTOR_SPEED_MIN)
      {
        gl_pSerPort->printf("\nIn DoOneMoveToFrontDistCm() in 'mirrored sfc' detection block, displaying 10 front dist entries\n\n");

        //12/23/23 display last 10 distances from front dist array
        gl_pSerPort->printf("idx\tFrontD[idx]\n");
        for (int i = 9; i >= 0; i--)
        {
          gl_pSerPort->printf("%d\t%d\n", FRONT_DIST_ARRAY_SIZE - 1 - i, aFrontDistCM[FRONT_DIST_ARRAY_SIZE - 1 - i]);
        }
        gl_pSerPort->printf("\n");

        return false;
      }

      oldAvgDist = newAvgDist;
    }
  }

  return true;
}
```

02 January 2024 Update:

I wasn’t really happy with my previous attempt at ‘mirrored surface’ detection, as it seemed pretty prone to producing false positives. After thinking about the problem some more, I thought I might be able to use a distance variance calculation as a more robust detection method. The idea is that a normal monotonic increase or decrease in distance measurements would have a pretty low variance, while a distance reversal would generate a much larger value.
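To put rough numbers on that idea, here is a worked 5-point example. The readings are invented for illustration (they are not taken from WallE3's telemetry), and a population variance (divide by N) is assumed; the robot's own variance routine may be defined differently.

$$
\sigma^2 = \frac{1}{N}\sum_{i=1}^{N}\left(d_i - \bar{d}\right)^2, \qquad \bar{d} = \frac{1}{N}\sum_{i=1}^{N} d_i
$$

For a steady approach of 100, 98, 96, 94, 92 cm, the mean is 96 and

$$
\sigma^2 = \frac{4^2 + 2^2 + 0^2 + (-2)^2 + (-4)^2}{5} = \frac{40}{5} = 8
$$

For a sequence that jumps partway through, 100, 98, 96, 200, 200 cm, the mean is 138.8 and

$$
\sigma^2 = \frac{(-38.8)^2 + (-40.8)^2 + (-42.8)^2 + 2\,(61.2)^2}{5} = \frac{12492.8}{5} \approx 2498.6
$$

That is a difference of more than two orders of magnitude between the two cases.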

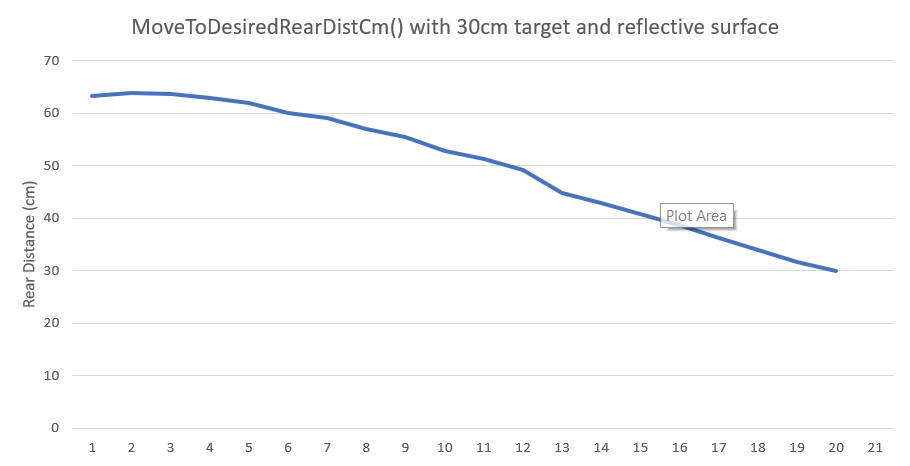

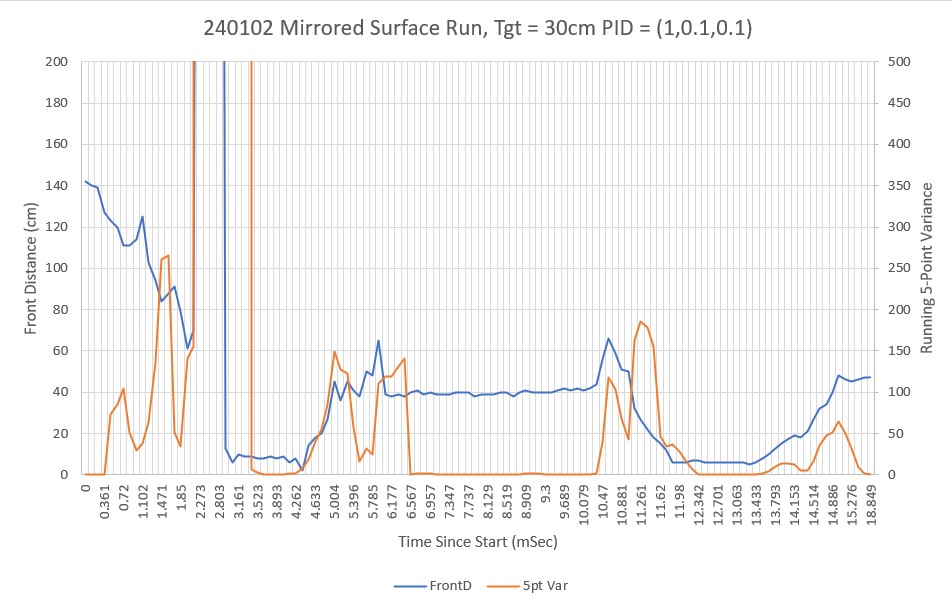

So, I ran some simulations in Excel using a 5-point running variance calculation, and the results were encouraging. Then, with the help of my lovely lab assistant, I set up an experiment in my office sandbox to see if I could capture a representative mirrored surface ‘screwup’, as shown below:

And here is an Excel plot showing the results (both the distance and 5-pt variance vertical scales have been truncated to show the smaller scale variations):

As can be seen in the video and the Excel plot, the robot undergoes a number of distinct front/back oscillations, but then eventually settles down. It is clear from the plot that the 5-pt variance calculation is a good indicator of a ‘mirrored surface’ condition. Just looking at the plot, it appears that a variance threshold of 40-60 should provide for robust detection without much of a risk of false positives.

One other note about this experiment. To get multiple oscillations as shown in the video and plot, the mirrored surface had to be slanted slightly up toward the ceiling. If the surface was oriented vertically like a normal wall, the robot would often miss the first distance and hit the wall, but then would typically back off to the correct distance. I think this indicates that in the vertical configuration, there is enough backscatter from the floor and/or the robot itself to get a reasonable (if not entirely accurate) LIDAR distance measurement.

07 January 2024 Update:

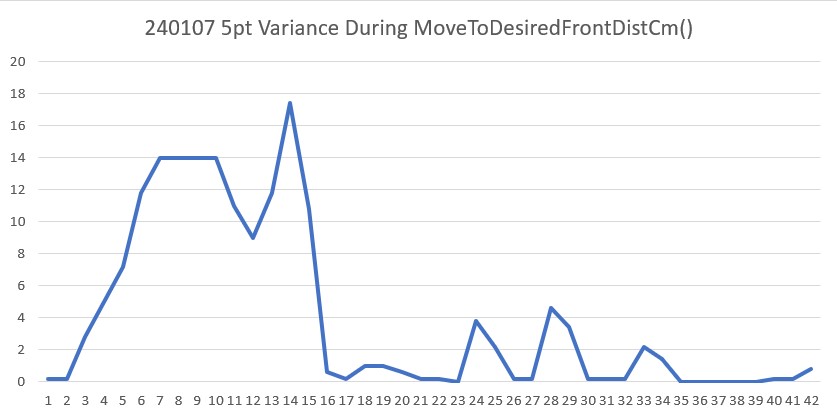

After playing around some more with this issue, I think the above 5pt variance calculation for ‘mirrored surface’ detection will work, so I revised my current well-tested ‘CalcBruteFrontDistArrayVariance()’ function to take an integer argument denoting the number of elements to use, starting at the end (most recent data) and working backwards.

Then I ran another test in my lab, but this time on a non-mirrored surface, to verify that the 5pt variance calc would still work properly and to settle on a good threshold for ‘mirror’ detection. The Excel plot below shows the results of a run where the robot moved backwards (still using the front LIDAR sensor) to 90cm from the wall.

As can be seen, the variance starts out in the 10 to 20 range during the initial ‘coarse’ distance movement, but then drops into the 0 to 5 range during the second ‘fine tuning’ movement. Comparing this to the previous ‘mirrored surface’ plot leads me to believe that a threshold of 40 would almost certainly (eventually) detect a ‘mirrored surface’ condition.
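A variance over just the most recent N readings, compared against the threshold of 40, might look something like the sketch below. This is not the robot's actual function: `CalcTrailingVariance()` and `LooksLikeMirroredSurface()` are hypothetical names, a population variance is assumed, and the array is assumed to hold 100 entries with the newest reading at the end.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>

// Assumed array length for this sketch
constexpr std::size_t FRONT_DIST_ARRAY_SIZE = 100;

// Threshold taken from the plots discussed above
constexpr float MIRROR_VAR_THRESHOLD = 40.0f;

// Population variance of the newest n entries of the front distance array.
// Assumes the most recent reading is stored in the last element.
float CalcTrailingVariance(const std::uint16_t (&dist)[FRONT_DIST_ARRAY_SIZE], std::size_t n)
{
    assert(n > 0 && n <= FRONT_DIST_ARRAY_SIZE);
    const std::size_t first = FRONT_DIST_ARRAY_SIZE - n;

    float sum = 0.0f;
    for (std::size_t i = first; i < FRONT_DIST_ARRAY_SIZE; i++)
    {
        sum += dist[i];
    }
    const float mean = sum / n;

    float sumSq = 0.0f;
    for (std::size_t i = first; i < FRONT_DIST_ARRAY_SIZE; i++)
    {
        const float dev = dist[i] - mean;
        sumSq += dev * dev;
    }
    return sumSq / n;
}

// True when the 5-point trailing variance exceeds the mirror threshold
bool LooksLikeMirroredSurface(const std::uint16_t (&dist)[FRONT_DIST_ARRAY_SIZE])
{
    return CalcTrailingVariance(dist, 5) > MIRROR_VAR_THRESHOLD;
}
```

Because only the last five readings contribute, the value reacts within a few samples of a distance reversal and drops back just as quickly once the readings settle.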

24 January 2024 Update:

I added the ‘CalcFrontNPointVar(uint16_t N)’ function to the robot code so I could obtain the front variance using just the last N front distances instead of the entire 100 point array. This turned out to work very well for detecting the ‘Mirrored Surface’ anomaly condition. Then I added ‘ANOMALY_MIRRORED_SFC’ to the list of anomaly codes, and added a ‘case’ block in ‘HandleAnomalousConditions()’ to deal with this condition. The handler function is:

```cpp
case ANOMALY_MIRRORED_SFC: //01/21/24 added
  gl_LastAnomalyCode = ANOMALY_NONE; //rev 09/30/23 to clear anomaly flag
  gl_pSerPort->printf("ANOMALY_MIRRORED_SFC case detected with tracking case %s\n", WallTrackStrArray[trackcase]);
  gl_pSerPort->printf("In ANOMALY_MIRRORED_SFC case just before call to RunToDaylightV2() with last anomaly code = %s\n", AnomalyStrArray[gl_LastAnomalyCode]);
  gl_CurTrackingCase = RunToDaylightV2(); //added 10/2/23
  gl_pSerPort->printf("In ANOMALY_MIRRORED_SFC case: RunToDaylightV2 returned = %s\n", WallTrackStrArray[gl_CurTrackingCase]);
  break;
```

At the moment, all it does is call the ‘RunToDaylightV2()’ function, which does a 360º search for the best direction in which to move next. Here’s the telemetry and a short video showing the action.

```
DoOneMoveToFrontDistCm() returned false with gl_LastAnomalyCode == ANOMALY_MIRRORED_SFC
MoveToDesiredFrontDistCm returning false
FrontBackMotionTest(): MoveToDesiredFrontDistCm() failed with anomaly code = MIRRORED_SURFACE - Quitting!
In HandleAnomalousConditions(NEITHER) with last anomaly code = MIRRORED_SURFACE
ANOMALY_MIRRORED_SFC case detected with tracking case NEITHER
In ANOMALY_MIRRORED_SFC case just before call to RunToDaylightV2() with last anomaly code = NONE
In RunToDaylightV2()
Idx  Hdg     FrontD  LeftD   RightD  LSteer  RSteer  Avail
0    62.6    380     82.0    246.7   0.7     -1.0    0
1    75.9    526     95.4    318.2   0.2     0.5     1
2    86.4    142     101.3   294.9   0.3     -0.6    0
3    97.9    181     107.4   241.4   0.3     0.1     0
4    109.2   168     89.7    242.8   0.7     -0.7    0
5    119.3   143     102.2   209.9   1.0     1.0     0
6    129.3   123     179.5   162.2   0.1     0.3     0
7    139.8   379     170.0   149.3   0.2     -0.2    0
8    150.4   443     149.5   171.7   0.0     -0.1    0
9    160.8   390     126.1   103.9   0.2     0.7     0
10   170.9   315     143.8   88.6    0.0     -0.1    1
11   -178.9  340     148.7   168.4   -0.1    1.0     0
12   -169.2  227     170.1   86.5    0.2     0.5     1
13   -158.0  249     160.3   94.0    1.0     -1.0    0
14   -148.8  192     130.1   112.6   1.0     0.7     0
15   -138.8  147     127.7   83.6    1.0     0.7     0
16   -128.4  147     189.4   85.8    0.5     -0.4    1
17   -118.2  108     312.0   97.3    1.0     0.2     1
18   -108.6  84      204.5   97.5    1.0     -0.1    1
19   -98.4   137     293.3   101.8   -0.1    -0.1    0
20   -88.5   182     307.3   109.3   -0.6    -0.2    0
21   -78.9   75      242.9   102.3   -0.3    0.6     0
22   -67.9   116     238.6   90.6    1.0     -1.0    0
23   -57.1   79      178.8   167.9   1.0     -0.3    0
24   -47.3   61      151.2   177.9   0.3     0.2     0
25   -37.7   95      152.4   166.7   -0.4    0.2     0
26   -28.2   95      164.2   144.0   -0.3    0.9     0
27   -18.5   98      100.3   147.4   -0.1    -1.0    0
28   -8.6    101     89.1    148.9   0.5     -0.0    0
29   1.1     101     174.3   149.4   -1.0    -0.3    0
30   11.5    69      85.2    172.5   -0.3    -0.2    1
31   21.3    69      87.2    183.7   0.7     1.0     0
32   31.1    182     112.1   138.9   -0.3    0.1     0
33   40.5    170     76.6    137.6   0.7     -1.0    0
34   50.2    162     80.0    248.1   0.4     -1.0    1
35   61.6    362     94.8    352.2   0.1     -0.6    1
In RunToDaylightV2()
RunToDaylightV2(): After REV sort, arrays are
Idx  Hdg     FrontD  LeftD   RightD  LSteer  RSteer  Avail
0    75.9    526     95.4    318.2   0.2     0.5     1
1    150.4   443     149.5   171.7   0.0     -0.1    0
2    160.8   390     126.1   103.9   0.2     0.7     0
3    62.6    380     82.0    246.7   0.7     -1.0    0
4    139.8   379     170.0   149.3   0.2     -0.2    0
5    61.6    362     94.8    352.2   0.1     -0.6    1
6    -178.9  340     148.7   168.4   -0.1    1.0     0
7    170.9   315     143.8   88.6    0.0     -0.1    1
8    -158.0  249     160.3   94.0    1.0     -1.0    0
9    -169.2  227     170.1   86.5    0.2     0.5     1
10   -148.8  192     130.1   112.6   1.0     0.7     0
11   31.1    182     112.1   138.9   -0.3    0.1     0
12   -88.5   182     307.3   109.3   -0.6    -0.2    0
13   97.9    181     107.4   241.4   0.3     0.1     0
14   40.5    170     76.6    137.6   0.7     -1.0    0
15   109.2   168     89.7    242.8   0.7     -0.7    0
16   50.2    162     80.0    248.1   0.4     -1.0    1
17   -128.4  147     189.4   85.8    0.5     -0.4    1
18   -138.8  147     127.7   83.6    1.0     0.7     0
19   119.3   143     102.2   209.9   1.0     1.0     0
20   86.4    142     101.3   294.9   0.3     -0.6    0
21   -98.4   137     293.3   101.8   -0.1    -0.1    0
22   129.3   123     179.5   162.2   0.1     0.3     0
23   -67.9   116     238.6   90.6    1.0     -1.0    0
24   -118.2  108     312.0   97.3    1.0     0.2     1
25   -8.6    101     89.1    148.9   0.5     -0.0    0
26   1.1     101     174.3   149.4   -1.0    -0.3    0
27   -18.5   98      100.3   147.4   -0.1    -1.0    0
28   -37.7   95      152.4   166.7   -0.4    0.2     0
29   -28.2   95      164.2   144.0   -0.3    0.9     0
30   -108.6  84      204.5   97.5    1.0     -0.1    1
31   -57.1   79      178.8   167.9   1.0     -0.3    0
32   -78.9   75      242.9   102.3   -0.3    0.6     0
33   21.3    69      87.2    183.7   0.7     1.0     0
34   11.5    69      85.2    172.5   -0.3    -0.2    1
35   -47.3   61      151.2   177.9   0.3     0.2     0
FrontD  Hdg     LeftD   LSteer  RightD  RSteer
at index = 0, FrontD = 526, bWallTrackAvailable = 1
Trackable Wall found at index = 0
FrontD  Hdg     LeftD   LSteer  RightD  RSteer
526     75.9    95.4    0.2     318.2   0.5
Turning to heading = 75.9
Just after turning to 75.9
FrontD  Hdg     LeftD   LSteer  RightD  RSteer
526     75.9    95.4    0.2     318.2   0.5
In RunToDaylightV2() with trackable wall on left side: trkdir = LEFT
RunToDaylightV2() returning LEFT
In ANOMALY_MIRRORED_SFC case: RunToDaylightV2 returned = LEFT
```

This experiment led to one of those “Well, DUH!!” moments, as the robot’s preferred ‘RunToDaylight()’ heading was right back at the mirrored surface!

After recovering from my ‘face-palm’ moment, I realized I needed to modify the ‘RunToDaylight()’ function to take heading-start & heading-end parameters to exclude the mirrored surface sector from the ‘RunToDaylight()’ search for an appropriate recovery heading.

28 January 2024 Update:

I modified ‘RunToDaylightV2()’ to take two float parameters denoting the starting and ending headings for the search. In the normal non-mirrored surface case, ‘RunToDaylightV2()’ is called with no arguments. The no-argument overload simply calls ‘RunToDaylightV2(startHdg, endHdg)’ with startHdg = endHdg. In the mirrored surface case, the two-parameter version of ‘RunToDaylightV2()’ is called from the ‘ANOMALY_MIRRORED_SFC’ case block of ‘HandleAnomalousConditions()’.
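The heading arithmetic behind the excluded sector can be sketched as below. The helper names (`WrapHdgDeg()`, `CwHdgDiffDeg()`, `SearchSectorAroundMirror()`) and the `SearchSector` struct are hypothetical and are not the robot's functions. The sketch assumes headings in degrees in the range (-180, 180] with clockwise as increasing heading, and that equal start and end headings mean a full 360º sweep, as the no-argument case suggests.

```cpp
#include <cassert>
#include <cmath>

// Wrap a heading in degrees into the range (-180, 180]
float WrapHdgDeg(float hdgDeg)
{
    float wrapped = std::fmod(hdgDeg + 180.0f, 360.0f);
    if (wrapped <= 0.0f)
    {
        wrapped += 360.0f;
    }
    return wrapped - 180.0f;
}

// Clockwise sweep in degrees from startHdg to endHdg, in the range (0, 360].
// Equal headings are treated as a full 360-degree sweep (an assumption).
float CwHdgDiffDeg(float startHdg, float endHdg)
{
    float diff = std::fmod(endHdg - startHdg, 360.0f);
    if (diff <= 0.0f)
    {
        diff += 360.0f;
    }
    return diff;
}

struct SearchSector
{
    float startHdg; // heading where the clockwise search begins
    float endHdg;   // heading where the clockwise search ends
};

// Search sector that skips +/- halfWidthDeg around the current heading,
// i.e. around the mirrored surface the robot is assumed to be facing
SearchSector SearchSectorAroundMirror(float curHdgDeg, float halfWidthDeg)
{
    assert(halfWidthDeg > 0.0f && halfWidthDeg < 180.0f);
    return { WrapHdgDeg(curHdgDeg + halfWidthDeg), WrapHdgDeg(curHdgDeg - halfWidthDeg) };
}
```

With a current heading of -0.3º and a half-width of 20º, this gives a search that starts at 19.7º and sweeps 320º clockwise to -20.3º, the same sector that appears in the telemetry for this test run; the 20º half-width is inferred from that telemetry rather than stated anywhere in the code shown here.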

Here’s a short video and the telemetry from a test run toward a mirrored surface.

```
MTFD: at start, tgt = 30cm, curr_dist = 518, PID = 1.00, 0.10, 0.10
In DoOneMoveToFrontDistCm() at top, displaying 10 front dist entries
Msec    FrontD  5ptVar
abs(gl_FrontCm - offsetCm) * Kp = 497.0 -- Zeroing lastIval!
227898  527     186.8
Warning! Mirrored surface detected in DoOneMoveToFrontDistCm()!
Setting anomaly code to ANOMALY_MIRRORED_SFC
idx FrontD
0 99 527
1 98 527
2 97 504
3 96 504
4 95 538
DoOneMoveToFrontDistCm() returned false with gl_LastAnomalyCode == ANOMALY_MIRRORED_SFC
MoveToDesiredFrontDistCm returning false
FrontBackMotionTest(): MoveToDesiredFrontDistCm() failed with anomaly code = MIRRORED_SURFACE - Quitting!
In HandleAnomalousConditions(NEITHER) with last anomaly code = MIRRORED_SURFACE
ANOMALY_MIRRORED_SFC case detected with tracking case NEITHER
In ANOMALY_MIRRORED_SFC with IMUHdgValDeg = -0.3
In ANOMALY_MIRRORED_SFC with startdeg = 19.7, enddeg = -20.3
In RunToDaylightV2(19.7, -20.3)
GetHdgDiff(1, 19.7, -20.3)
GetHdgDiff - Else block: starthdg = 19.7, endhdg = -20.3
GetHdgDiff - Else block: CCW_hdg_diff = 40.0, CW_hdg_diff = 320.0
GetHdgDiff: hdg_diff = 320.0
RunToDaylightV2: spindeg 8.0
RunToDaylightV2: turning 320.0deg CW from 19.7 to -20.3
turning to startHdg 19.7
Idx  Hdg     FrontD  LeftD   RightD  LSteer  RSteer  Avail
0    16.1    162     109.2   283.6   0.1     1.0     0
1    24.6    171     110.7   292.3   0.1     0.3     0
2    34.9    208     115.9   309.9   0.2     0.7     0
3    44.7    195     127.8   264.0   0.3     0.5     0
4    54.3    226     123.2   200.4   0.6     1.0     0
5    65.1    192     198.4   150.5   0.6     0.4     0
6    73.3    159     205.1   136.7   0.1     0.2     0
7    82.9    410     196.8   122.4   -0.1    -0.3    0
8    93.8    607     165.8   54.0    0.1     0.9     0
9    101.8   313     149.6   50.3    0.2     -0.1    1
10   112.6   279     171.9   64.2    -0.1    -1.0    0
11   122.1   331     182.5   87.0    -0.0    -1.0    0
12   132.9   202     202.6   150.5   0.6     1.0     0
13   141.1   199     199.4   72.9    1.0     0.2     1
14   149.1   151     205.0   72.9    1.0     -0.8    0
15   158.3   119     178.5   104.8   1.0     -0.9    0
16   167.7   104     175.2   104.9   1.0     0.8     0
17   176.6   127     205.8   84.2    1.0     -0.3    1
18   -175.5  46      270.5   93.6    0.1     -1.0    0
19   -166.1  69      289.7   108.2   -1.0    -0.1    0
20   -158.2  72      302.1   110.8   -1.0    -0.1    0
21   -150.3  118     297.2   115.7   -1.0    -0.3    0
22   -141.0  165     285.9   127.1   1.0     -0.5    0
23   -131.8  67      193.4   134.6   1.0     -0.4    0
24   -122.6  66      160.3   139.1   1.0     -1.0    0
25   -113.3  108     137.1   204.3   0.7     0.1     0
26   -104.4  102     120.0   205.7   0.3     -0.3    0
27   -95.2   68      118.4   195.8   -0.9    0.6     0
28   -86.1   96      50.9    163.2   -0.6    1.0     0
29   -77.2   104     47.9    172.6   0.1     -0.1    1
30   -68.0   110     57.8    178.1   1.0     0.1     0
31   -58.8   120     80.4    194.0   1.0     0.2     0
32   -49.1   123     147.1   208.7   -0.2    -0.1    0
33   -39.2   103     72.1    214.0   -0.1    0.0     1
34   -29.5   184     69.7    205.2   0.2     0.8     1
35   -21.1   205     101.0   176.7   0.0     0.9     0
RunToDaylightV2(): After REV sort, arrays are
Idx  Hdg     FrontD  LeftD   RightD  LSteer  RSteer  Avail
0    93.8    607     165.8   54.0    0.1     0.9     0
1    82.9    410     196.8   122.4   -0.1    -0.3    0
2    122.1   331     182.5   87.0    -0.0    -1.0    0
3    101.8   313     149.6   50.3    0.2     -0.1    1
4    112.6   279     171.9   64.2    -0.1    -1.0    0
5    54.3    226     123.2   200.4   0.6     1.0     0
6    34.9    208     115.9   309.9   0.2     0.7     0
7    -21.1   205     101.0   176.7   0.0     0.9     0
8    132.9   202     202.6   150.5   0.6     1.0     0
9    141.1   199     199.4   72.9    1.0     0.2     1
10   44.7    195     127.8   264.0   0.3     0.5     0
11   65.1    192     198.4   150.5   0.6     0.4     0
12   -29.5   184     69.7    205.2   0.2     0.8     1
13   24.6    171     110.7   292.3   0.1     0.3     0
14   -141.0  165     285.9   127.1   1.0     -0.5    0
15   16.1    162     109.2   283.6   0.1     1.0     0
16   73.3    159     205.1   136.7   0.1     0.2     0
17   149.1   151     205.0   72.9    1.0     -0.8    0
18   176.6   127     205.8   84.2    1.0     -0.3    1
19   -49.1   123     147.1   208.7   -0.2    -0.1    0
20   -58.8   120     80.4    194.0   1.0     0.2     0
21   158.3   119     178.5   104.8   1.0     -0.9    0
22   -150.3  118     297.2   115.7   -1.0    -0.3    0
23   -68.0   110     57.8    178.1   1.0     0.1     0
24   -113.3  108     137.1   204.3   0.7     0.1     0
25   167.7   104     175.2   104.9   1.0     0.8     0
26   -77.2   104     47.9    172.6   0.1     -0.1    1
27   -39.2   103     72.1    214.0   -0.1    0.0     1
28   -104.4  102     120.0   205.7   0.3     -0.3    0
29   -86.1   96      50.9    163.2   -0.6    1.0     0
30   -158.2  72      302.1   110.8   -1.0    -0.1    0
31   -166.1  69      289.7   108.2   -1.0    -0.1    0
32   -95.2   68      118.4   195.8   -0.9    0.6     0
33   -131.8  67      193.4   134.6   1.0     -0.4    0
34   -122.6  66      160.3   139.1   1.0     -1.0    0
35   -175.5  46      270.5   93.6    0.1     -1.0    0
FrontD  Hdg     LeftD   LSteer  RightD  RSteer
at index = 0, FrontD = 607, bWallTrackAvailable = 0
at index = 1, FrontD = 410, bWallTrackAvailable = 0
at index = 2, FrontD = 331, bWallTrackAvailable = 0
at index = 3, FrontD = 313, bWallTrackAvailable = 1
Trackable Wall found at index = 3
FrontD  Hdg     LeftD   LSteer  RightD  RSteer
313     101.8   149.6   0.2     50.3    -0.1
Turning to heading = 101.8
Just after turning to 101.8
FrontD  Hdg     LeftD   LSteer  RightD  RSteer
313     101.8   149.6   0.2     50.3    -0.1
In RunToDaylightV2() with trackable wall on right side: trkdir = RIGHT
RunToDaylightV2() returning RIGHT
In ANOMALY_MIRRORED_SFC case: RunToDaylightV2 returned = RIGHT
```
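The selection step visible in the telemetry (sort the scan by front distance, largest first, then take the first entry with a trackable wall) can be sketched as follows. This is a reading of the log output rather than the actual ‘RunToDaylightV2()’ code; the `ScanEntry` struct and `PickRecoveryHeading()` function are hypothetical names for illustration.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <vector>

// One row of the heading scan (a subset of the columns in the telemetry)
struct ScanEntry
{
    float hdgDeg;   // heading at which the measurements were taken
    int frontCm;    // front distance at that heading
    bool trackable; // corresponds to the 'Avail' column
};

// Sorts the scan by front distance, largest first, and returns the index
// (in the sorted order) of the first trackable entry, or -1 if none exists.
// The relative order of entries with equal front distance is unspecified.
int PickRecoveryHeading(std::vector<ScanEntry>& scan)
{
    std::sort(scan.begin(), scan.end(),
        [](const ScanEntry& a, const ScanEntry& b) { return a.frontCm > b.frontCm; });

    for (std::size_t i = 0; i < scan.size(); i++)
    {
        if (scan[i].trackable)
        {
            return static_cast<int>(i);
        }
    }
    return -1; // no heading with a trackable wall
}
```

Fed the rows for headings 82.9, 93.8, 101.8, 122.1 and 141.1 from the run above, this picks sorted index 3 (heading 101.8, front distance 313), which matches the ‘Trackable Wall found at index = 3’ line in the log.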

Stay tuned!

Frank