Posted 08/18/2015

Well, I have to say that the Pulsed Light tech support has been fantastic as I have been trying to work my way through ‘issues’ with both the V1 ‘Silver Label’ and V2 ‘Blue Label’ LIDAR systems (see my previous posts here and here). I know they must be thinking “how did we get stuck with this guy – he seems to be able to break anything we send to him!” I keep expecting them to say “Look – return both units and we’ll give you twice your money back – as long as you promise NOT to buy anything from us ever again!”, but so far that hasn’t happened ;-).

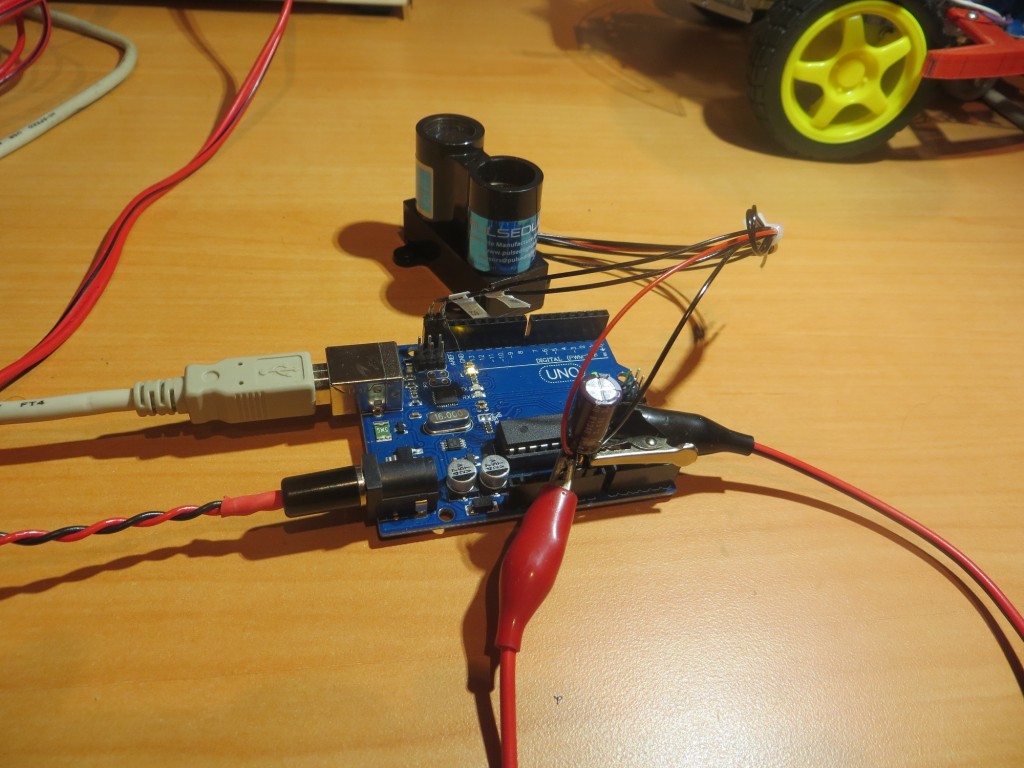

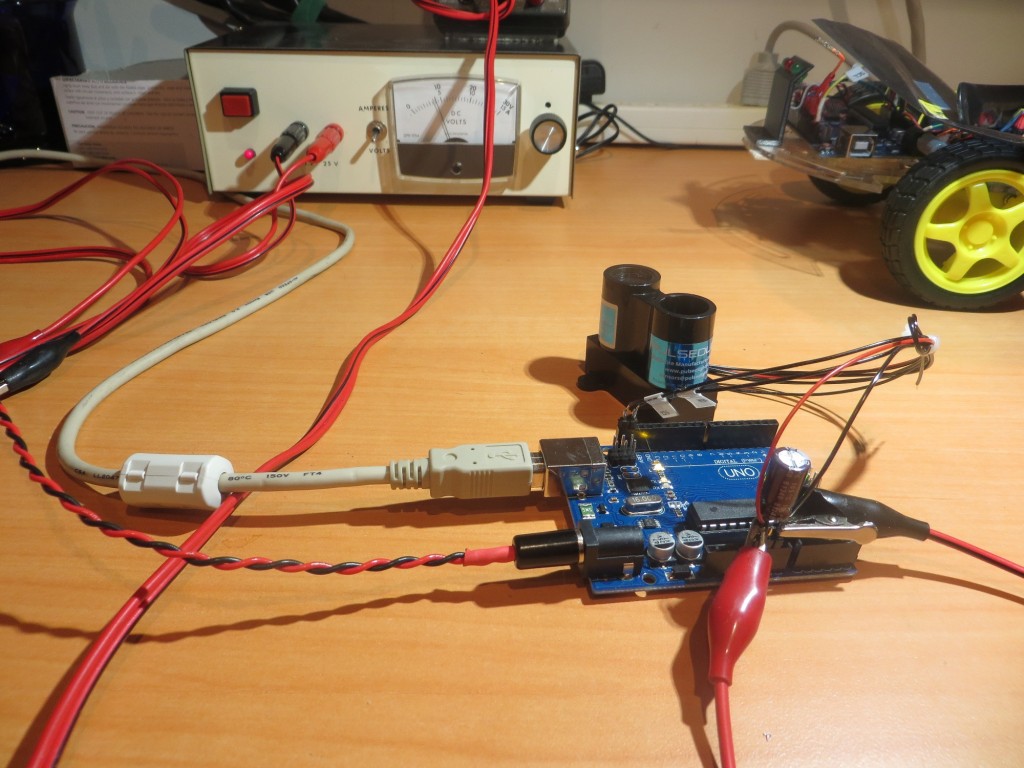

In my last round of emails with Austin (apparently one of two support guys; Bob is the other one, but he is on vacation, so Austin is stuck with me), he mentioned that he had found & fixed a bug or two in the V2 support libraries, and suggested that I download them again and see if that fixes some/all of the issues I’m seeing here with my ‘Blue Label’ V2 unit.

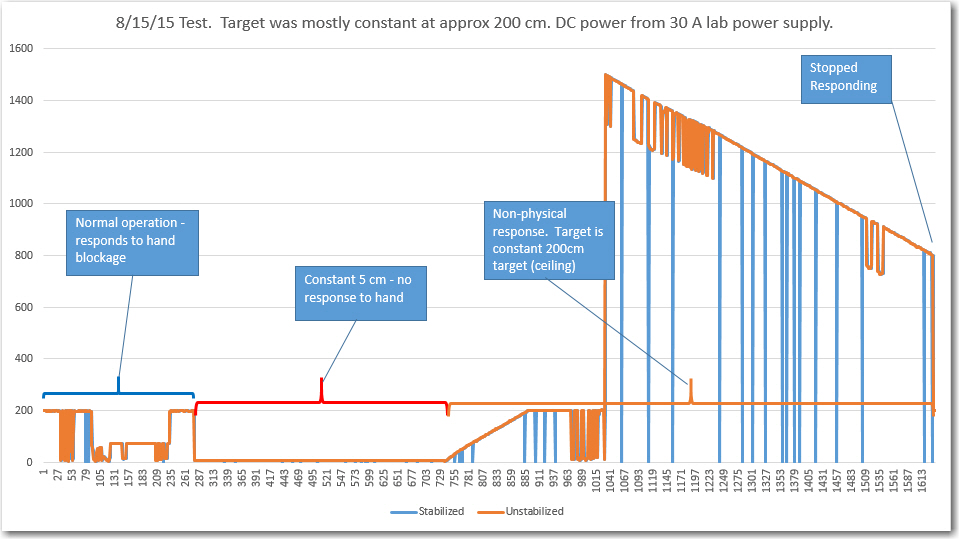

So, I downloaded the new library, loaded up one of my two newly-arrived ‘genuine’ Arduino Uno boards with their ‘Distance As Fast as Possible’ example sketch, and gave it a whirl. After at least 45 minutes so far of run time, the ‘Blue Label’ unit is still humming along nicely, with no hangups and no dropouts – YAY!!

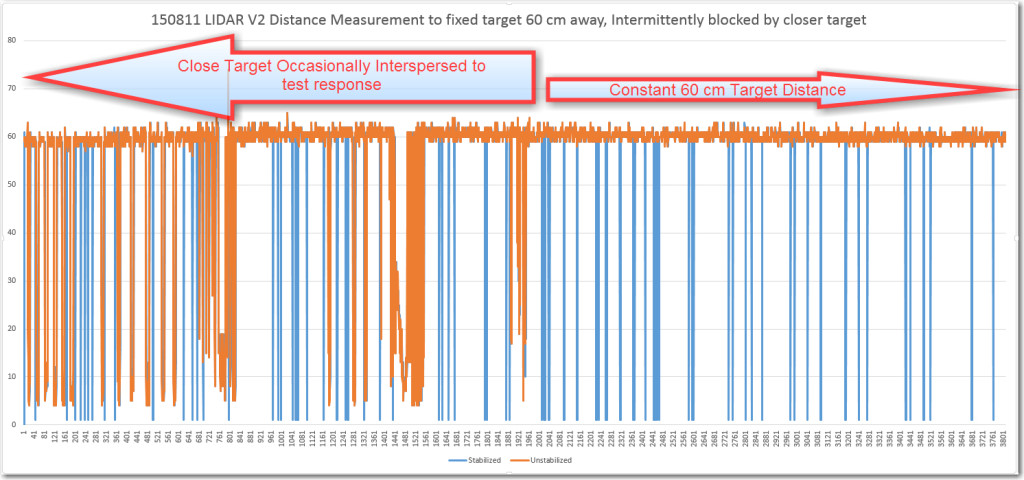

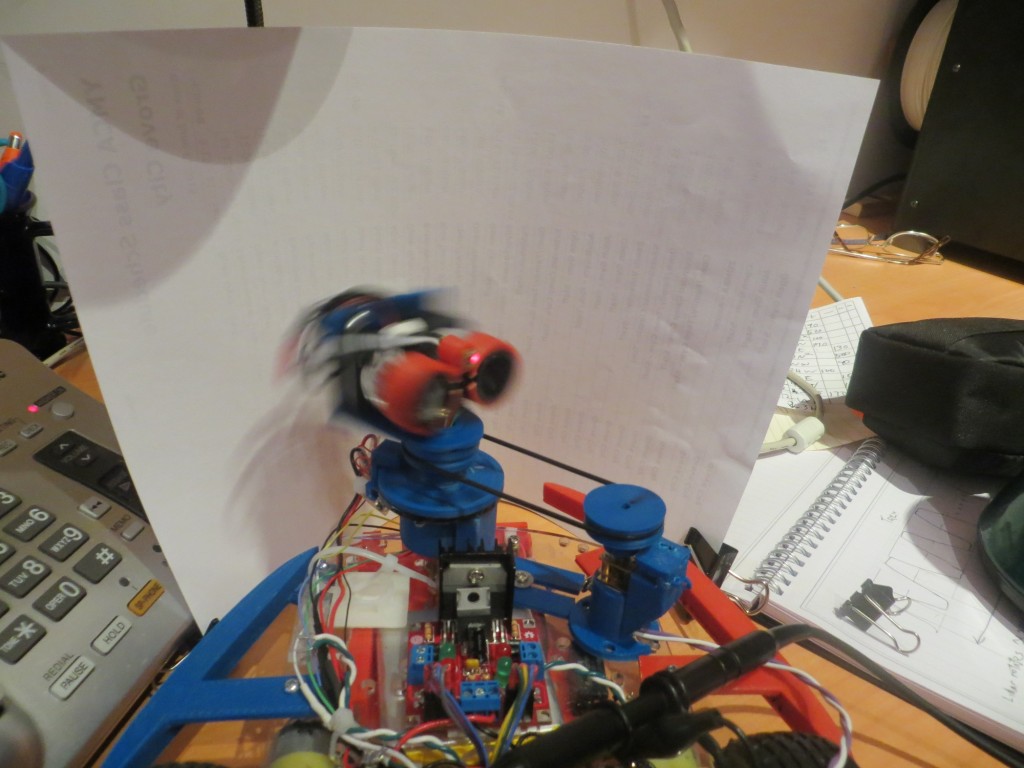

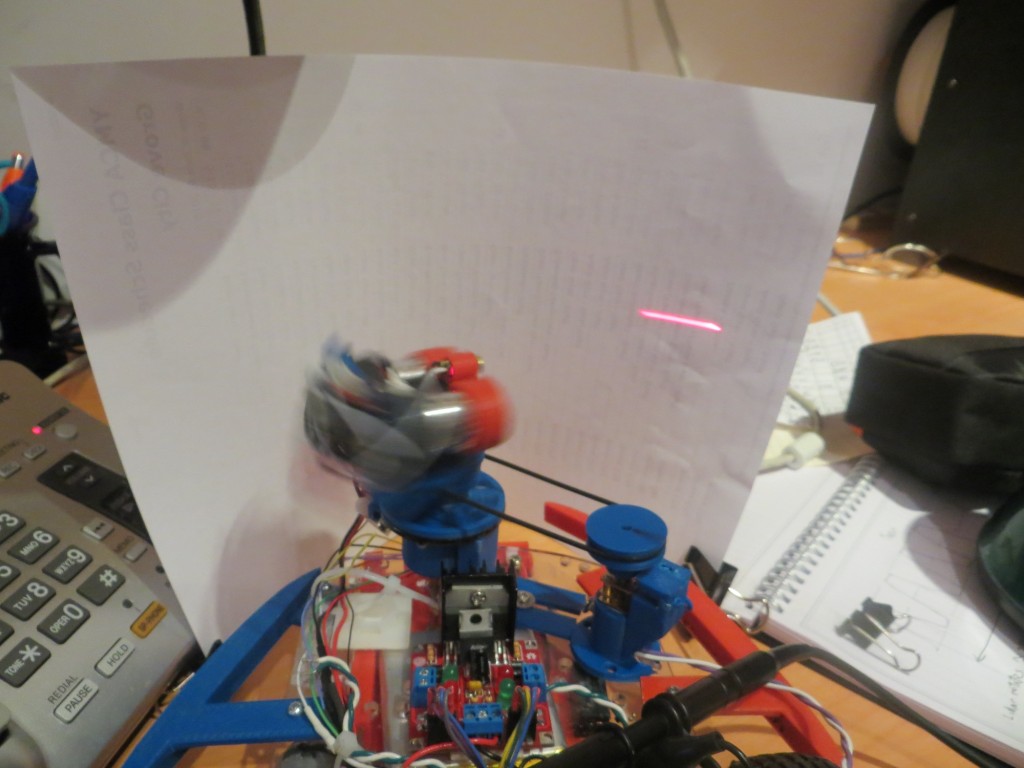

The ‘Distance As Fast as Possible’ sketch starts by configuring the LIDAR for a lower-than-default acquisition count to speed up the measurement cycle, and increasing the I2C speed to 400 kHz. Then, in the main loop, it takes one ‘stabilized’ measurement, followed by 100 ‘unstabilized’ measurements. The idea is that re-stabilization (re-referencing?) isn’t required for every measurement in typical measurement scenarios, so why pay the extra cost in measurement time? This is certainly true for my wall-following robot application, where typical measurement distances are less than 200 cm and target surfaces are typically white-painted sheet-rock walls.

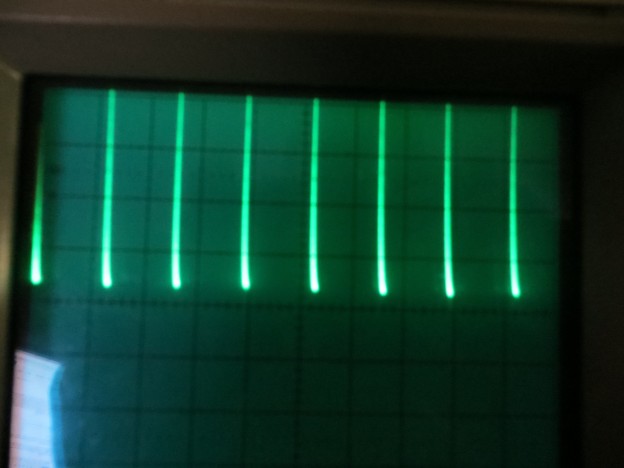

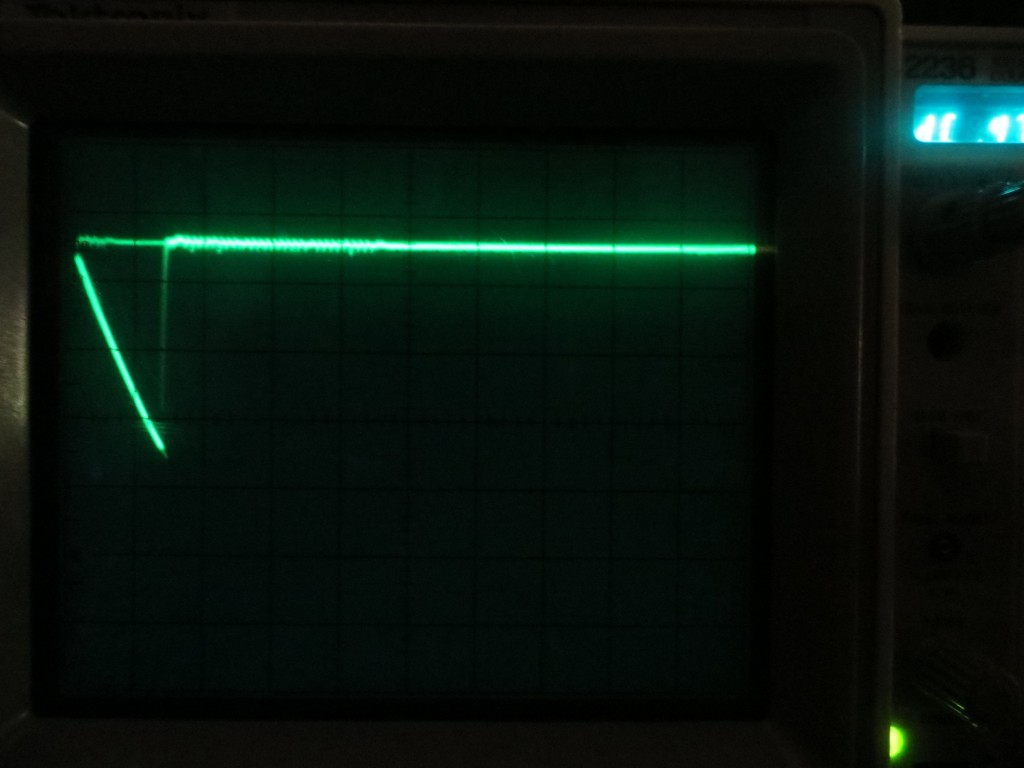

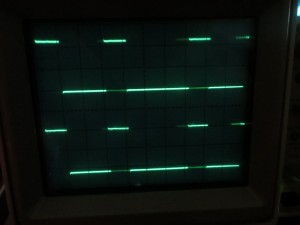

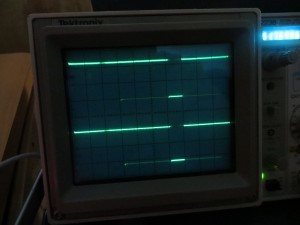

To get an accurate measurement cycle time, I instrumented the example code with digitalWrite() calls to toggle Arduino Uno pin 12 at appropriate spots in the code. In the main loop() section the pin goes HIGH just before the single ‘stabilized’ measurement, and LOW immediately thereafter. Then (after an intermediate Serial.print() statement) it goes HIGH again immediately before the start of the 100-count ‘unstabilized’ measurement loop, and then LOW after all 100 measurements complete. After another Serial.print() statement the loop() section repeats.

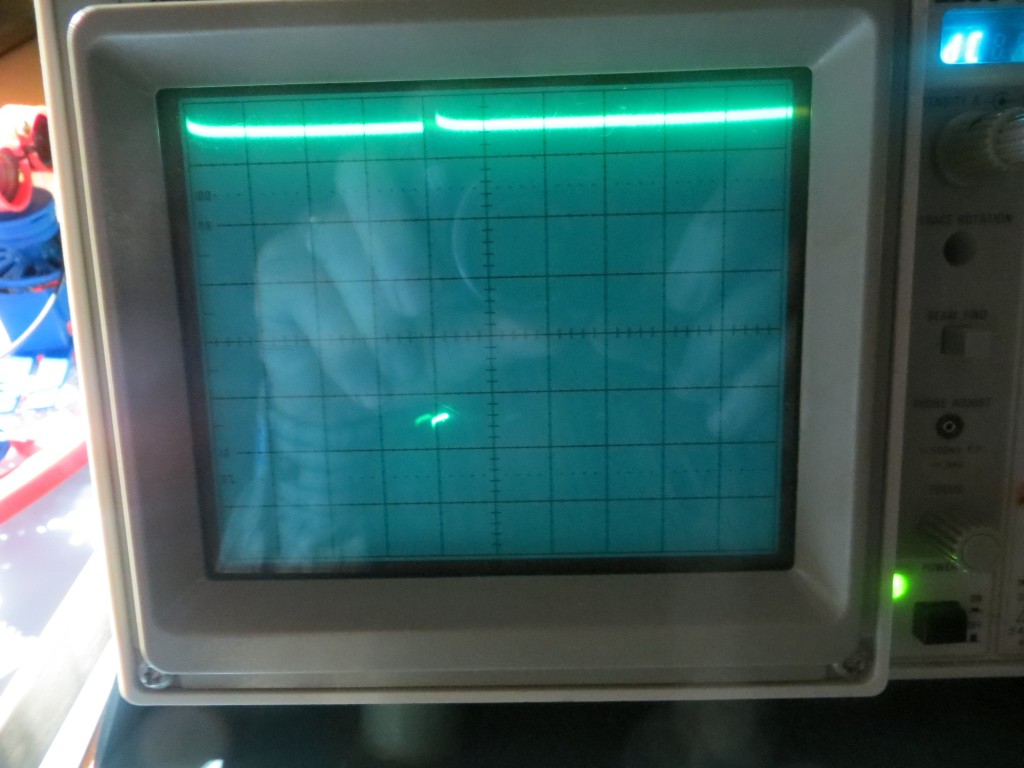

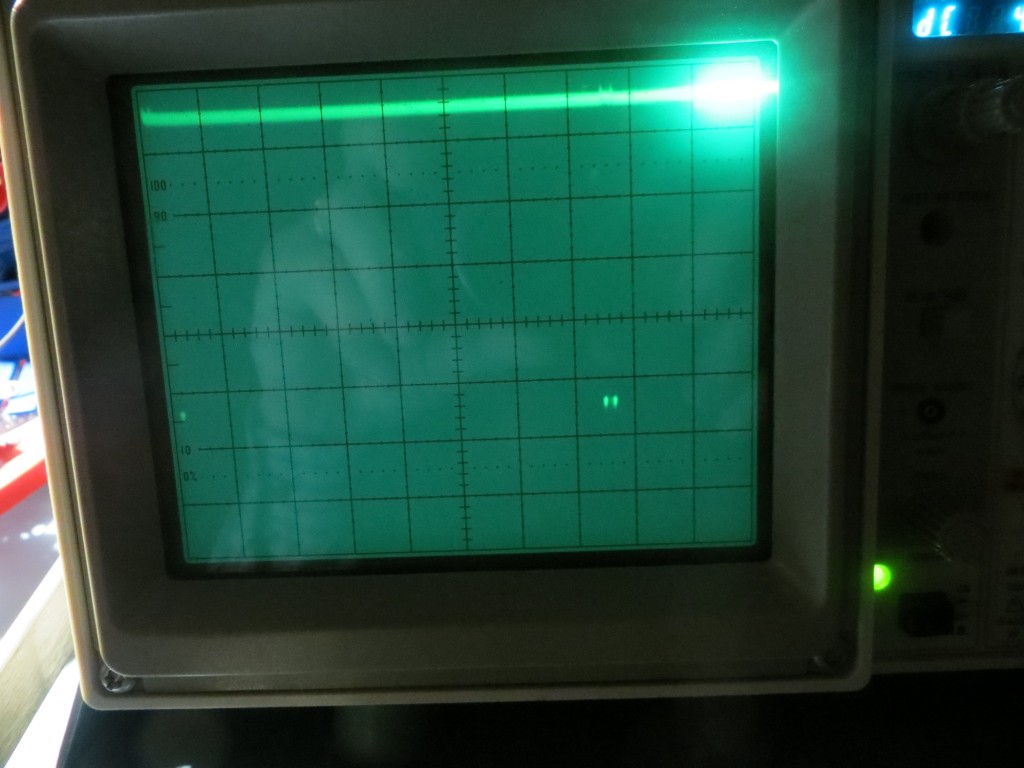

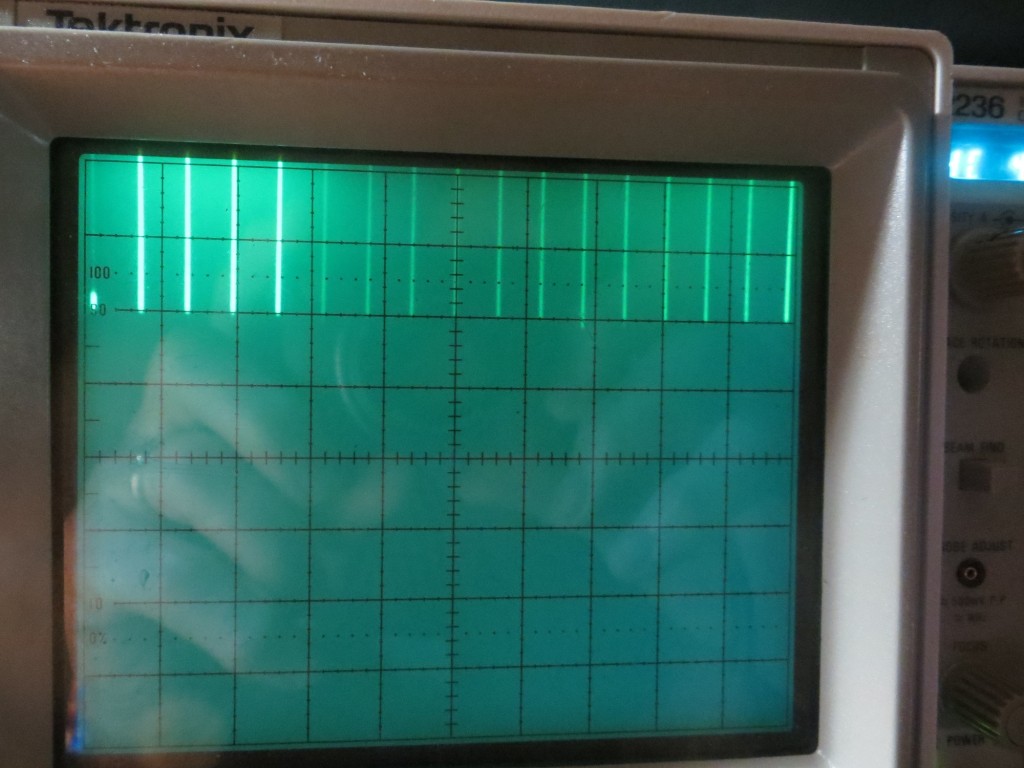

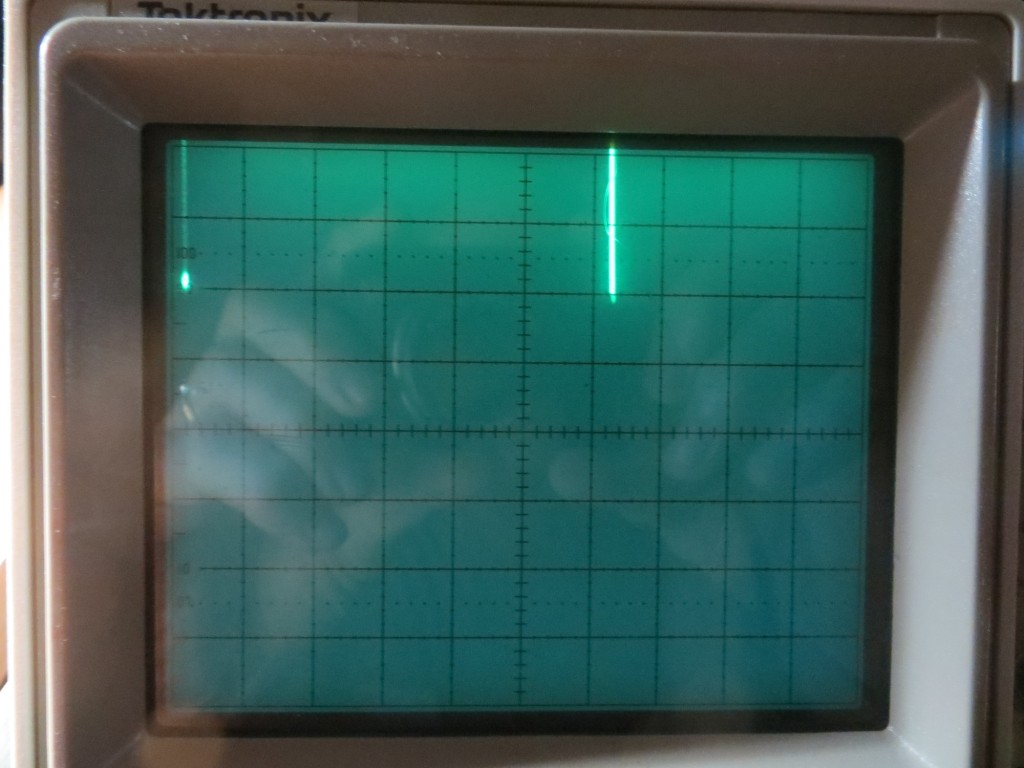

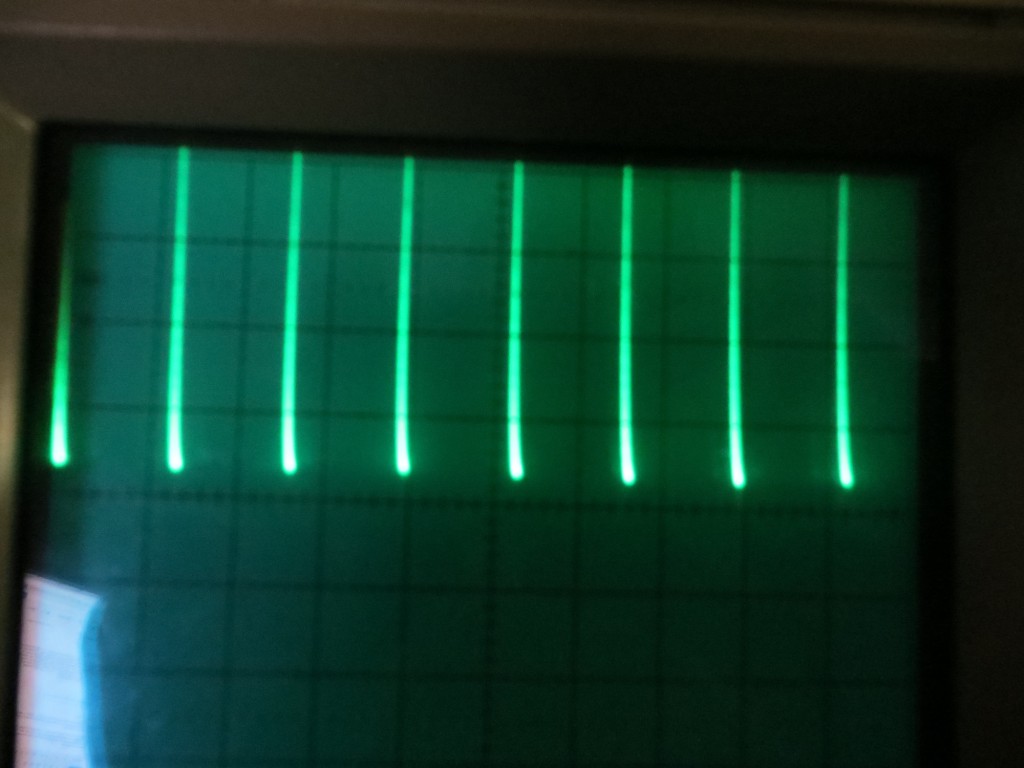

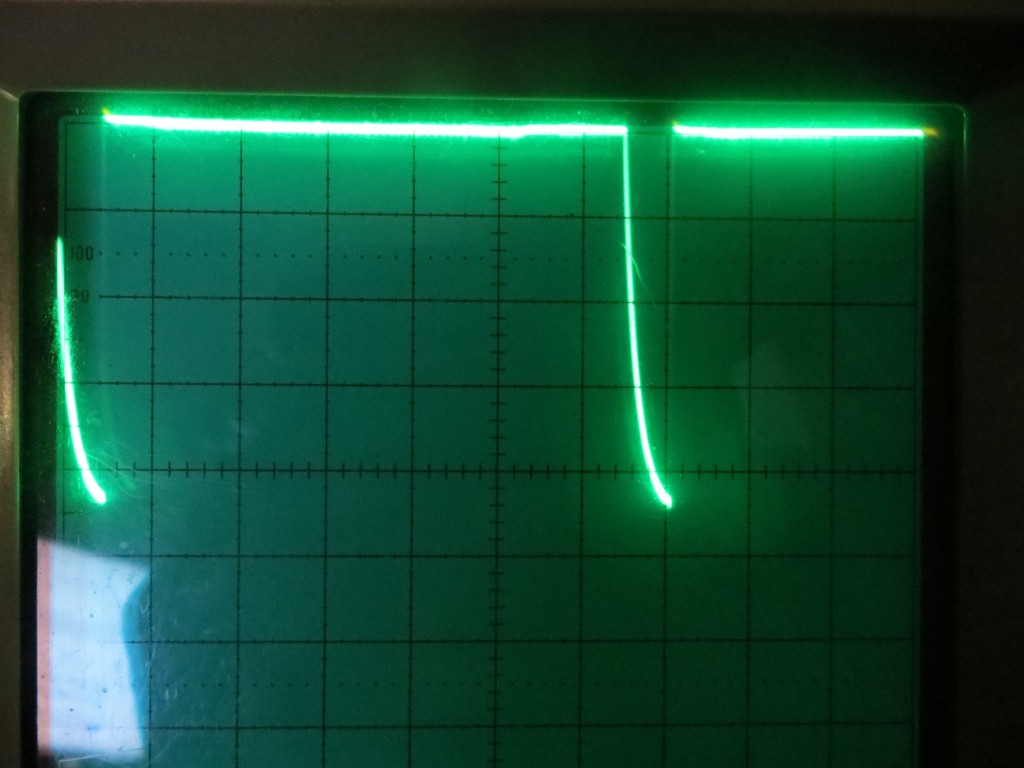

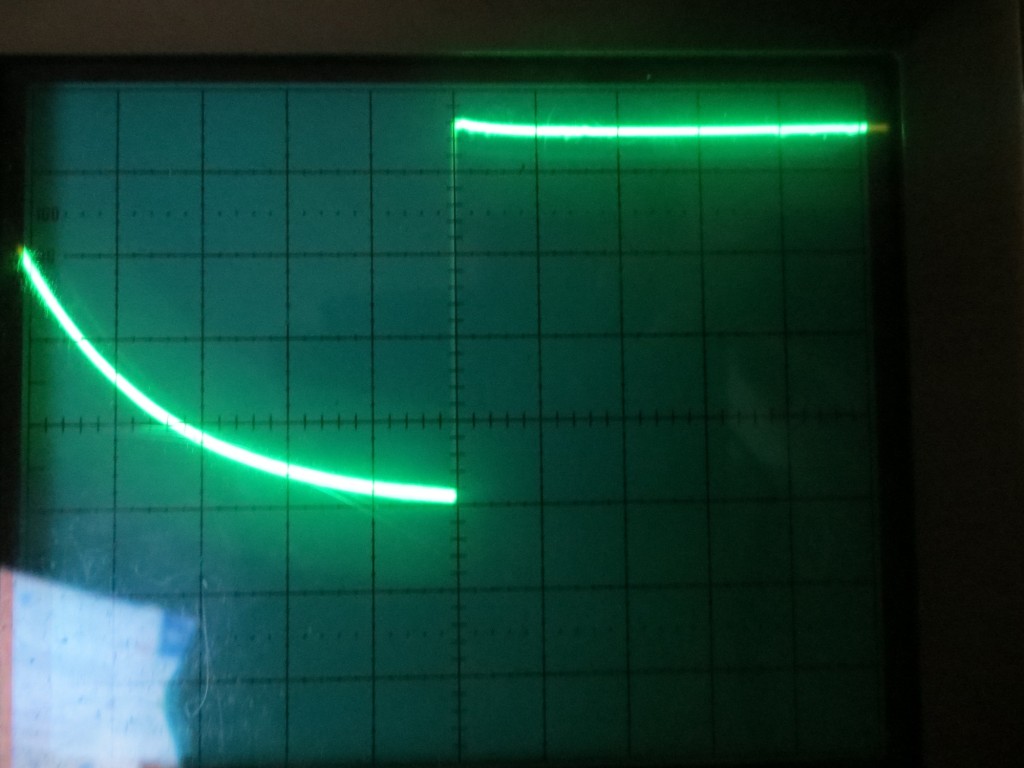

The following O’scope screenshots show the results. The first one shows the single ‘stabilized’ measurement time, with the scope set for 0.2 msec/div. From the photo, it appears this measurement completes in about 0.8 msec – WOW!!! The second one shows the time required for 100 ‘unstabilized’ measurements, with the scope set for 20 msec/div. From this it appears that 100 measurements take about 140 msec, or about 1.4 msec per measurement – WOW WOW!!
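As a quick sanity check on that arithmetic, here is a minimal Python sketch. The function name is made up for illustration, and the inputs are just the approximate values read off the scope (0.8 msec for one measurement, 140 msec for a burst of 100), so treat the results as rough.

```python
def per_measurement_ms(burst_ms: float, count: int) -> float:
    """Average time per measurement for a burst of `count` measurements."""
    if count <= 0:
        raise ValueError("count must be positive")
    return burst_ms / count


# Approximate scope readings from this post (eyeballed, not exact).
stabilized_ms = per_measurement_ms(0.8, 1)        # single 'stabilized' measurement
unstabilized_ms = per_measurement_ms(140.0, 100)  # burst of 100 'unstabilized' ones

print(f"stabilized:   {stabilized_ms:.2f} msec per measurement")
print(f"unstabilized: {unstabilized_ms:.2f} msec per measurement")
print(f"unstabilized / stabilized: {unstabilized_ms / stabilized_ms:.2f}")
```

Note that the 100-count burst also includes whatever else runs inside that loop between the pin going HIGH and LOW, so the per-measurement figure is an upper bound on the LIDAR read time itself.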

Hmm, from the comments in the code, the ‘stabilized’ measurements are supposed to take longer than the ‘unstabilized’ ones – but the scope measurements indicate the opposite – wonder what I’m getting wrong :-(.

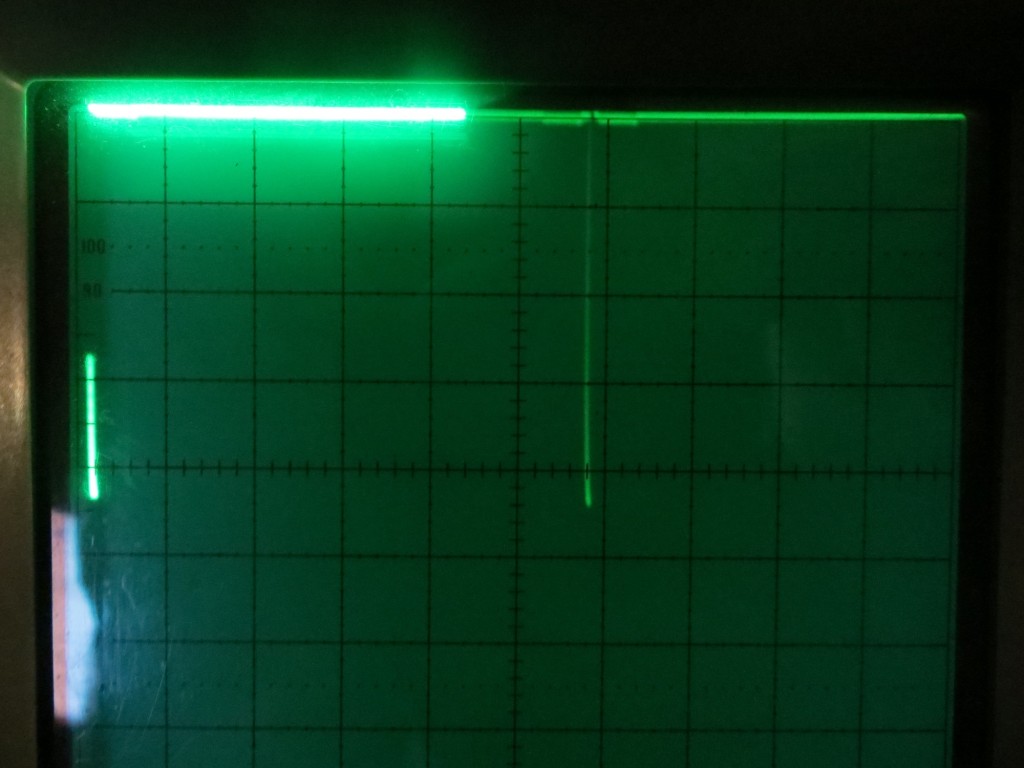

I left the LIDAR and Arduino system running for most of a day while I played a duplicate bridge session and did some other errands. When I got back after about 6 hours, the system was still running and was still responsive when I waved my hand over the optics, but the timing had changed considerably. Instead of 0.8 msec for the single ‘stabilized’ measurement I was now seeing times in the 3-6 msec range. For the 100 ‘unstabilized’ measurements, I was now seeing around 325 msec or about 3.2 msec per measurement. Something had definitely changed, but I have no idea what. A software restart fixed the problem, and now I’m again looking at 0.8 msec for one ‘stabilized’ measurement, and 150 msec for 100 ‘unstabilized’ ones.

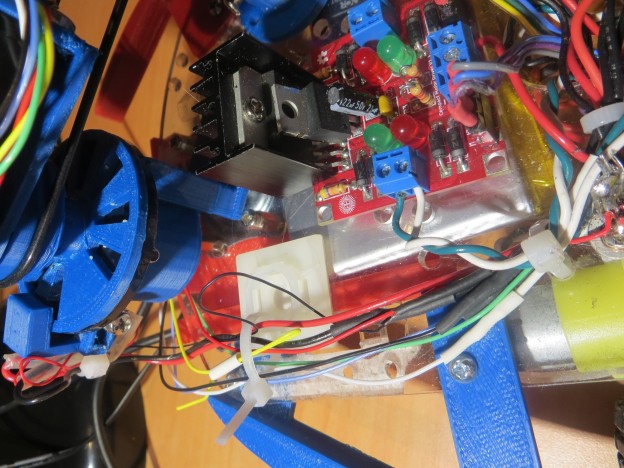

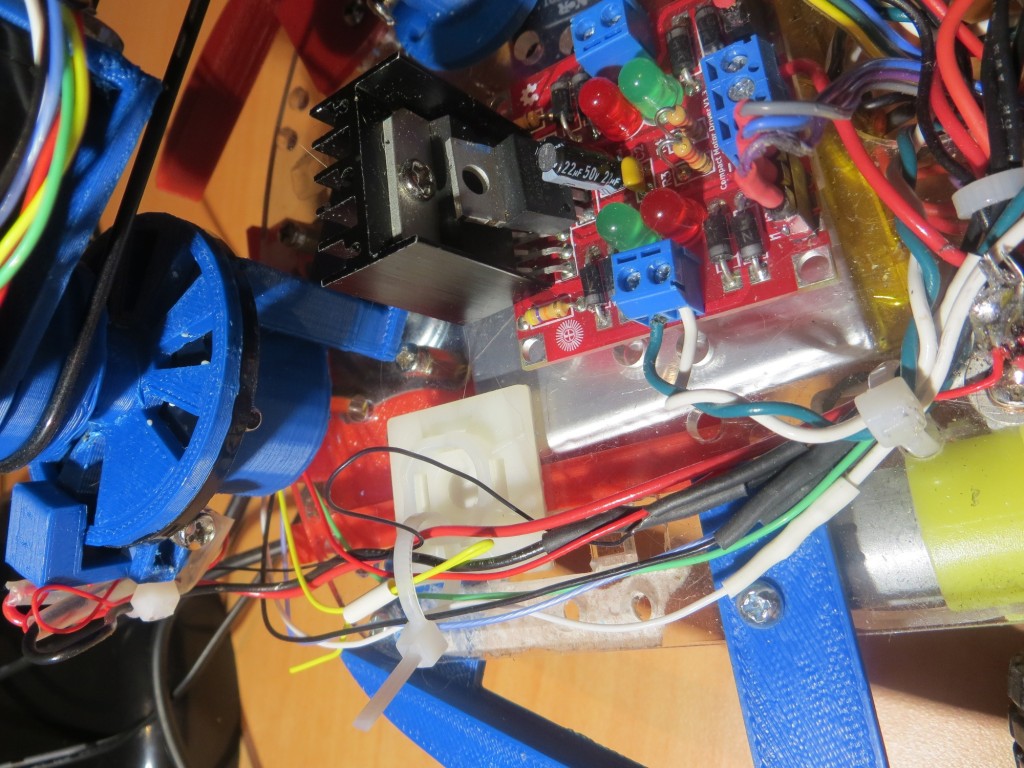

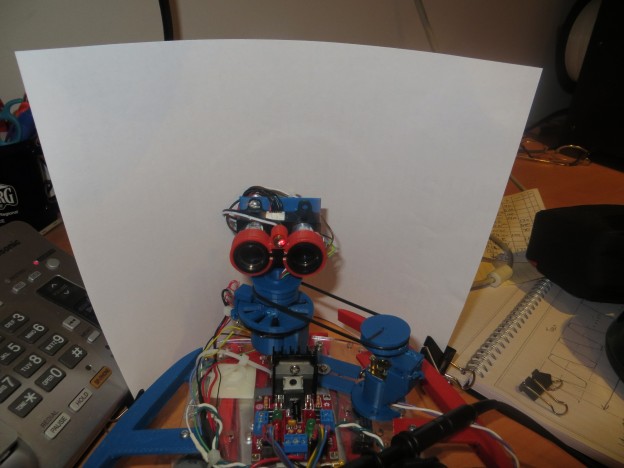

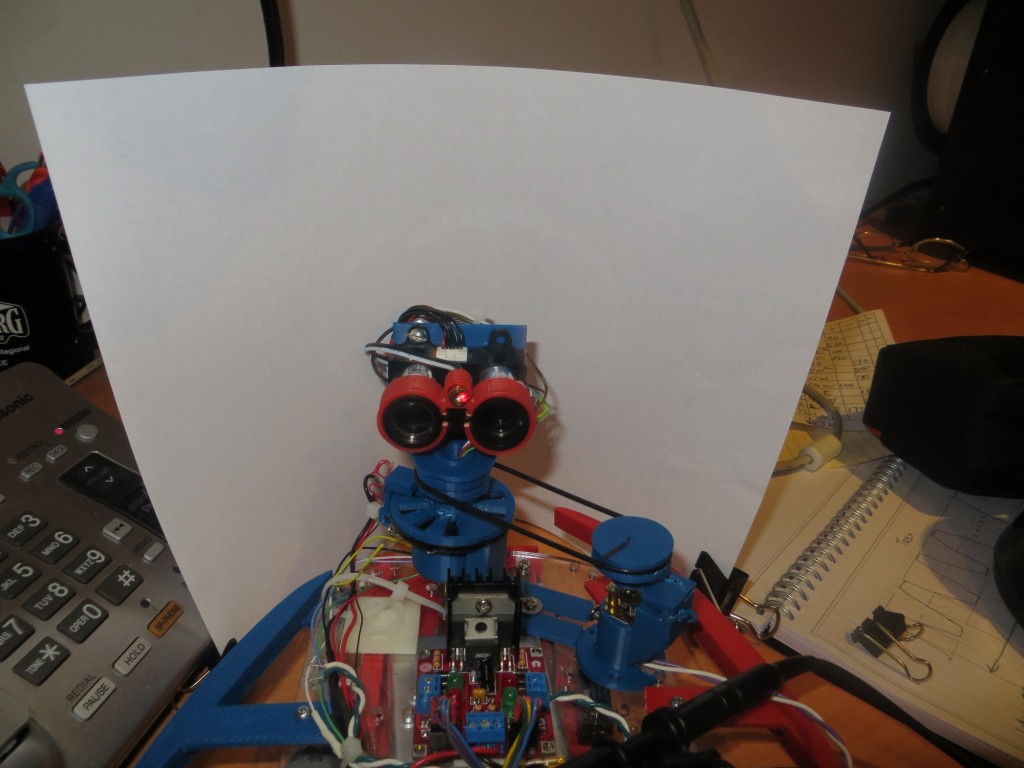

So, the good news is, the new V2 ‘Blue Label’ LIDAR is blindingly fast – I mean REALLY REALLY FAST (like ‘Ludicrous Speed’ in the Spaceballs movie). The bad news is, it still seems to slow down A LOT after a while (where ‘while’ seems to be on the order of an hour or so). However, even at its ‘slow’ speed it is pretty damned fast, and still way faster than I need for my spinning LIDAR project. With this setup I should be able to change from a 10-tooth to at least a 12-tooth (or even a 24-tooth if the photo-sensor setup is sensitive enough) and still keep the 120 rpm motor speed.
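To put rough numbers on that claim, here is a small Python sketch of the time budget per tooth at 120 rpm. It assumes (my assumption, not something measured here) that the requirement is simply to complete at least one measurement in the interval between successive teeth; the function names are hypothetical, and the per-measurement times are the approximate ‘fast’ (1.4 msec) and ‘slow’ (325 msec / 100) figures from above.

```python
def ms_per_tooth(rpm: float, teeth: int) -> float:
    """Time between successive teeth passing the sensor, in msec."""
    ms_per_rev = 60_000.0 / rpm  # 120 rpm -> 500 msec per revolution
    return ms_per_rev / teeth


def measurements_per_tooth(rpm: float, teeth: int, per_measurement_ms: float) -> int:
    """How many whole measurements fit into one tooth interval."""
    return int(ms_per_tooth(rpm, teeth) // per_measurement_ms)


FAST_MS = 1.4   # approximate per-measurement time right after a restart
SLOW_MS = 3.25  # approximate per-measurement time after the slowdown

for teeth in (10, 12, 24):
    budget = ms_per_tooth(120, teeth)
    fast = measurements_per_tooth(120, teeth, FAST_MS)
    slow = measurements_per_tooth(120, teeth, SLOW_MS)
    print(f"{teeth:2d} teeth: {budget:5.1f} msec/tooth, "
          f"{fast} measurements fast, {slow} slow")
```

This ignores Serial.print() time and any other per-step processing, so the real margin will be smaller than these numbers suggest.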

Interestingly, I have seen this same sort of slowdown in my V1 (‘Silver Label’) LIDAR testing, so I’m beginning to wonder if the slowdown isn’t more a problem with the Arduino I2C hardware or library implementation. I can just barely spell ‘I2C’, much less have any familiarity with the hardware/software nuances, but the fact that a software reset restores the original timing strongly exonerates the LIDAR hardware (the LIDAR can’t know that I rebooted the software) and lends credence to the I2C library as the culprit.

Stay tuned,

Frank