Posted 12 August 2016

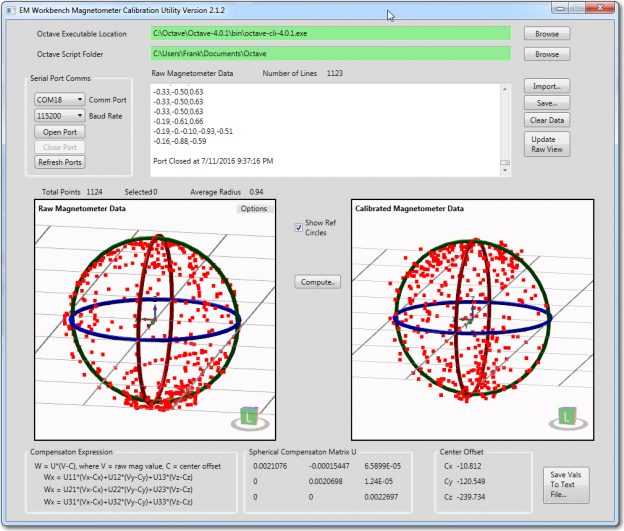

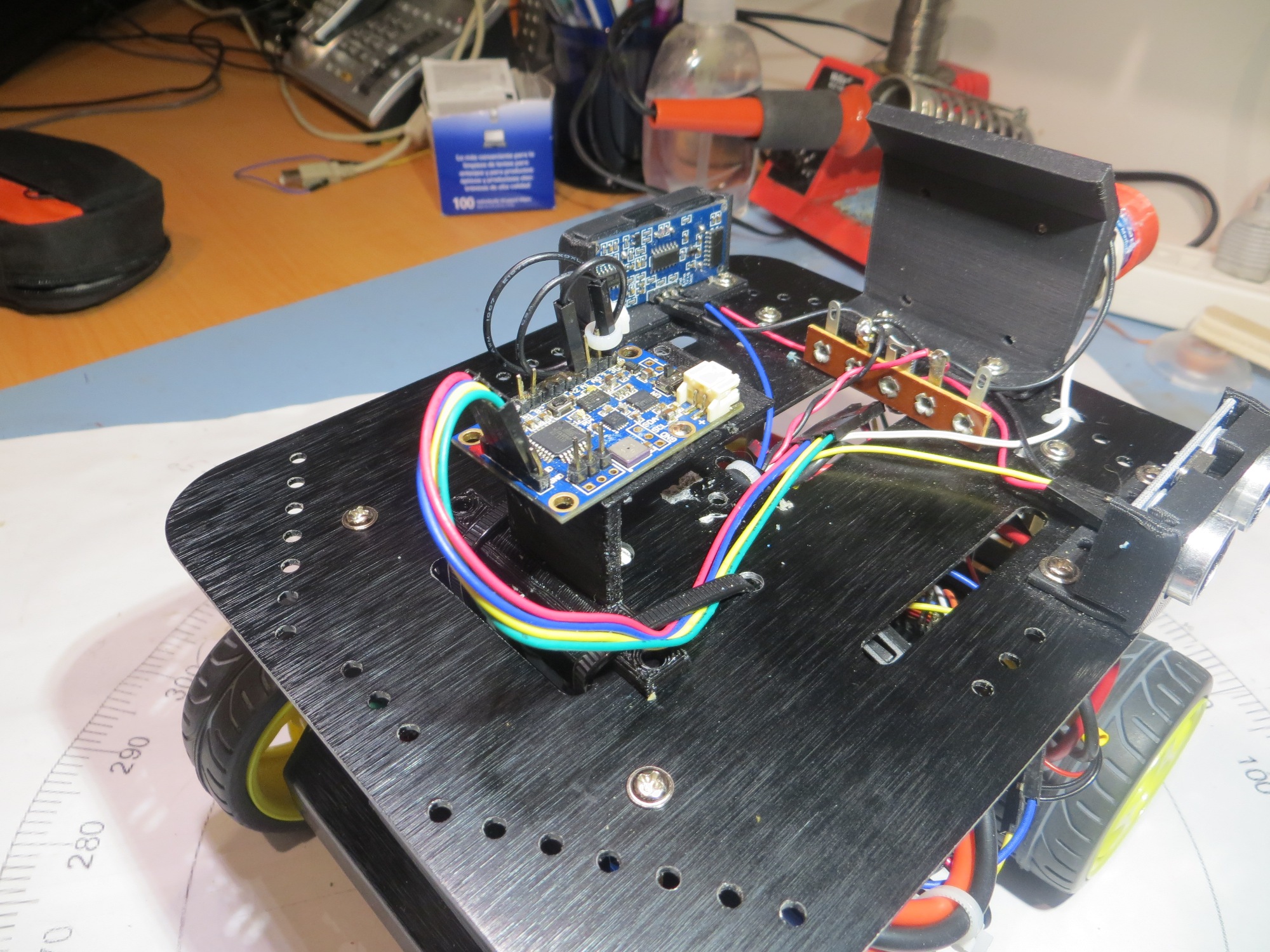

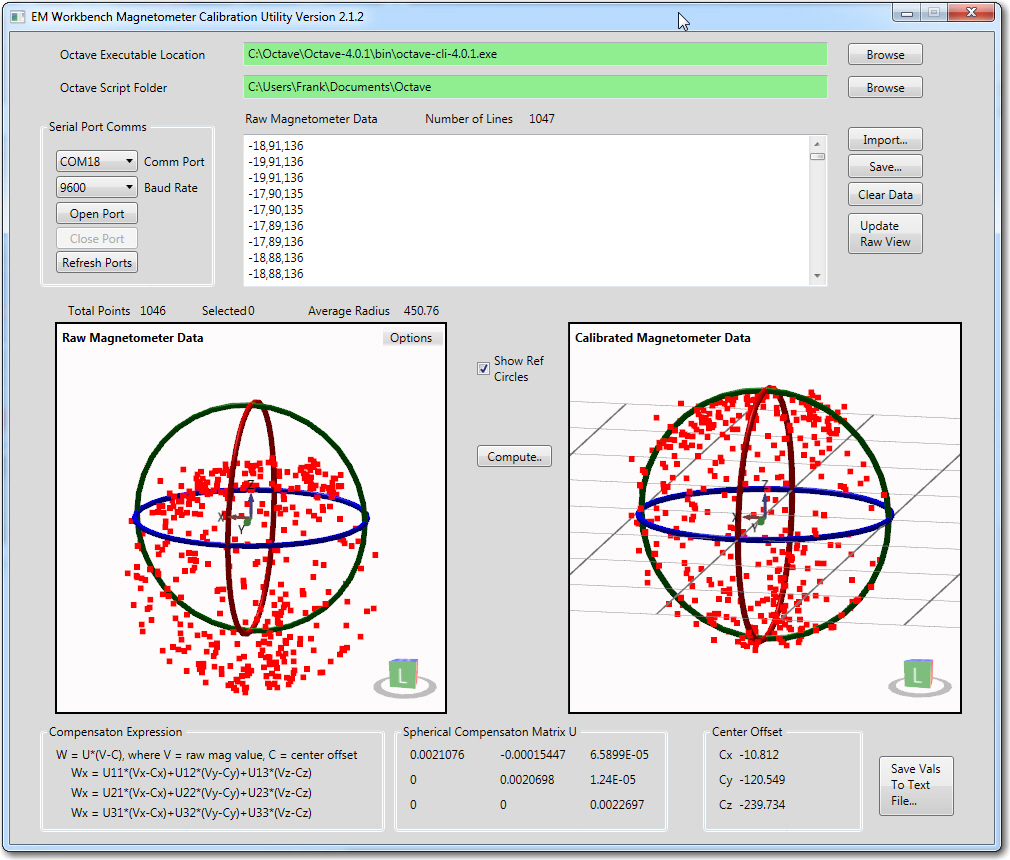

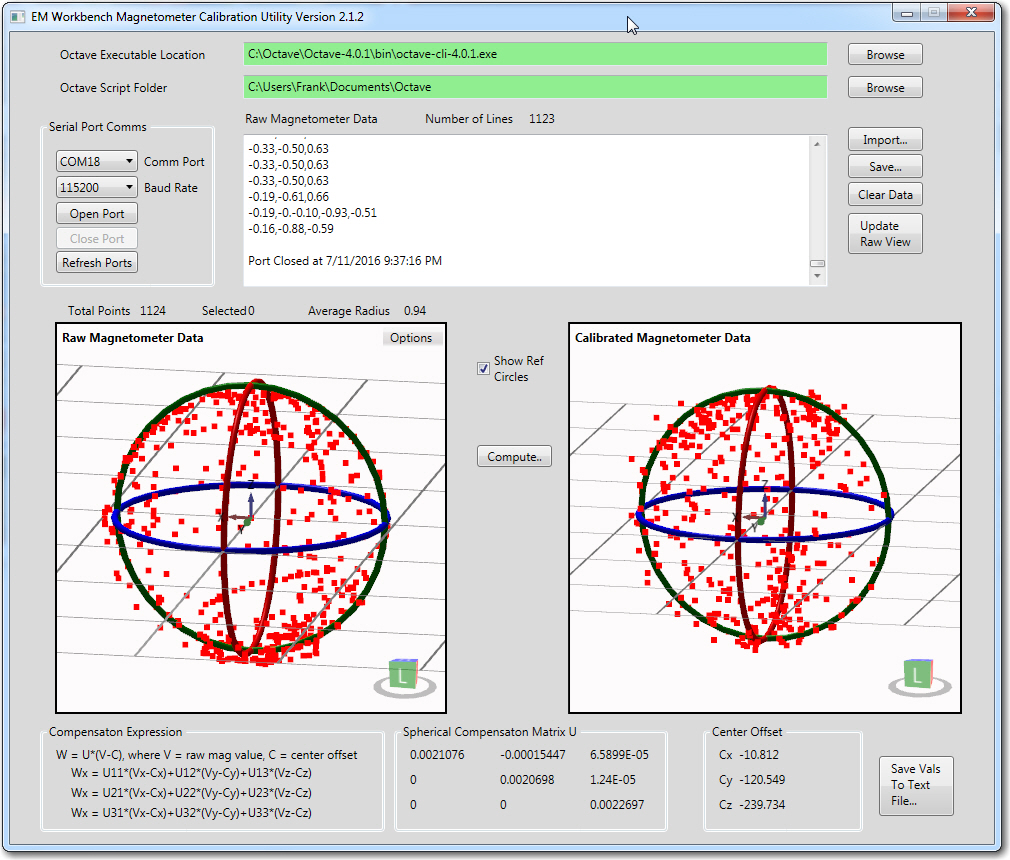

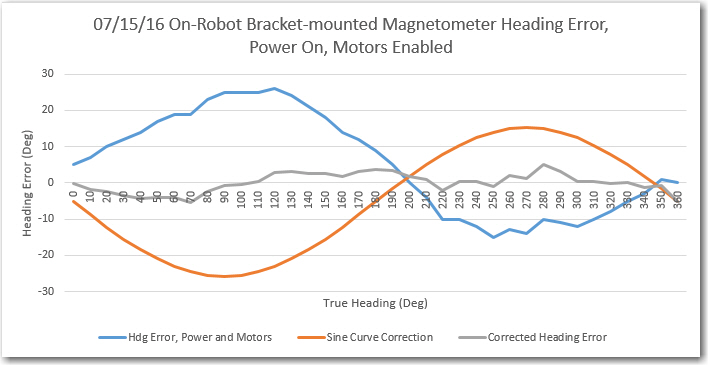

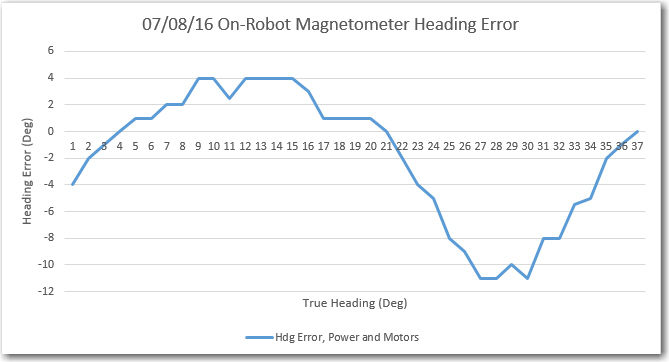

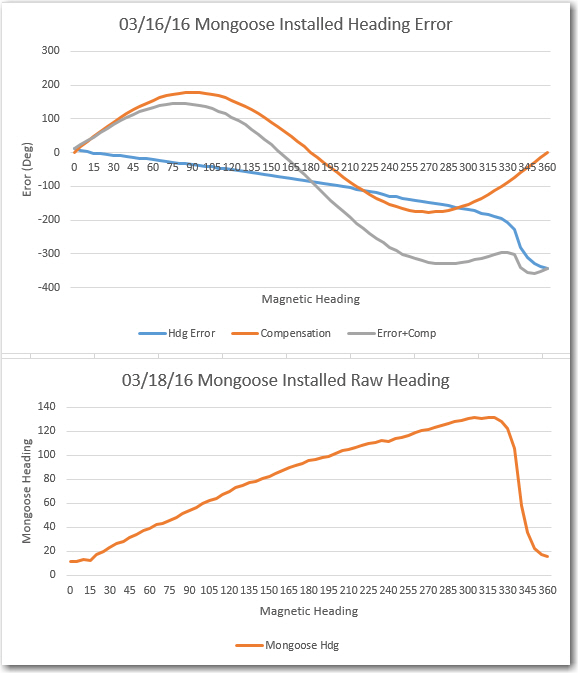

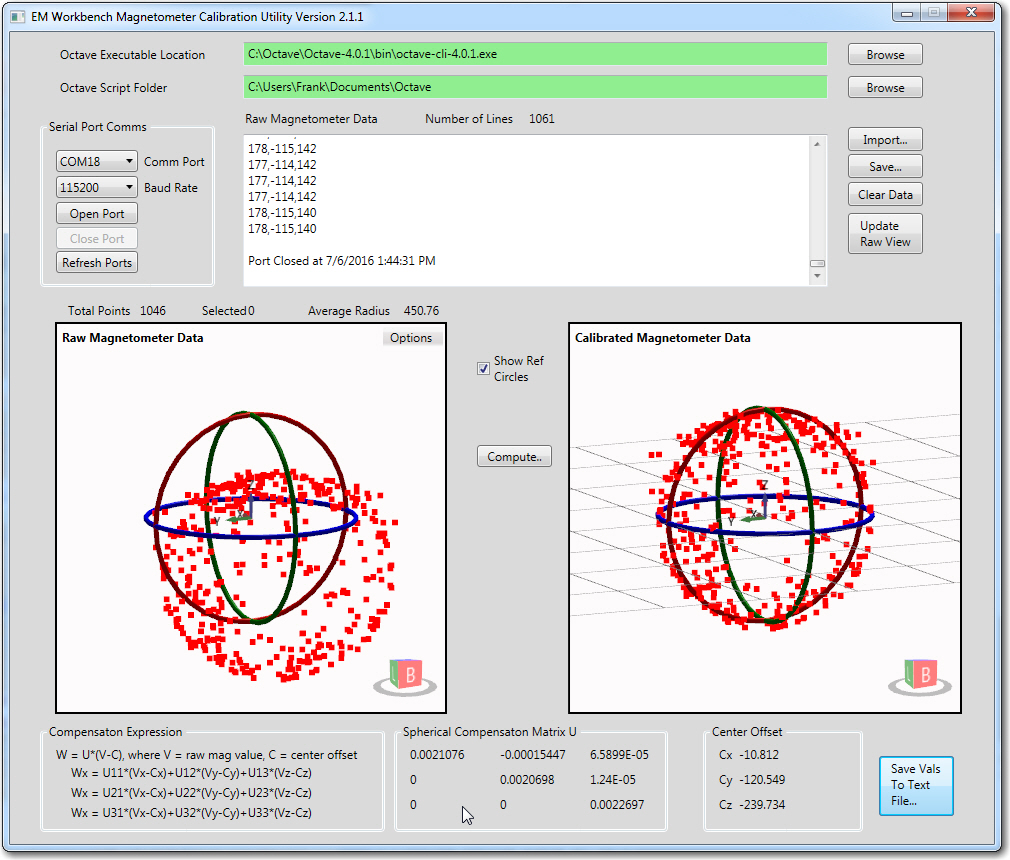

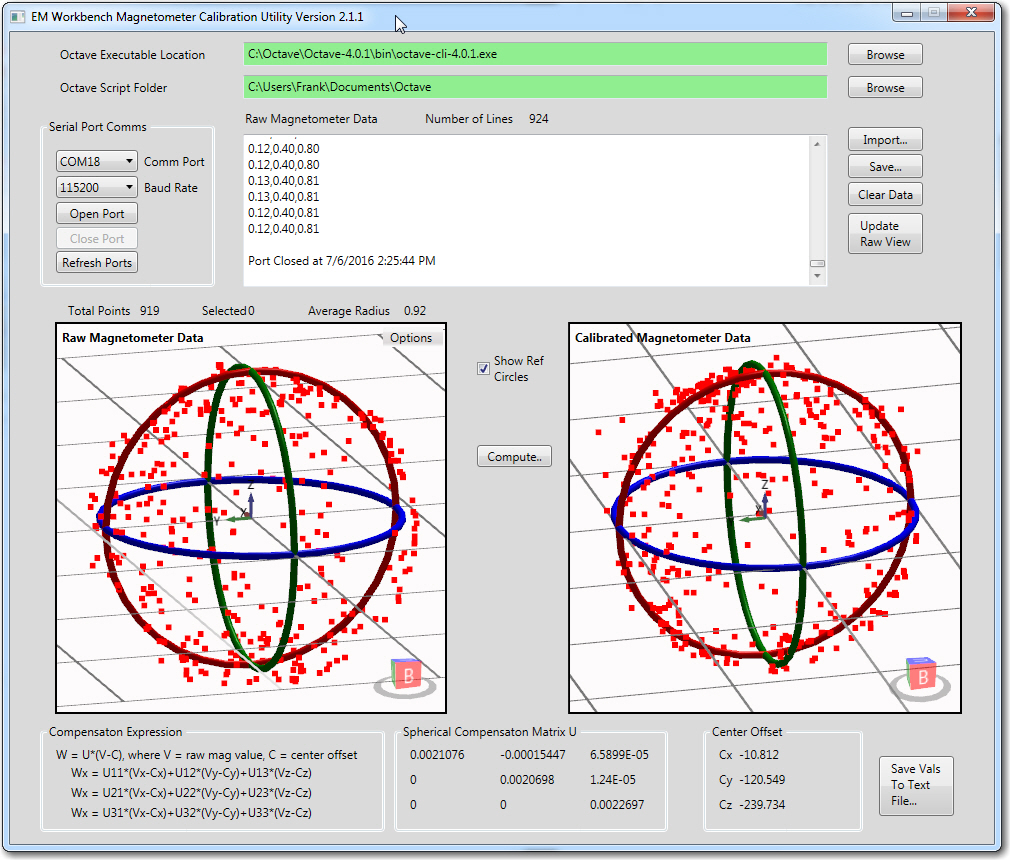

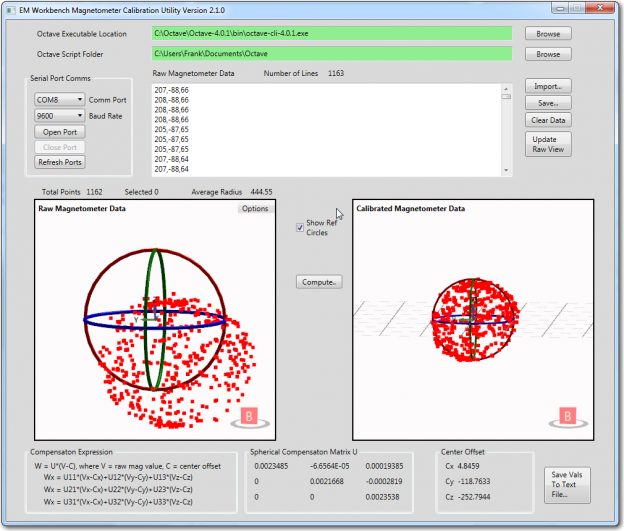

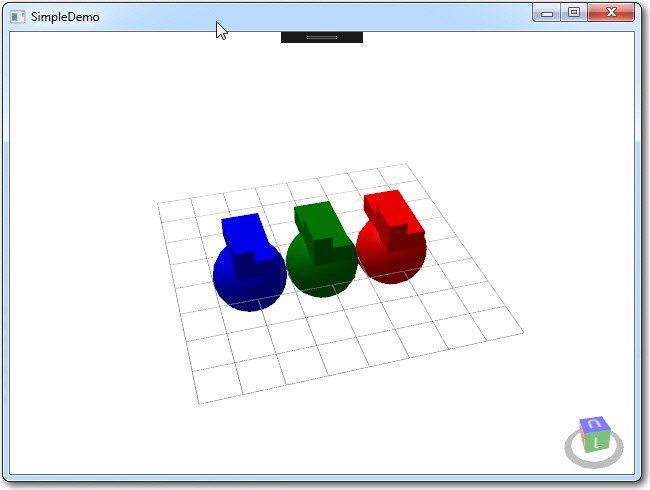

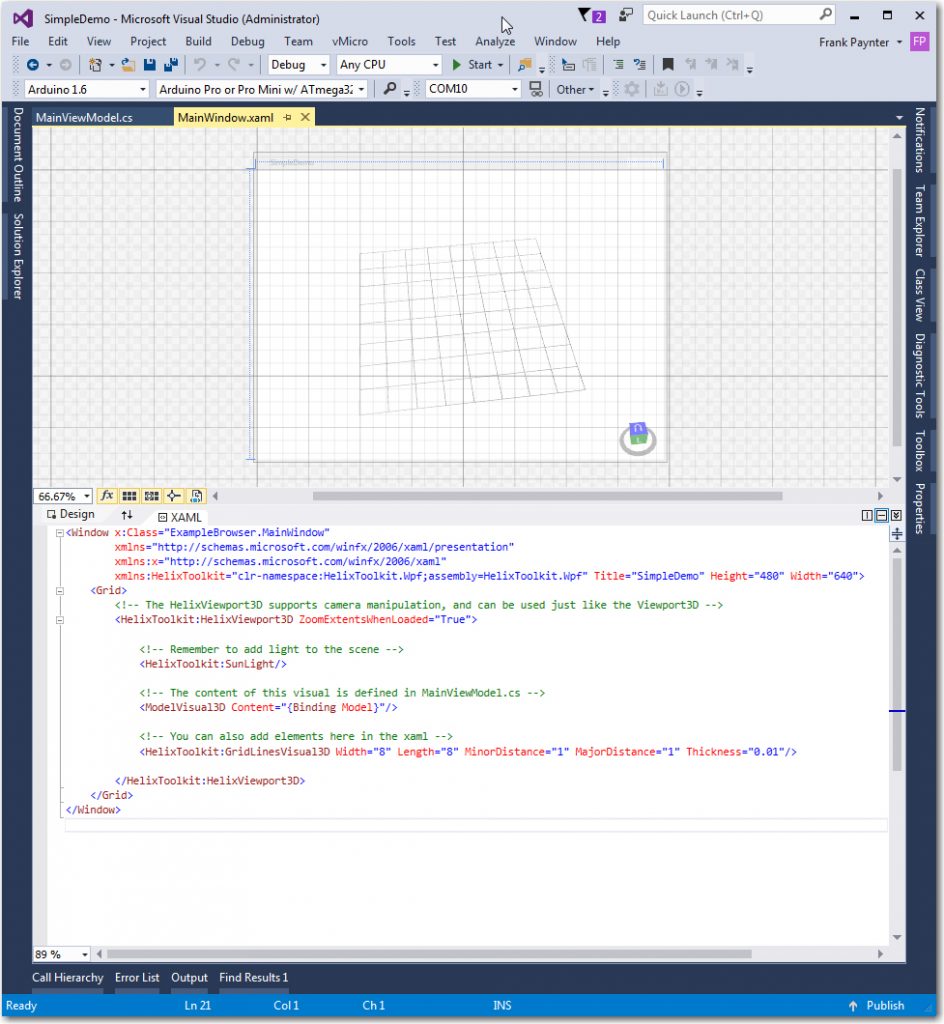

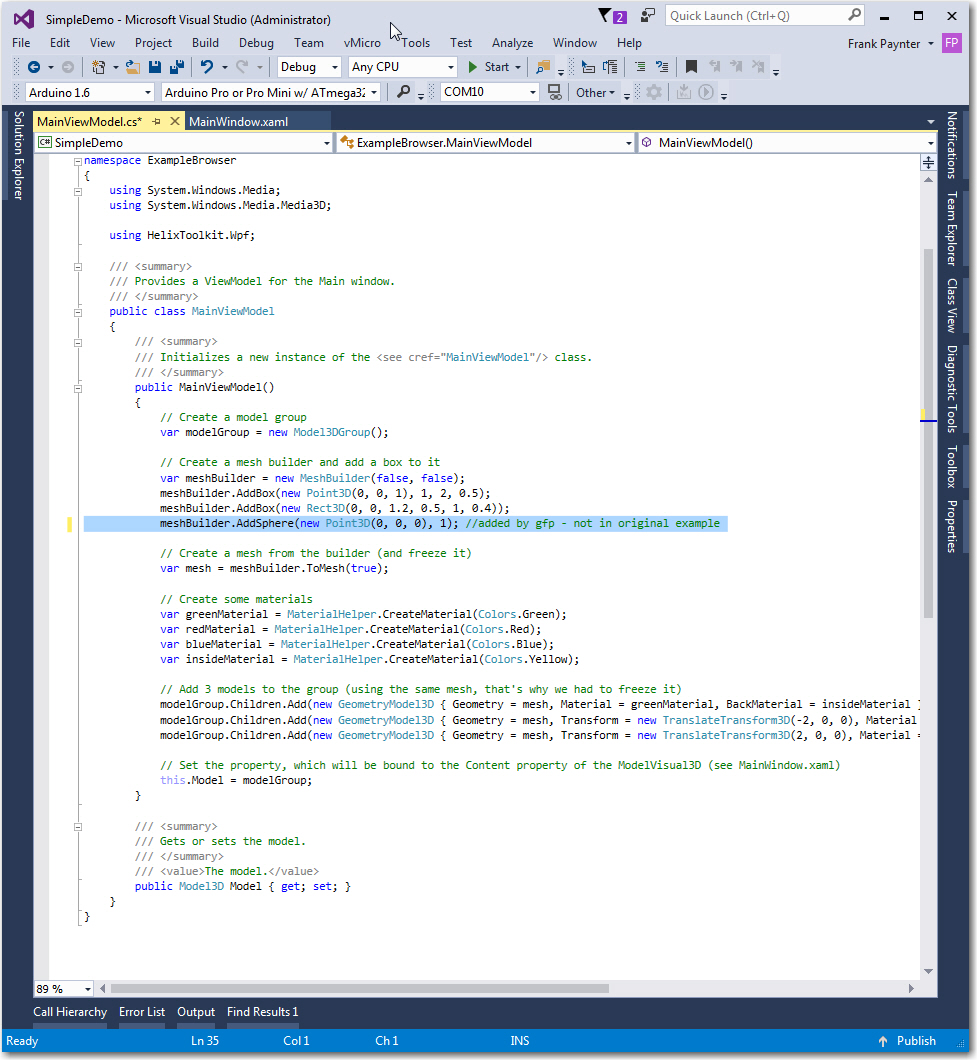

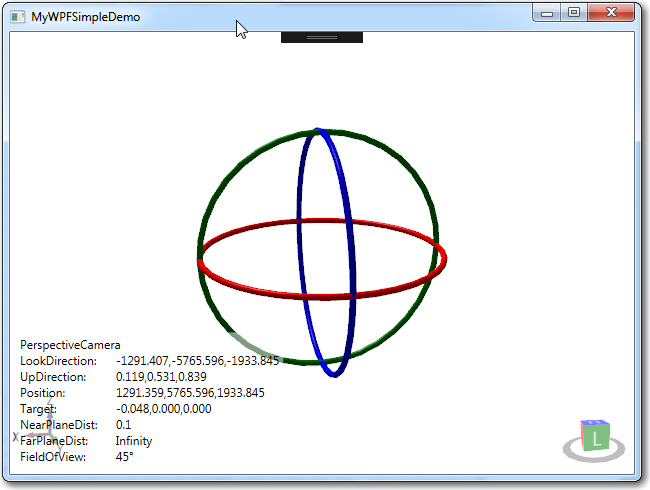

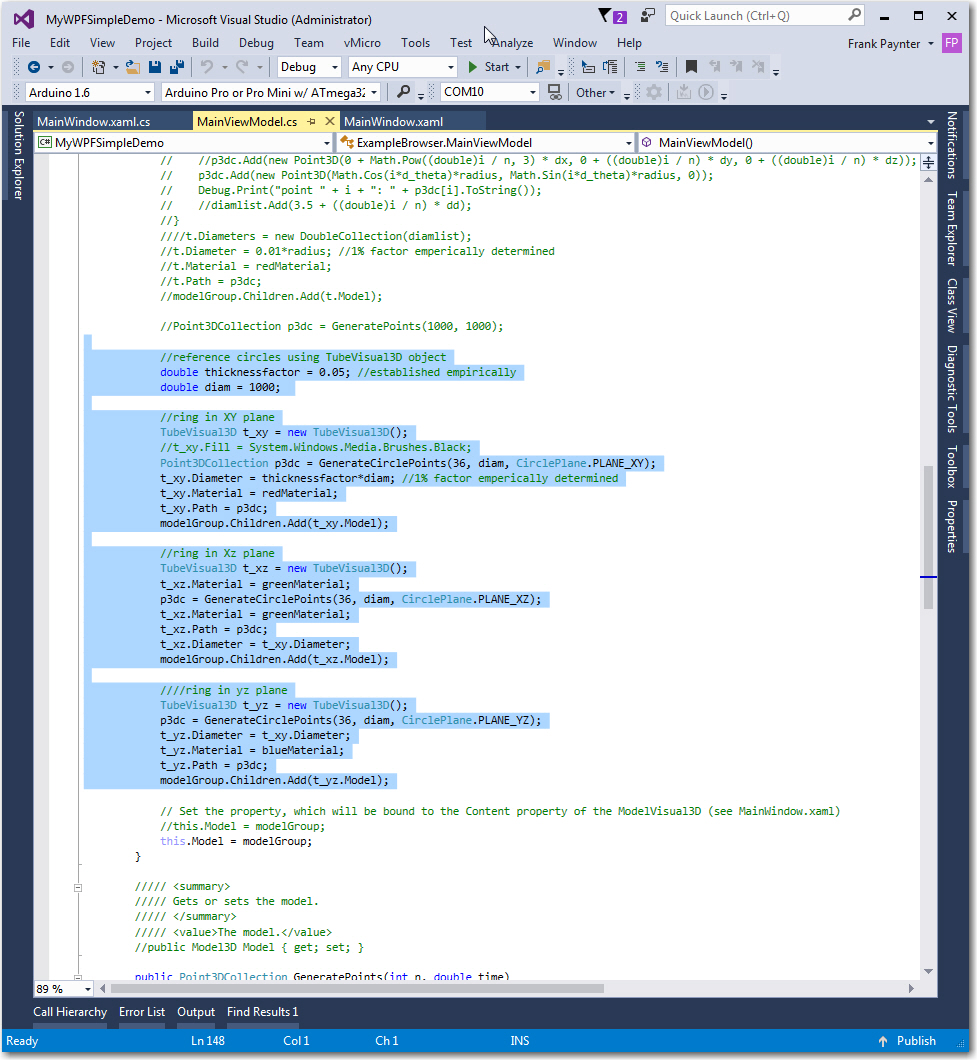

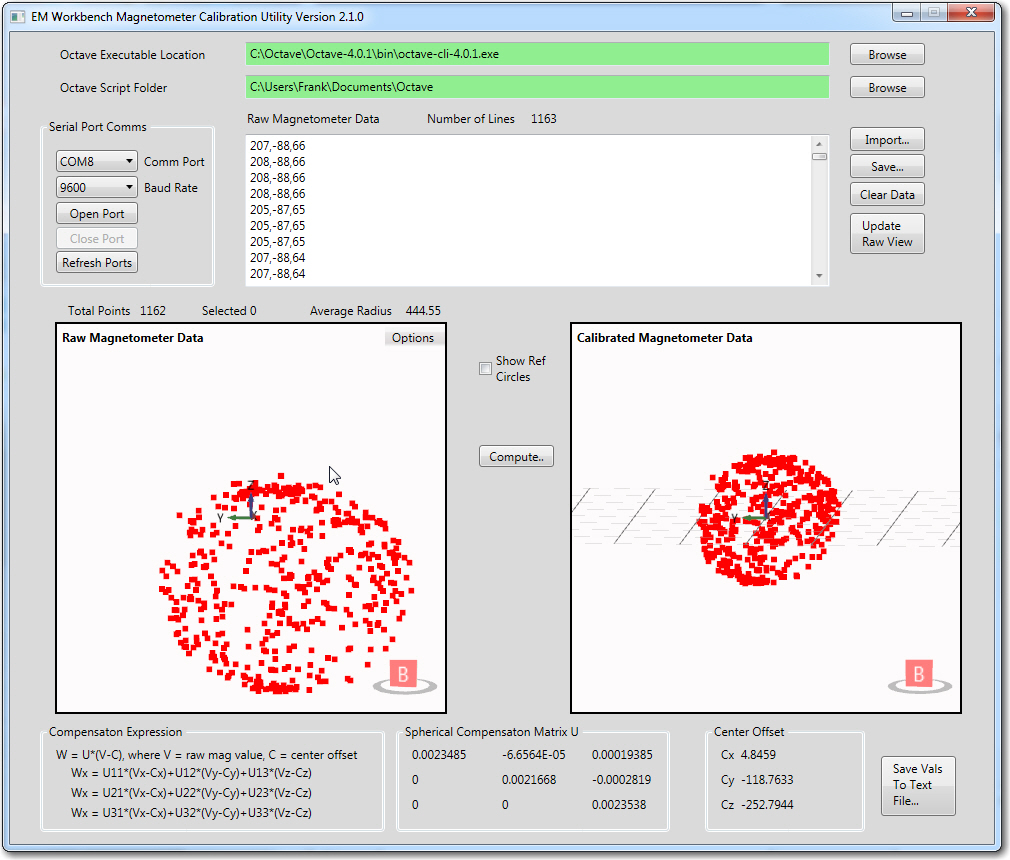

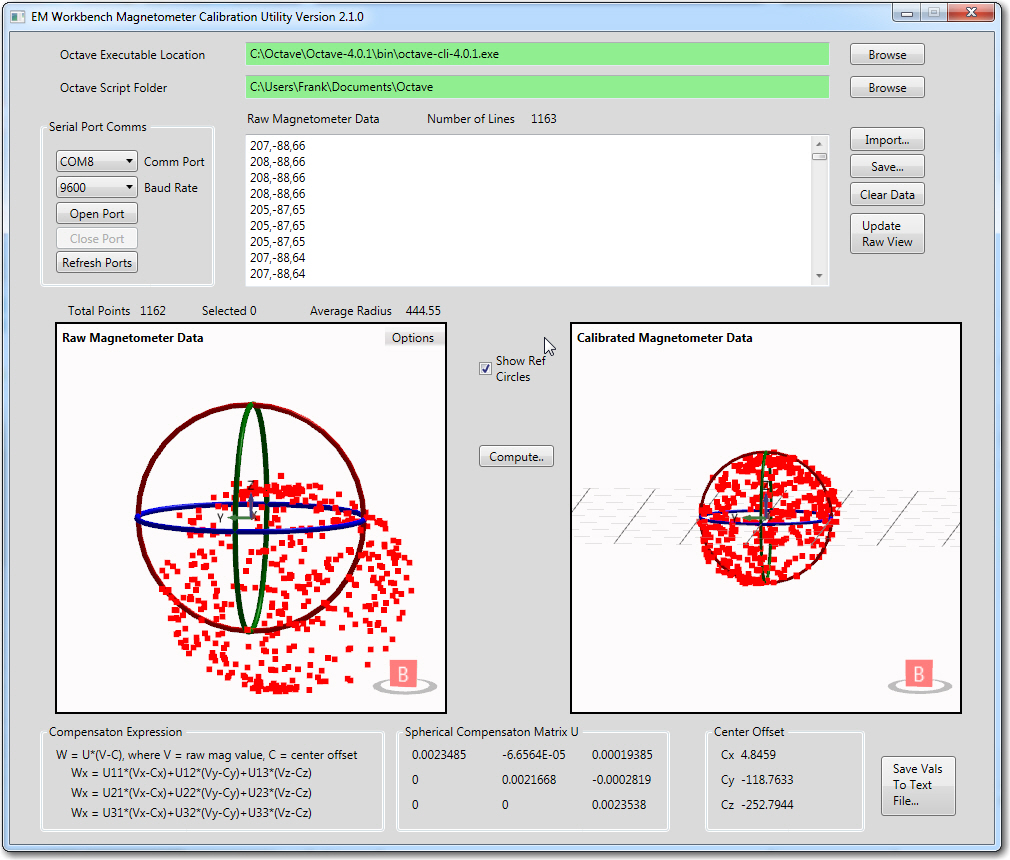

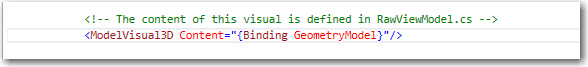

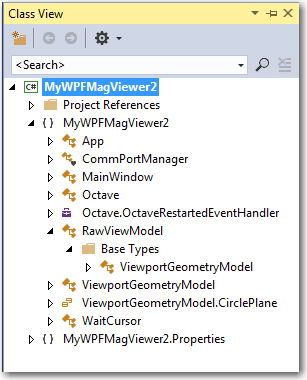

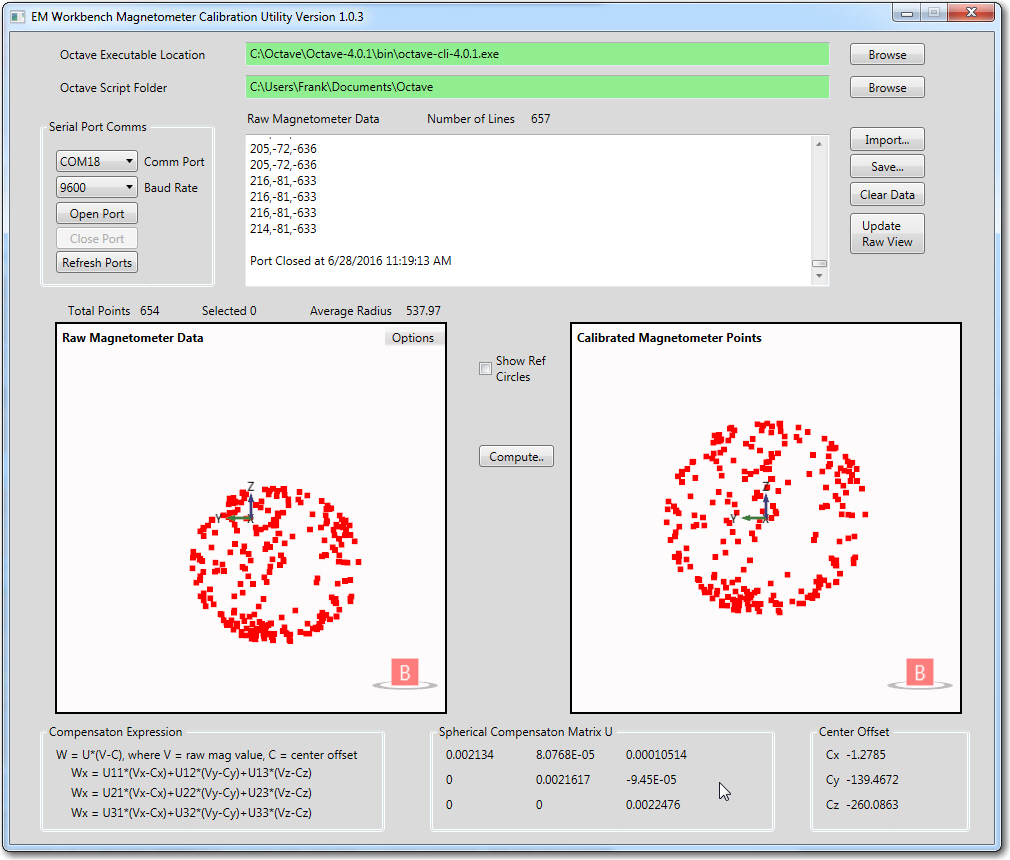

For the last several months (or was it years? It’s hard to tell anymore) I have been trying to implement a magnetic heading sensor for Wall-E2, my wall-following robot. What started out last March as “an easy mod” has turned into a Sisyphean ordeal: every time I think I have one problem figured out, another (bigger) one pops up to ruin my day. The first problem was to re-familiarize myself with the CK Devices ‘Mongoose’ IMU and get it installed on the robot. The next was to figure out why it didn’t work quite the way I thought it should, only to discover that sensitive magnetometers don’t really appreciate being installed millimeters away from DC motor magnets. Oops! That little problem led me into the world of in-situ magnetometer calibration, which resulted in my creating a complete 3D magnetometer calibration utility based on a MATLAB routine (the tool uses Windows WPF for 3D visualization and Octave for the MATLAB calculations; see this post). After getting the calibration tool squared away, I used it to calibrate the Mongoose unit (now relocated to Wall-E2’s top deck, well away from the motors), and once again I thought I was home free. Unfortunately, reality intruded again when my ‘field testing’ (in the entry hallway of my home) revealed places in the hallway where the magnetometer-based heading reports were wildly different from the robot’s actual physical orientation, as reported in my July post on this subject.

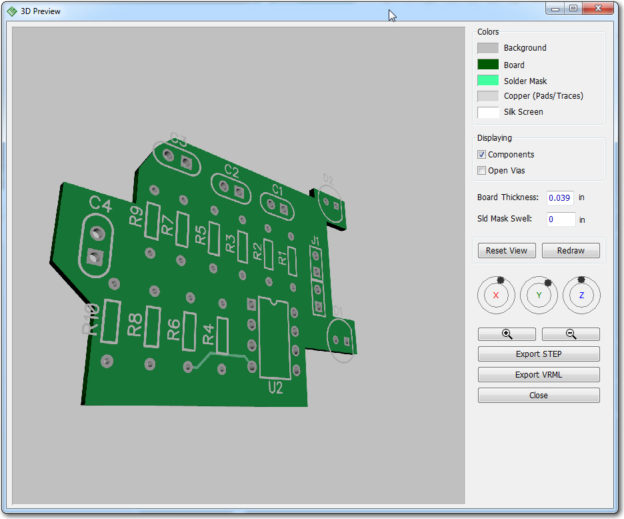

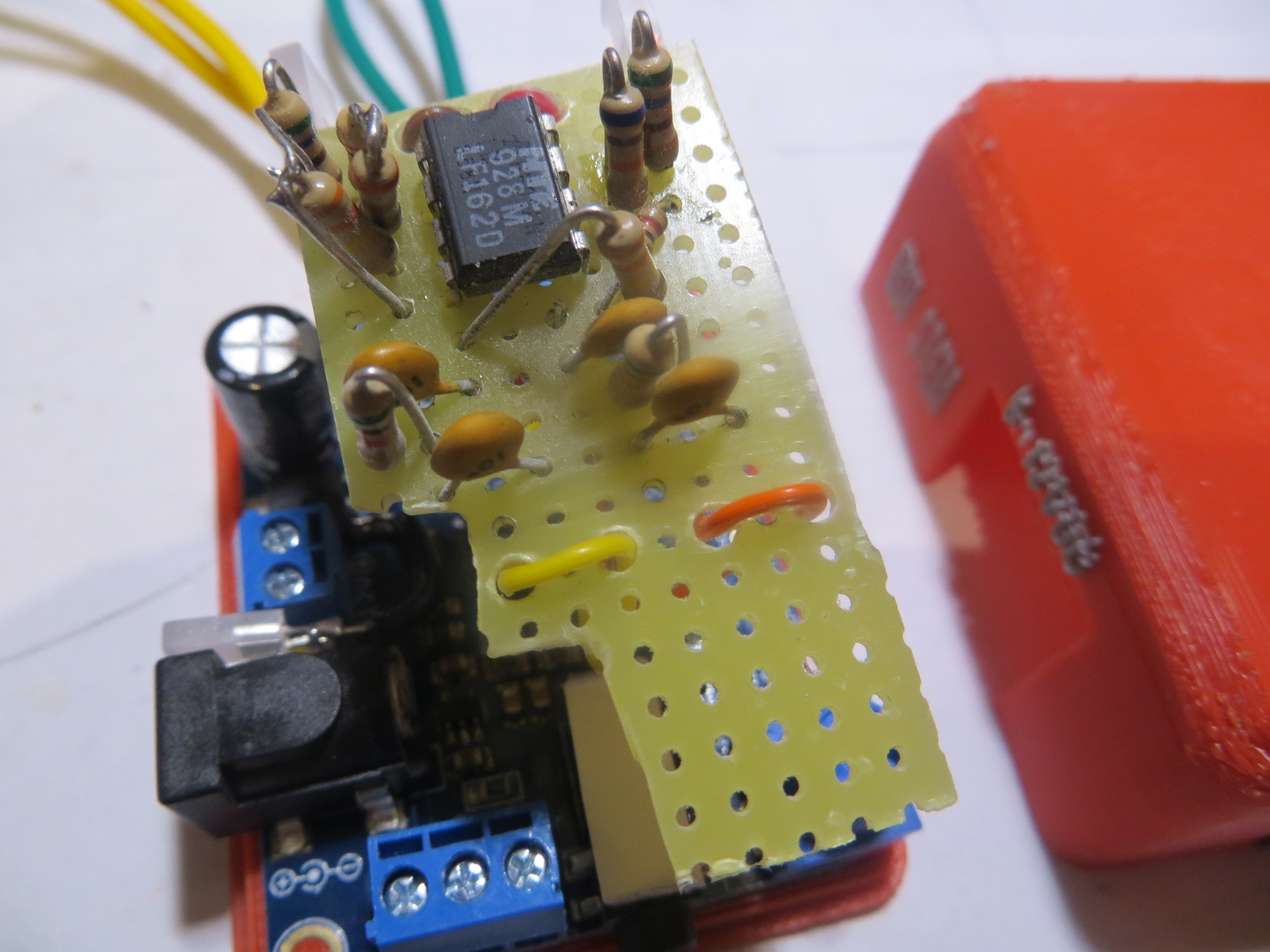

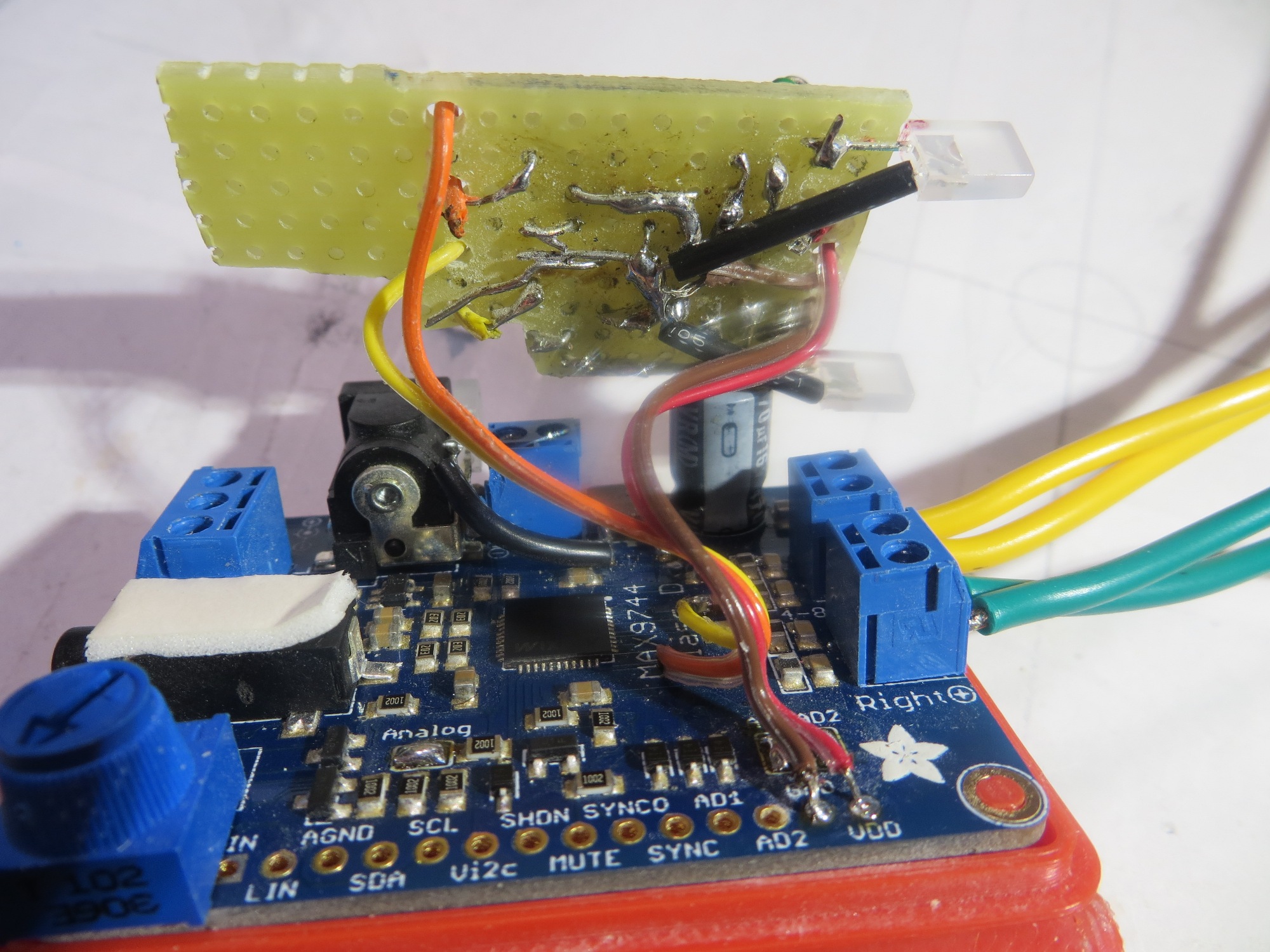

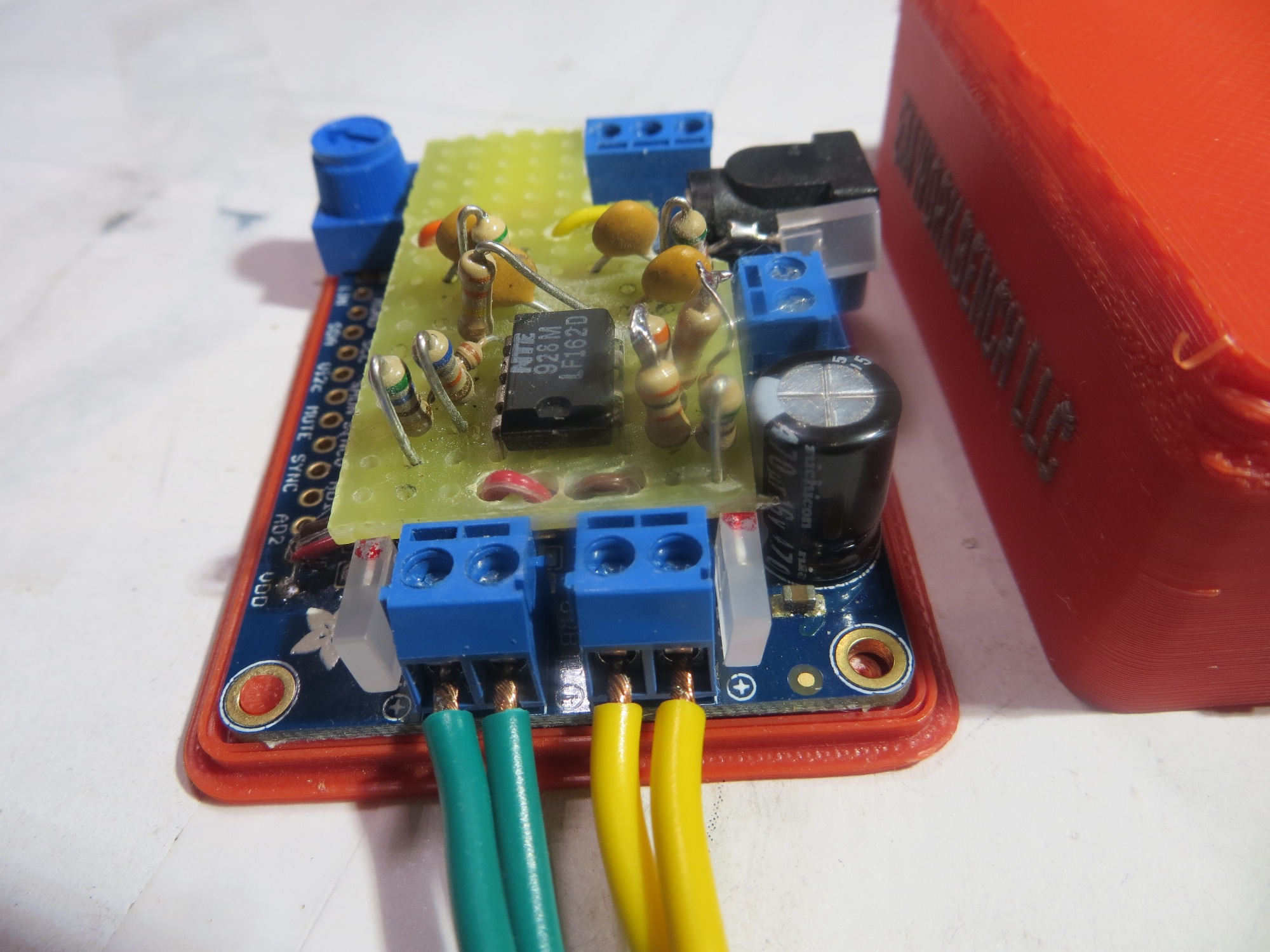

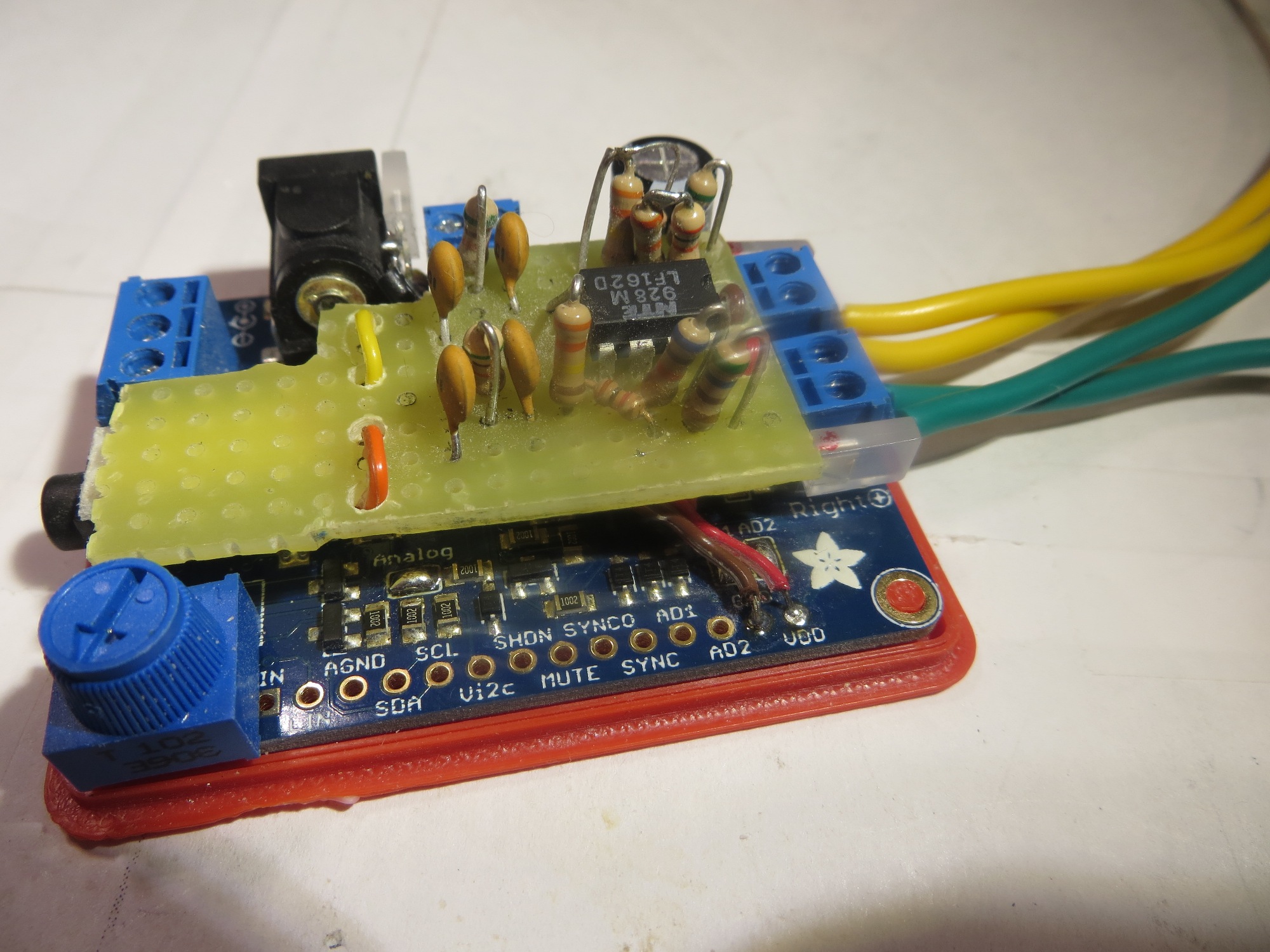

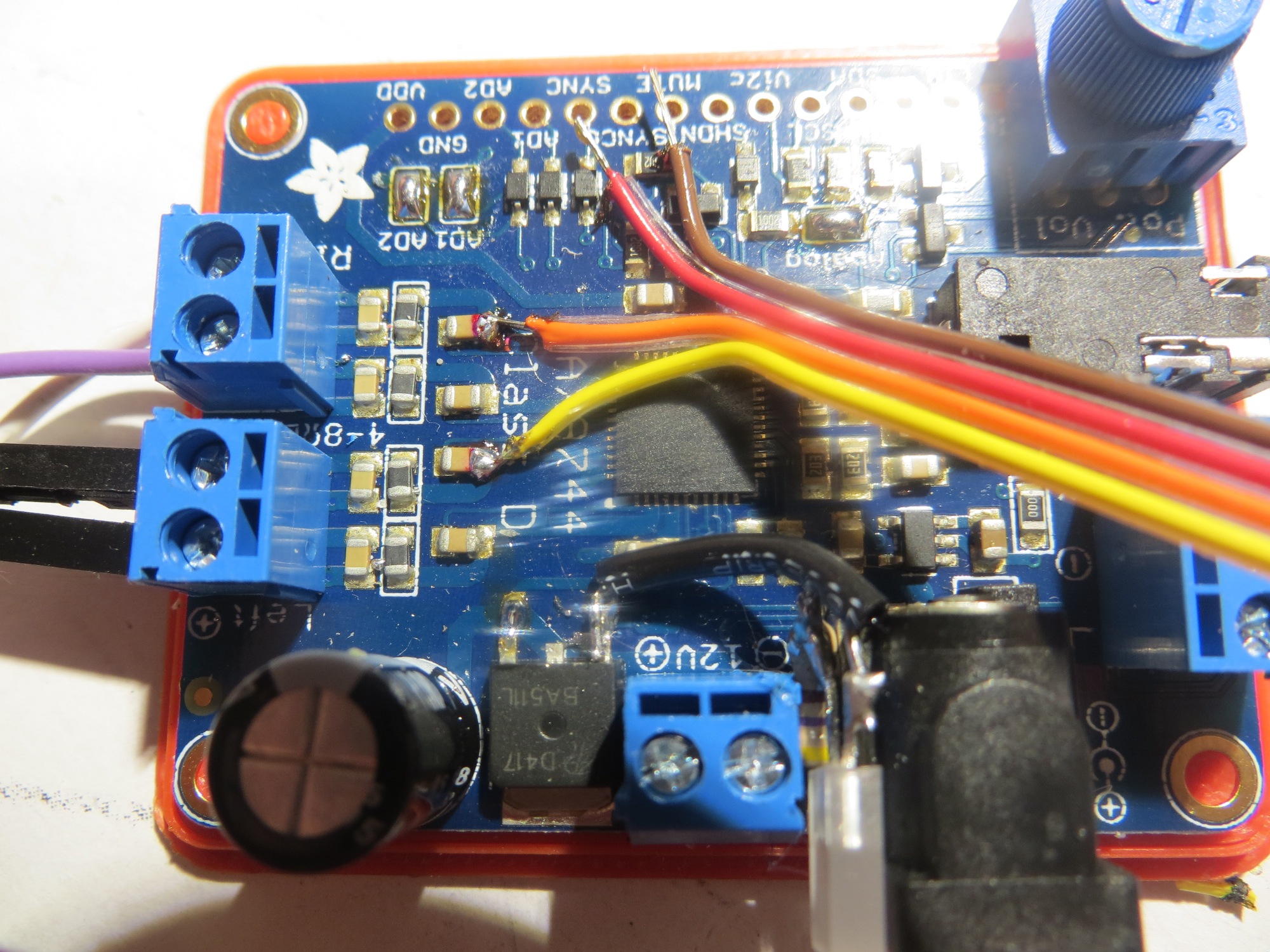

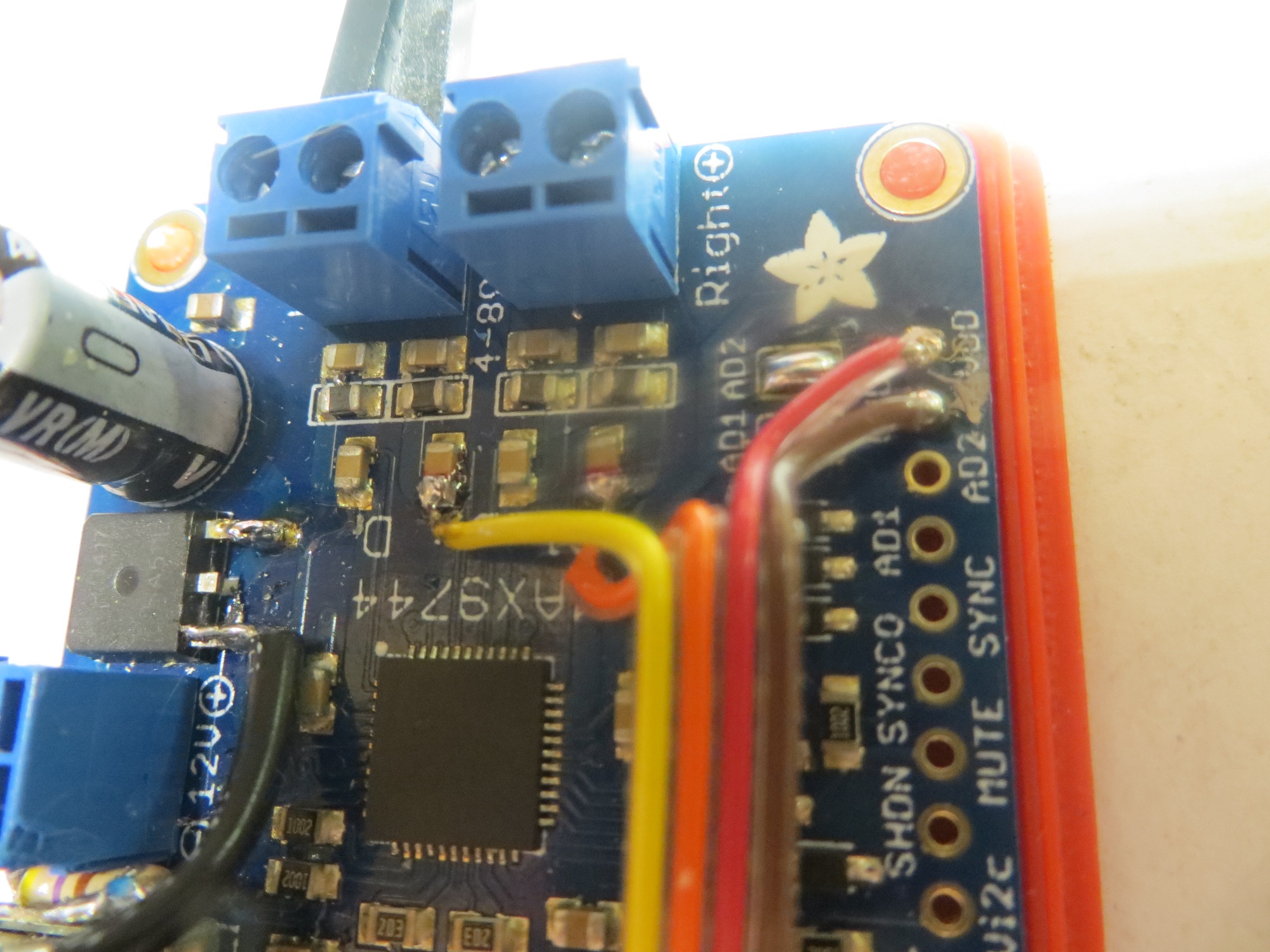

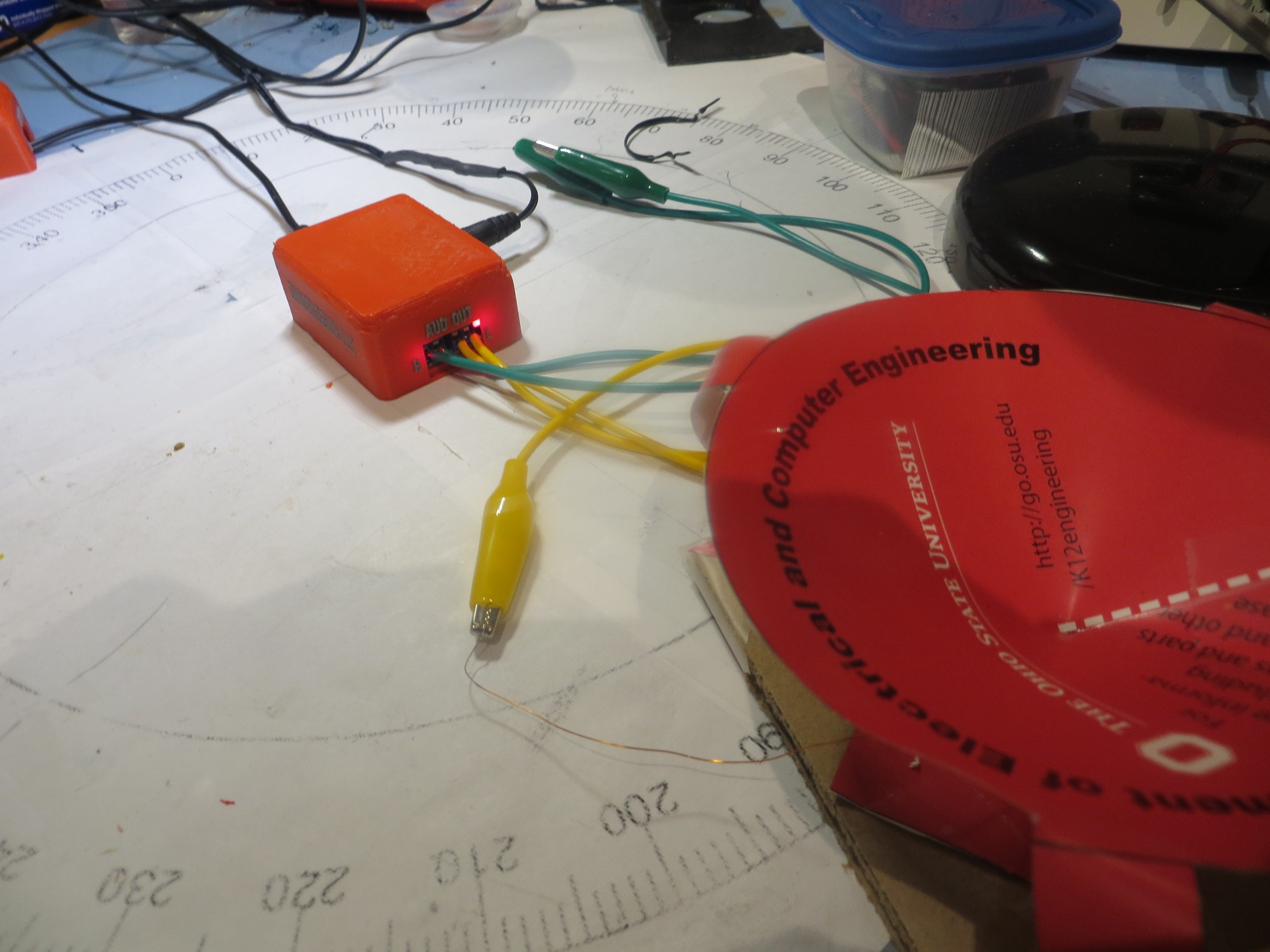

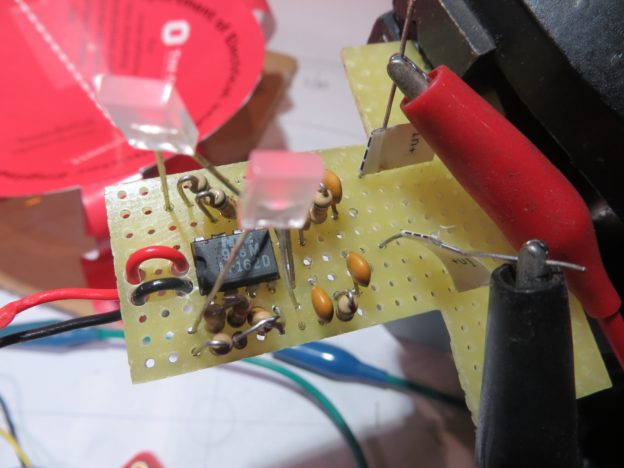

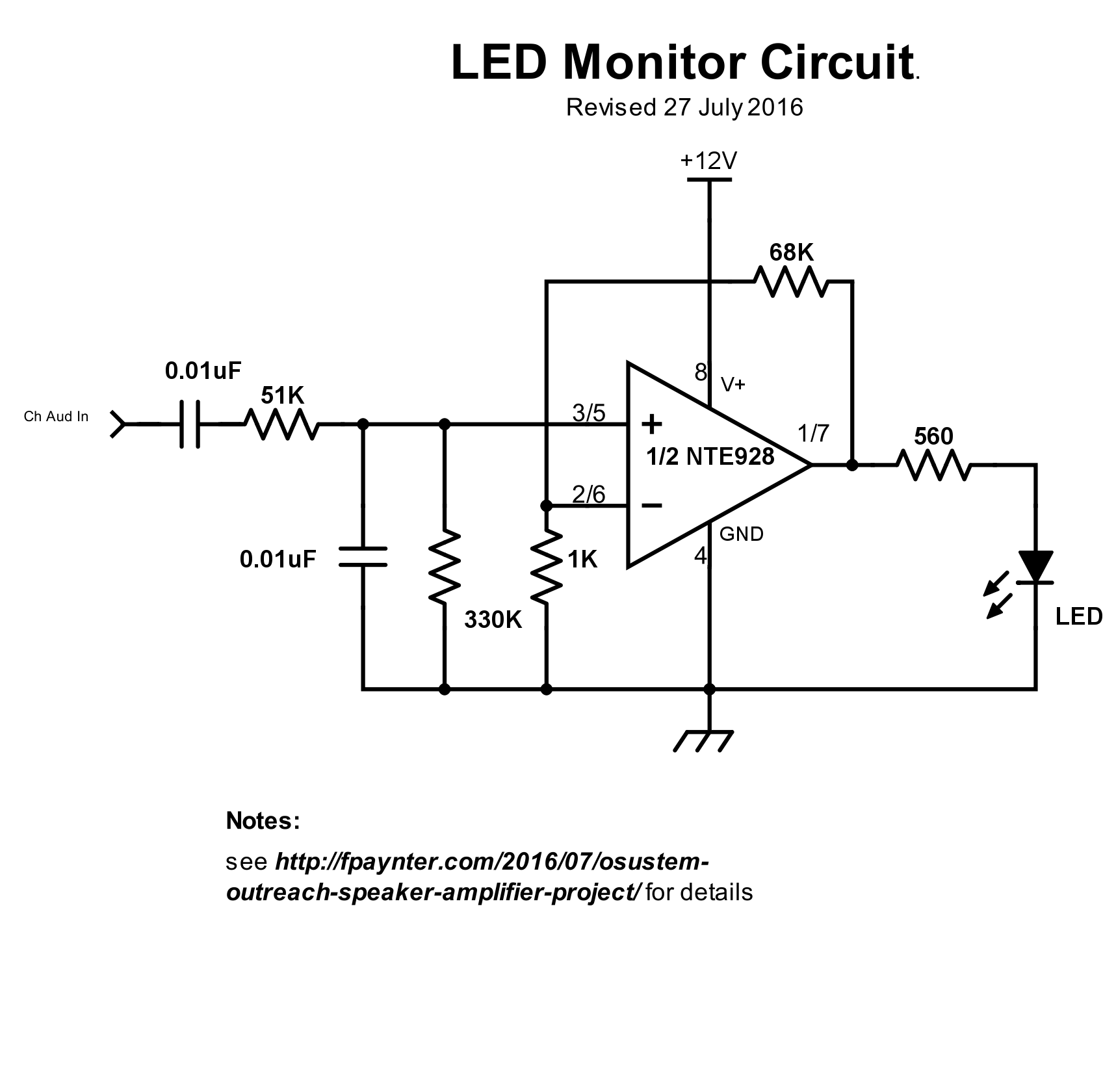

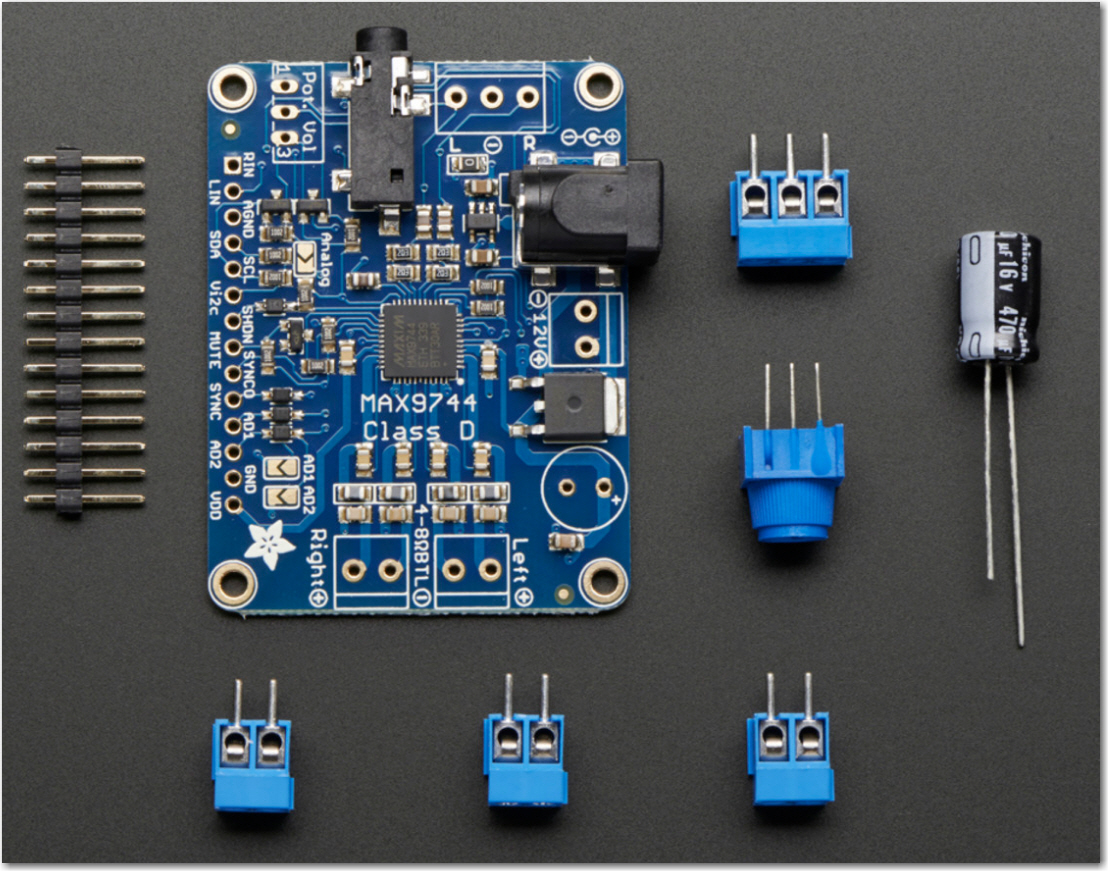

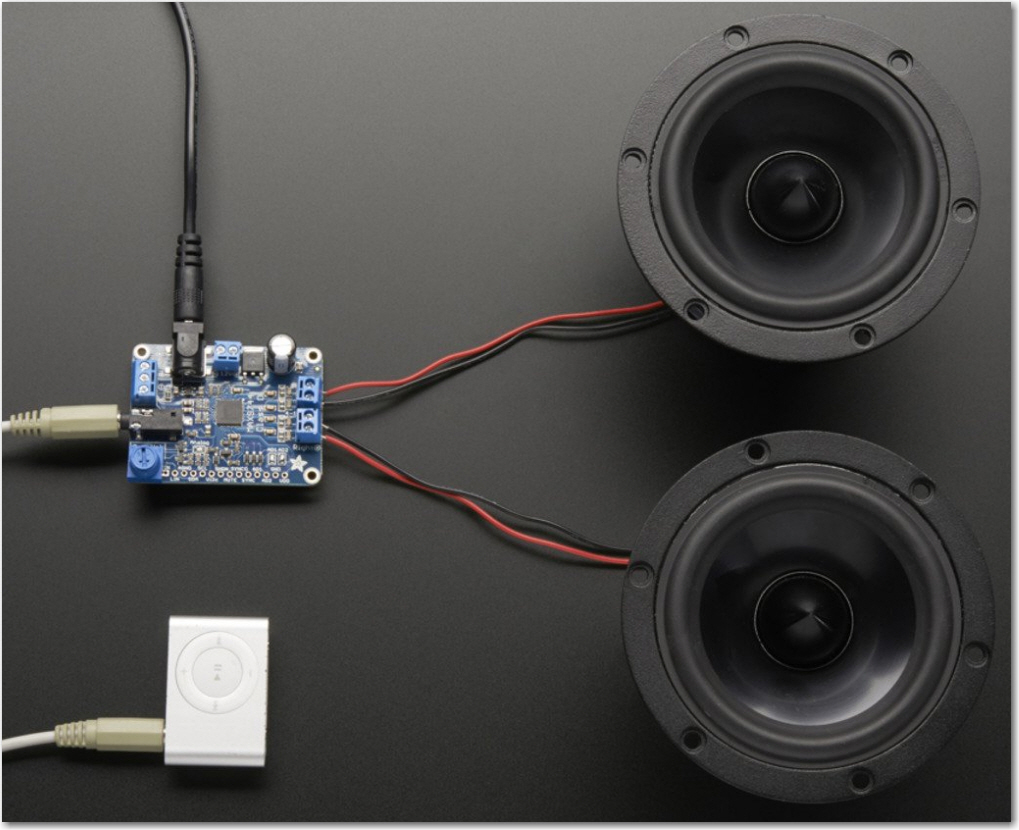

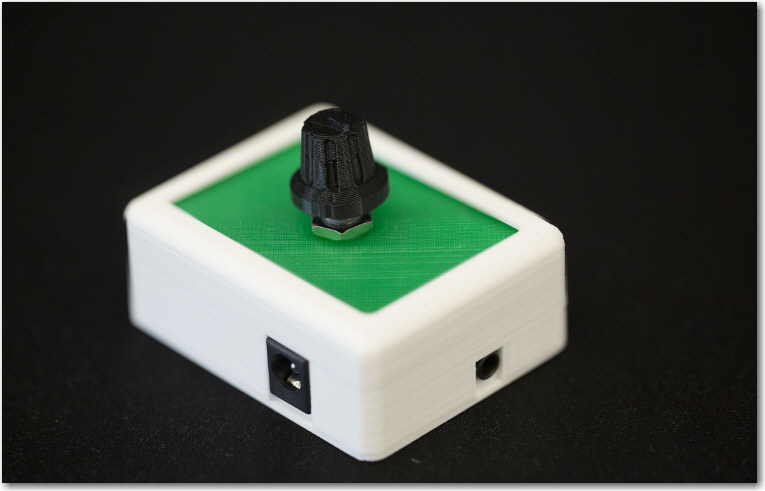

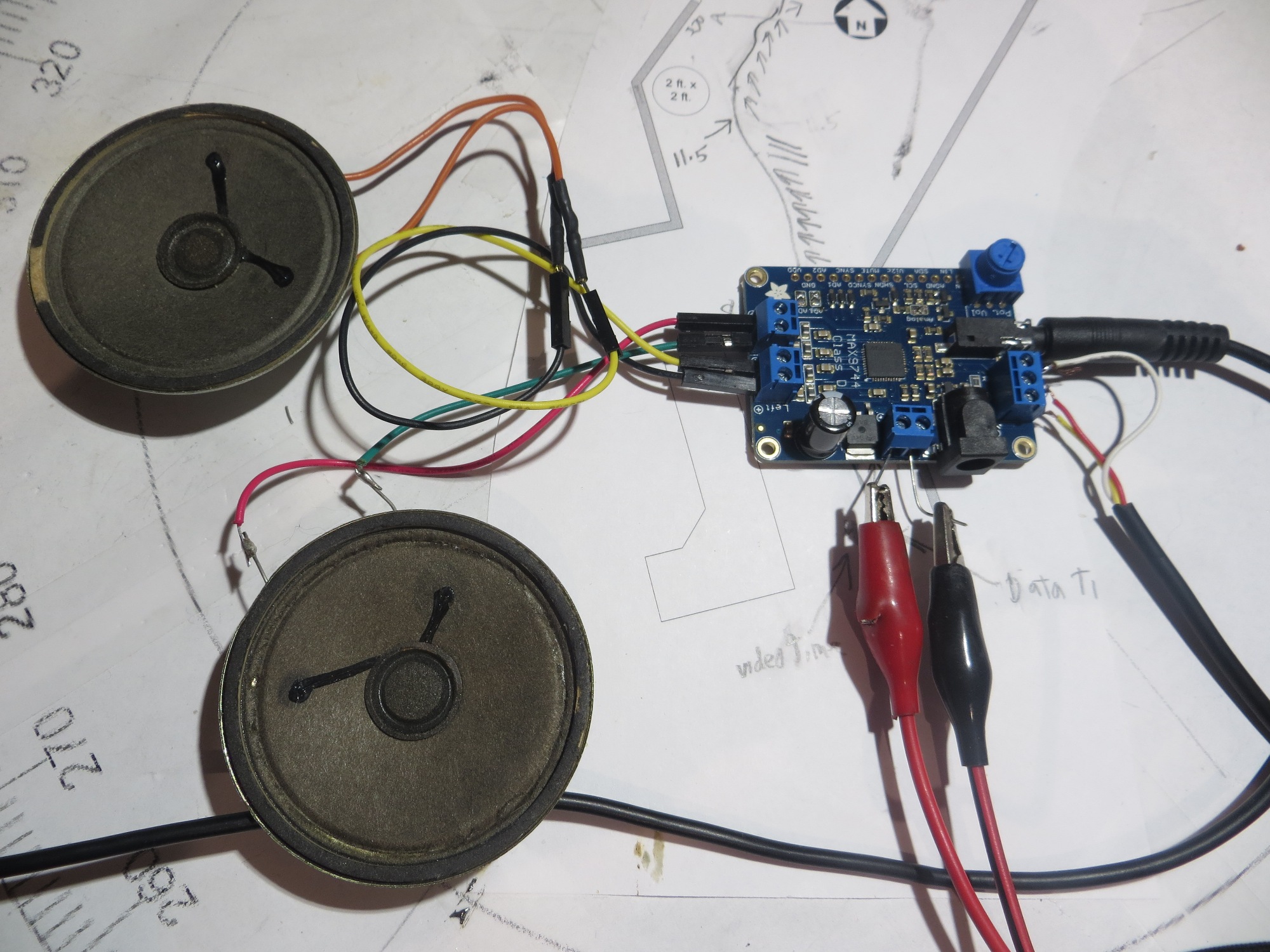

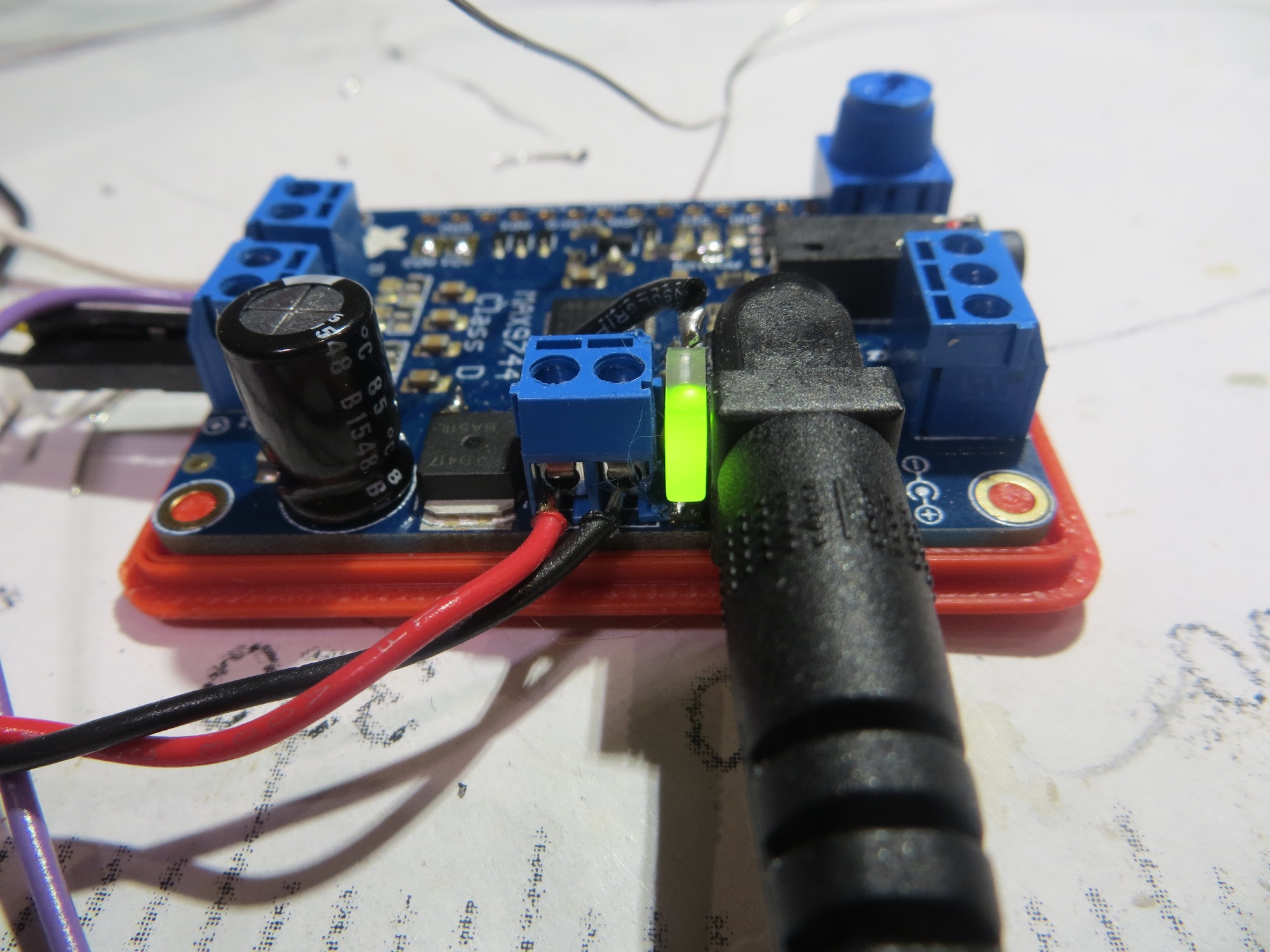

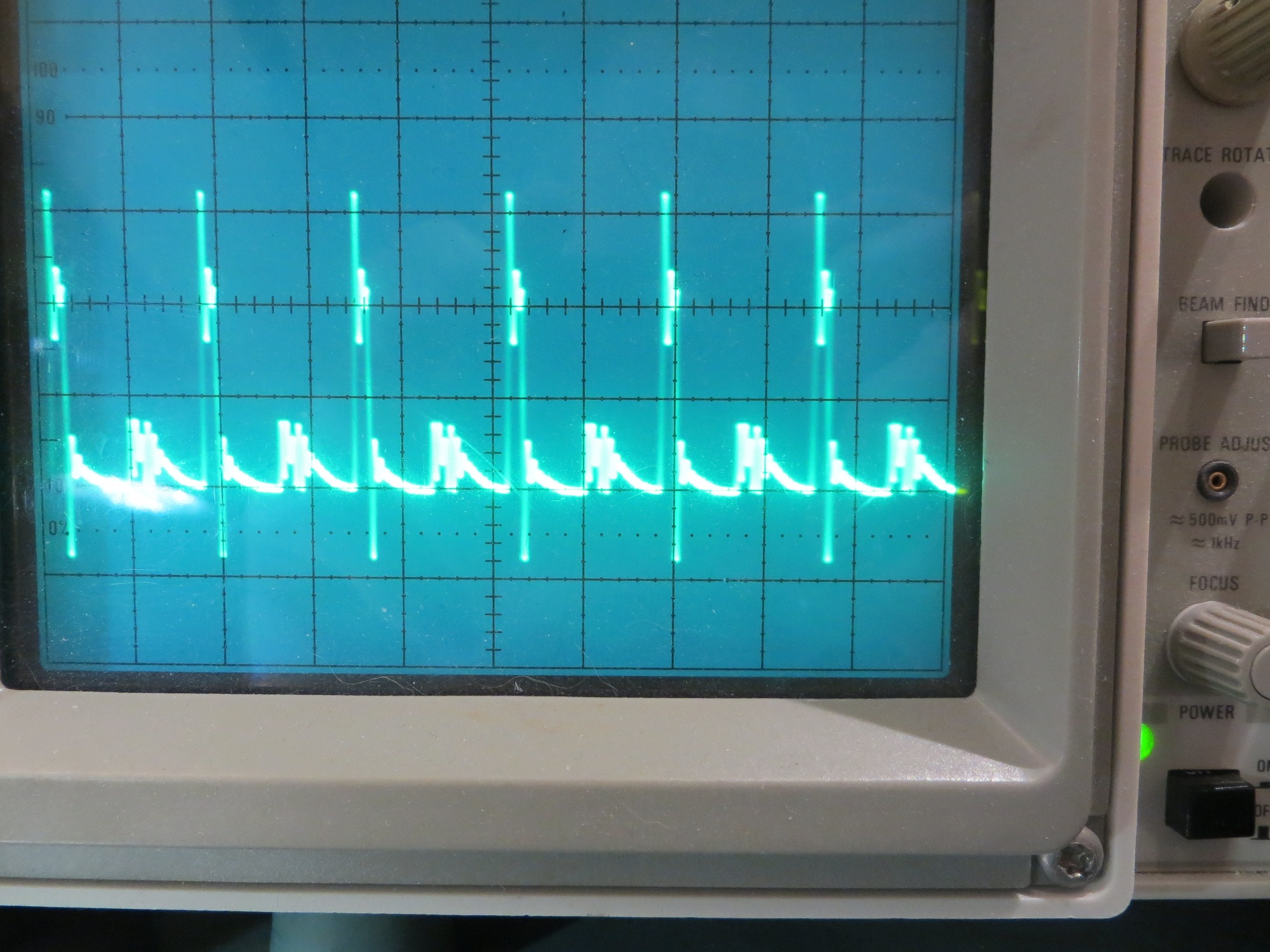

After the July tests, I knew something was badly wrong, but I didn’t have a clue what it was, so I decided to put the problem down and let my subconscious poke at it for a while. In the interim I had a wonderful time with my two grandchildren (ages 13 & 15) and a real 3D printing geek-fest with the younger of the two. I also got involved in creating a small audio amplifier in support of the Engineering Outreach program here at ‘The’ Ohio State University.

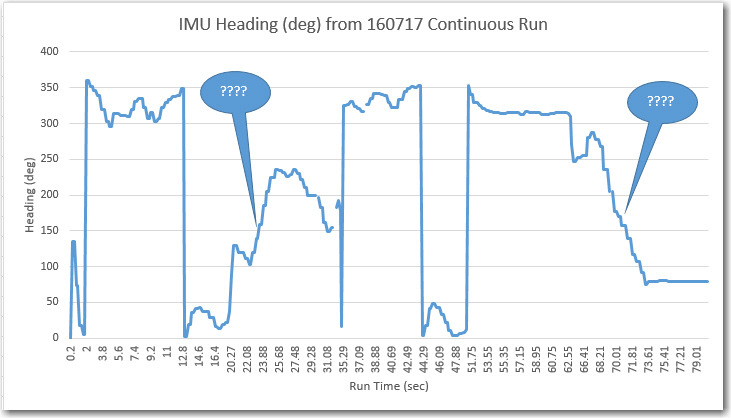

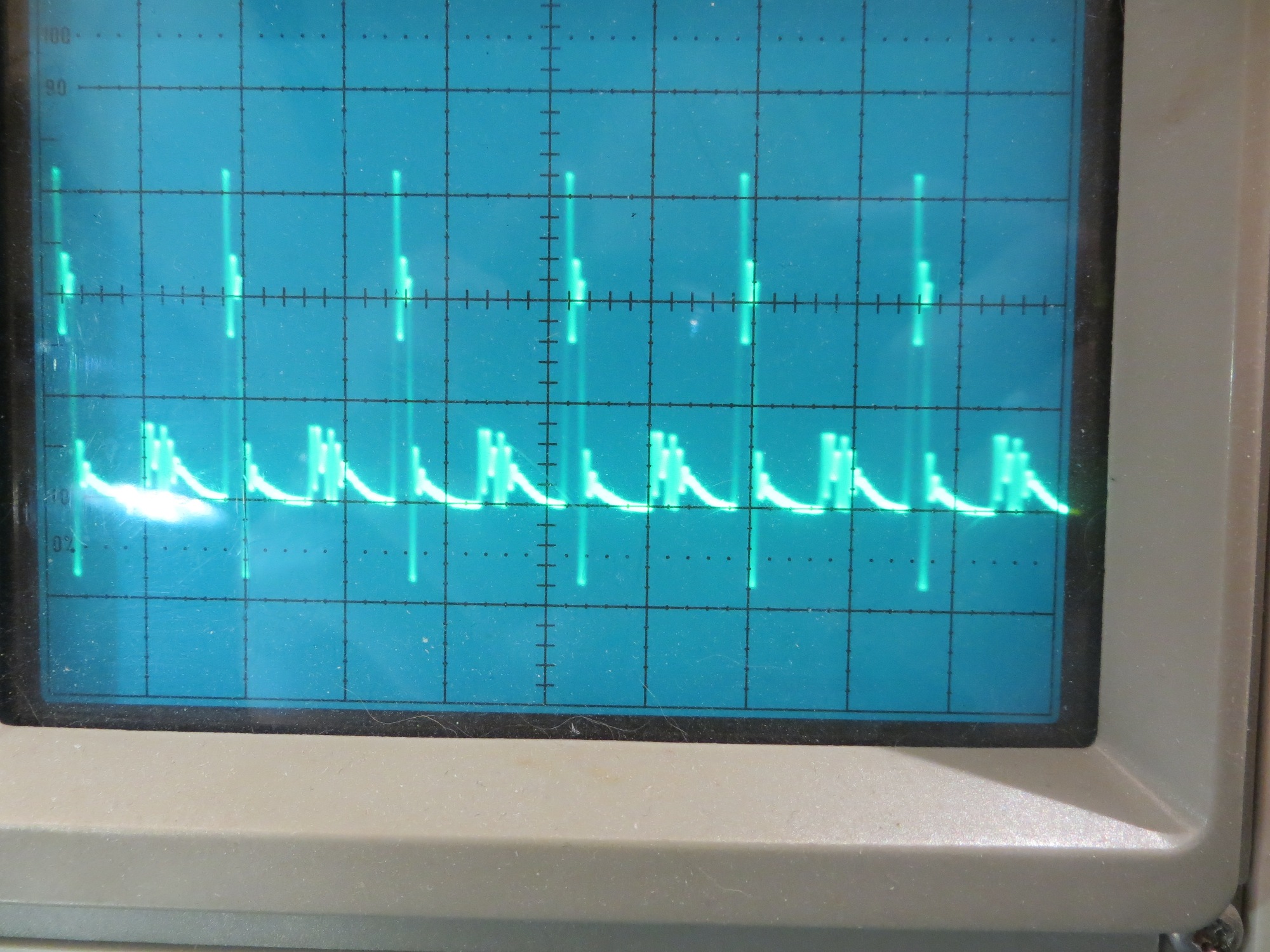

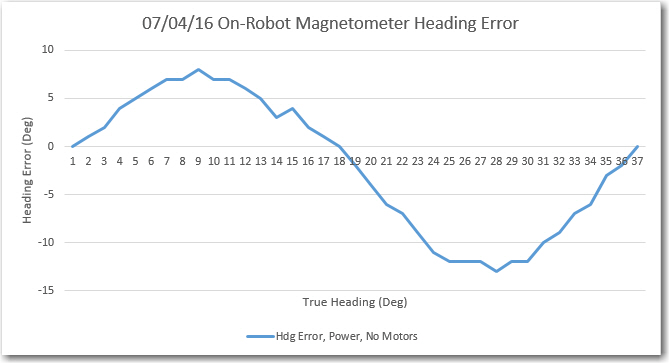

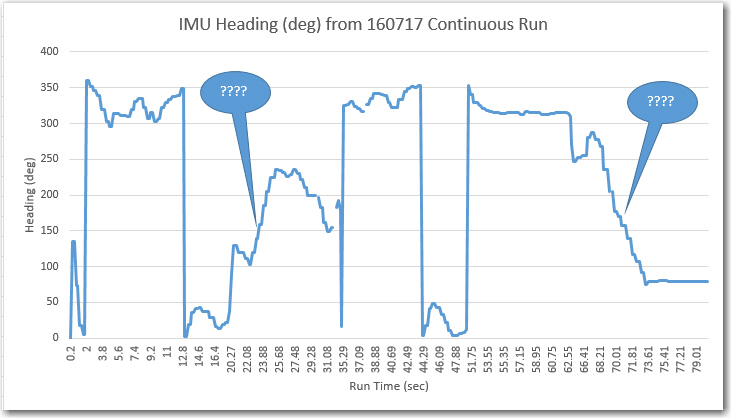

So now, after almost a month off, I’m back on the case again, trying to make sense of that clearly erroneous (or at least incomprehensible) data, shown below (repeated from my previous post):

July continuous run test, showing two areas where the reported headings don’t match reality

The two areas marked with ‘???’ correspond to the times when the robot was traversing the west (garage side) wall of the entry hall, the first time going north and the second time going south. The robot was clearly moving in a mostly constant direction, so the data obviously doesn’t reflect the robot’s actual heading. However, on the other (east) side of the entry hallway the data looks much better, so I began to think there was maybe something about the west wall that was screwing up the magnetometer data.

As usual with experimentation, it is important to design experiments where the number of variables is kept to the minimum, ideally just one. By keeping all other parameters fixed, any variation in the data must be due solely to that one variable. In this case, there were several variables that needed to be considered:

- The anomalous data might be due to some changes in motor current. When the robot is wall following, there are constant small changes to the left & right motor currents. When the robot encounters an obstacle, it backs up and spins around, and this entails radical changes in motor current direction and amplitude.

- The anomaly might be due to some timing issue. It could be, for instance, that the heading data from the magnetometer is coming in too fast for the comm link to handle, so it starts becoming decoupled from the actual robot position/orientation, and then catches up again at some other point.

- The anomaly might be due to some physical characteristic of the entry hallway. The west side is where the most obvious anomalies occurred, and that wall is common with the garage. Maybe something in the garage (cars, tools, electrical wiring, …) is causing the problem.

Using my new magnetometer calibration utility, Wall-E2’s magnetometer has been calibrated with the motors running and with them off, and there was very little difference between the two calibration matrices. Moreover, my bench testing has shown very little heading change regardless of motor current/direction. So although I couldn’t rule it out completely, I didn’t see the first item above as a viable suspect.
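For readers wondering what "applying a calibration matrix" amounts to in practice, here is a minimal Python sketch. It is not the code running on Wall-E2 or in the calibration utility; it assumes the common form in which a hard-iron offset vector is subtracted from the raw reading and the result is multiplied by a 3x3 correction matrix. The function names, the axis convention (x forward, y to the right, sensor level) and all numbers are hypothetical.

```python
import math

def apply_calibration(raw, offset, matrix):
    """Return matrix @ (raw - offset) for one 3-axis magnetometer reading.

    Assumed calibration form: 'offset' is a hard-iron bias vector and
    'matrix' is a 3x3 soft-iron correction matrix.
    """
    centered = [r - o for r, o in zip(raw, offset)]
    return [sum(m * c for m, c in zip(row, centered)) for row in matrix]

def heading_deg(mx, my):
    """Heading in degrees, 0 <= h < 360, from the horizontal field components.

    Assumes a level sensor with x pointing forward and y pointing right,
    so 0 means the robot faces magnetic north and 90 means east.
    """
    return math.degrees(math.atan2(-my, mx)) % 360.0

if __name__ == "__main__":
    # Made-up calibration values and raw reading, for illustration only.
    offset = [12.0, -30.0, 5.0]
    matrix = [[1.02, 0.01, 0.00],
              [0.01, 0.98, 0.00],
              [0.00, 0.00, 1.00]]
    raw = [212.0, -130.0, -395.0]
    mx, my, mz = apply_calibration(raw, offset, matrix)
    print(f"calibrated heading: {heading_deg(mx, my):.1f} deg")
```

Running the same raw reading through the motors-on and motors-off calibrations and comparing the two headings is one way to put a number on "very little difference".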

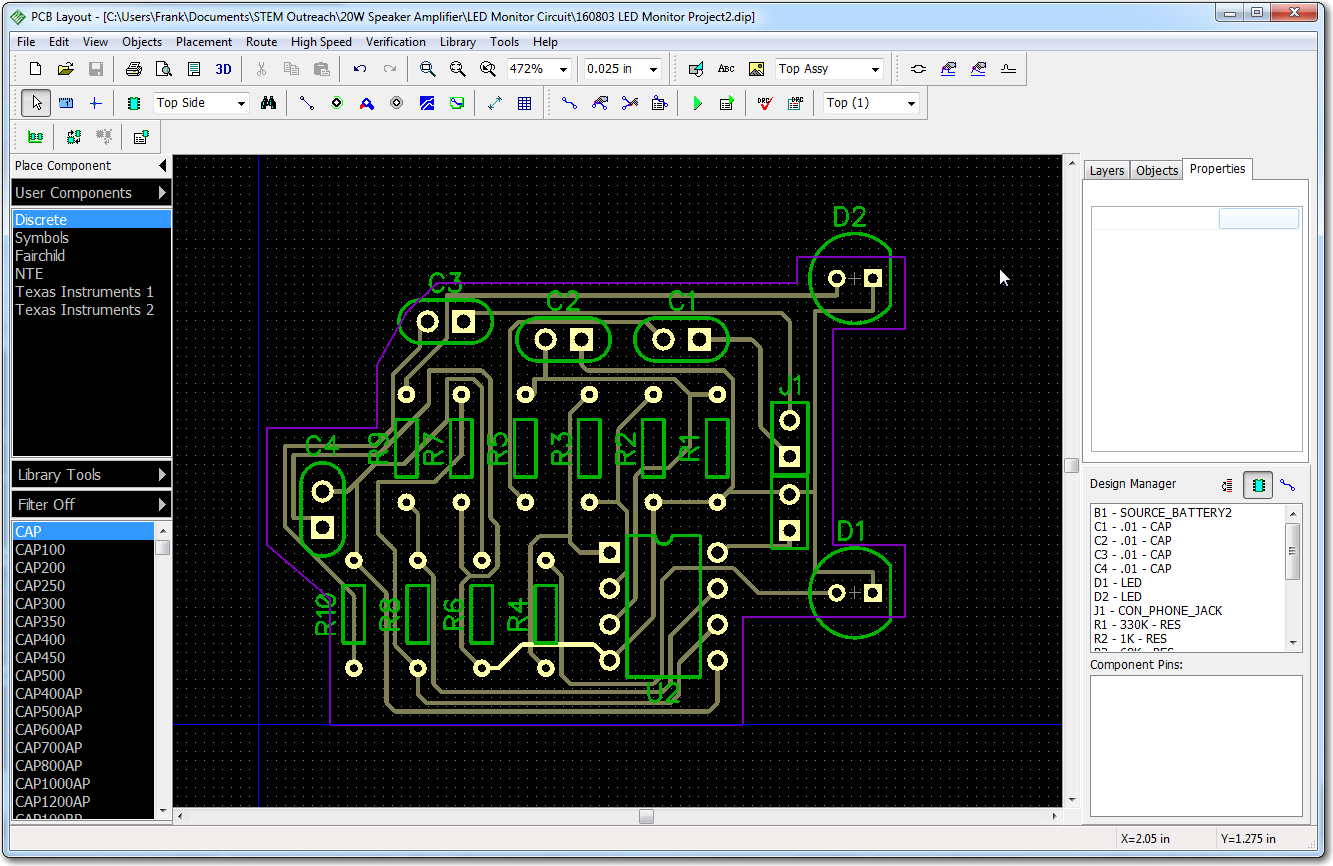

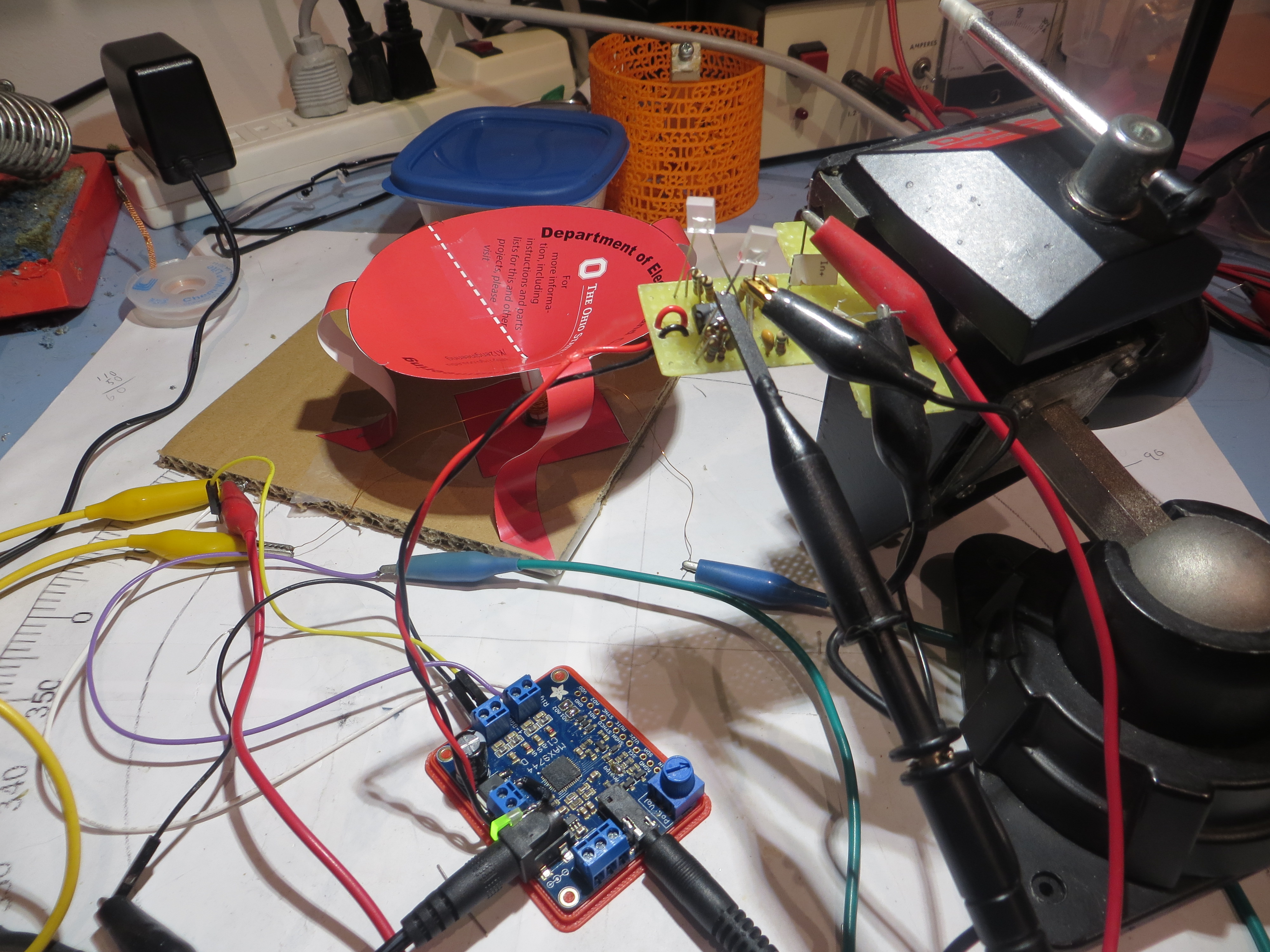

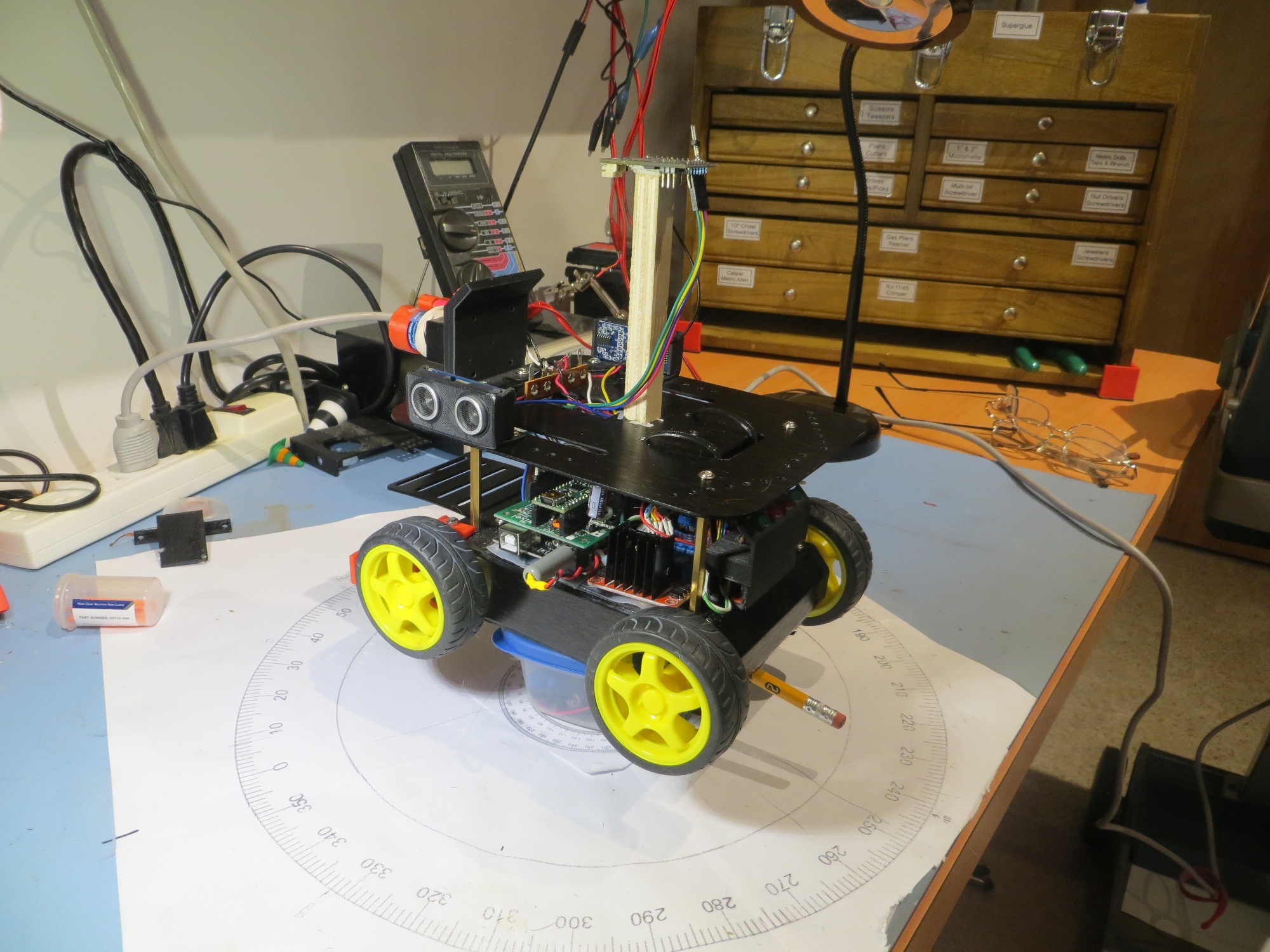

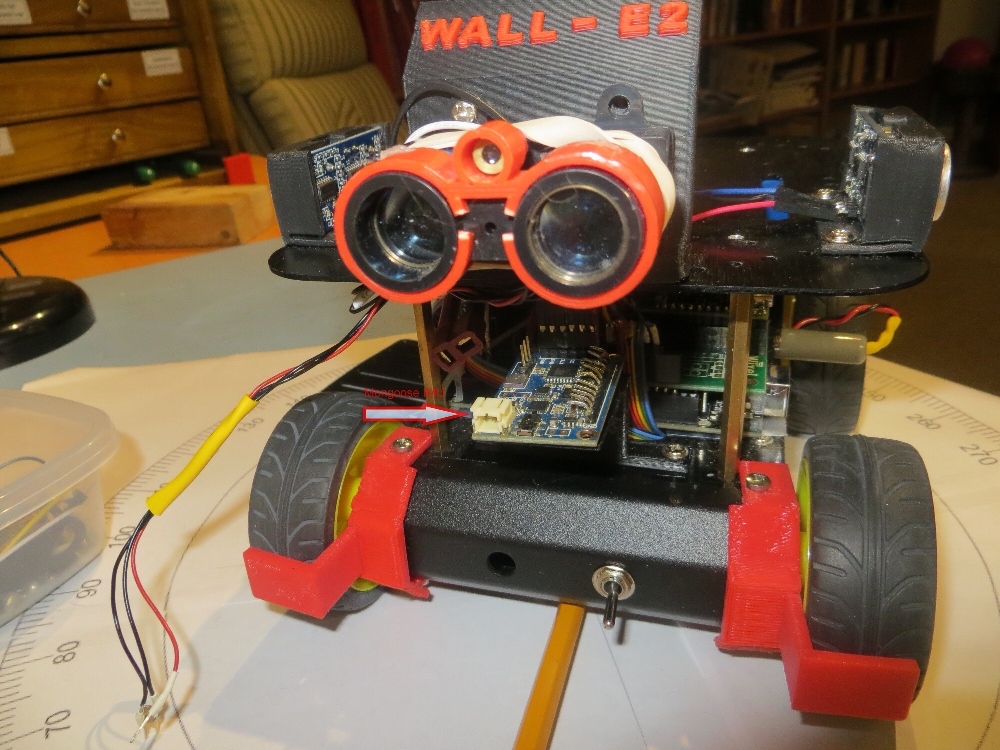

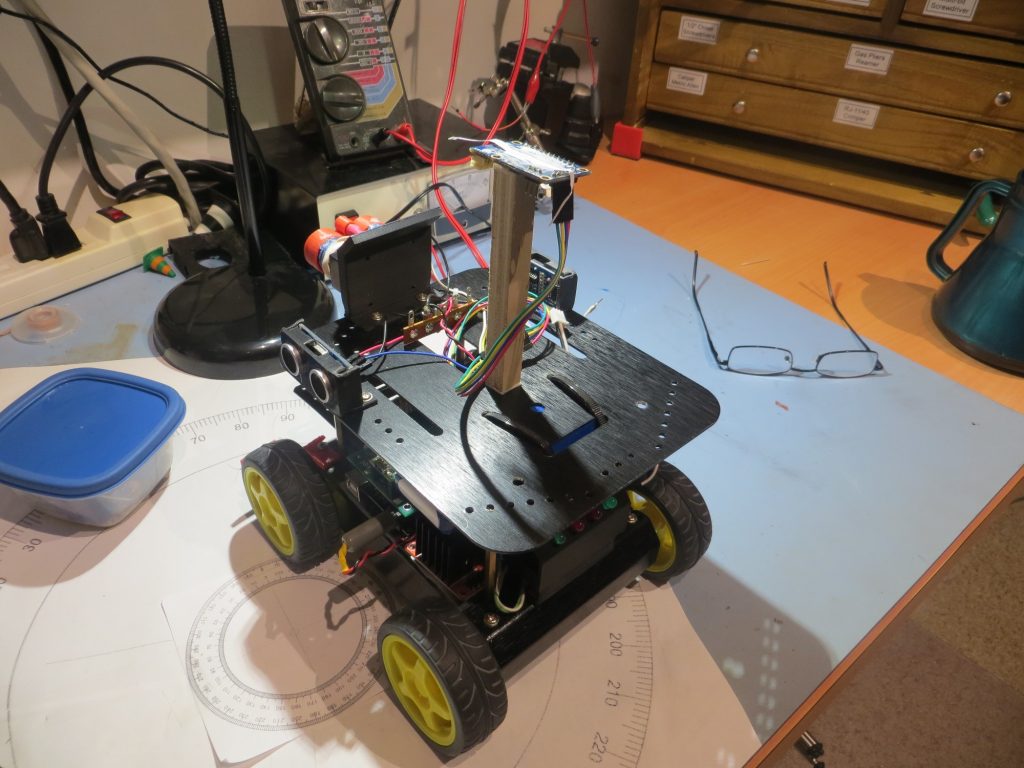

Although I haven’t seen any timing issues during my bench testing, this one remained a viable suspect IMHO, as did the idea of a physical anomaly, so my experimental design had to discriminate between these two possibilities. To eliminate timing as the root cause, I ran a series of experiments in which the robot was placed on a pedestal (so its wheels were clear of the floor) at several fixed spots in the entry hallway, and heading data was collected for several seconds at each spot. The following images show the layout and the robot/pedestal arrangement:

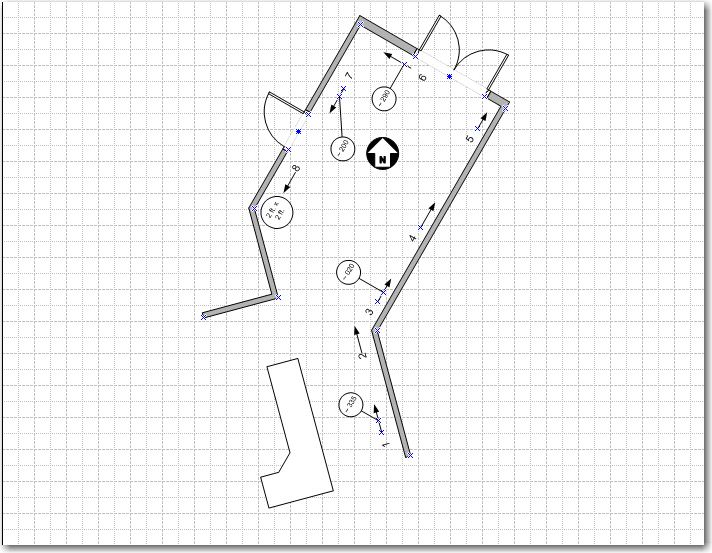

Experimental layout. Blue spots correspond to the numbered/lettered positions in the layout diagram

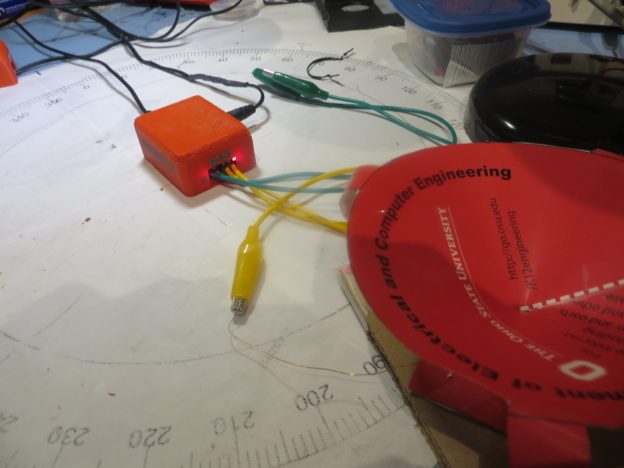

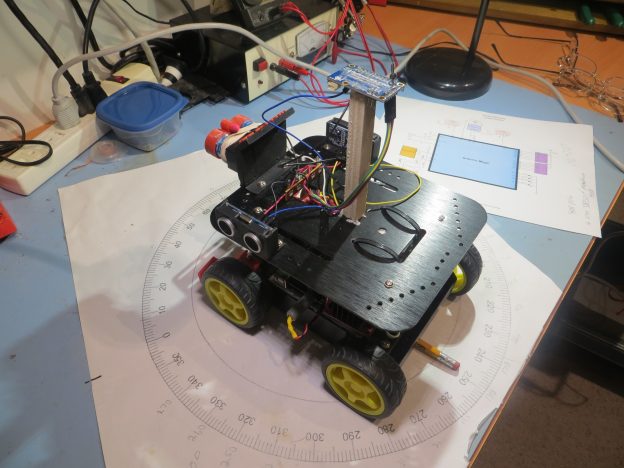

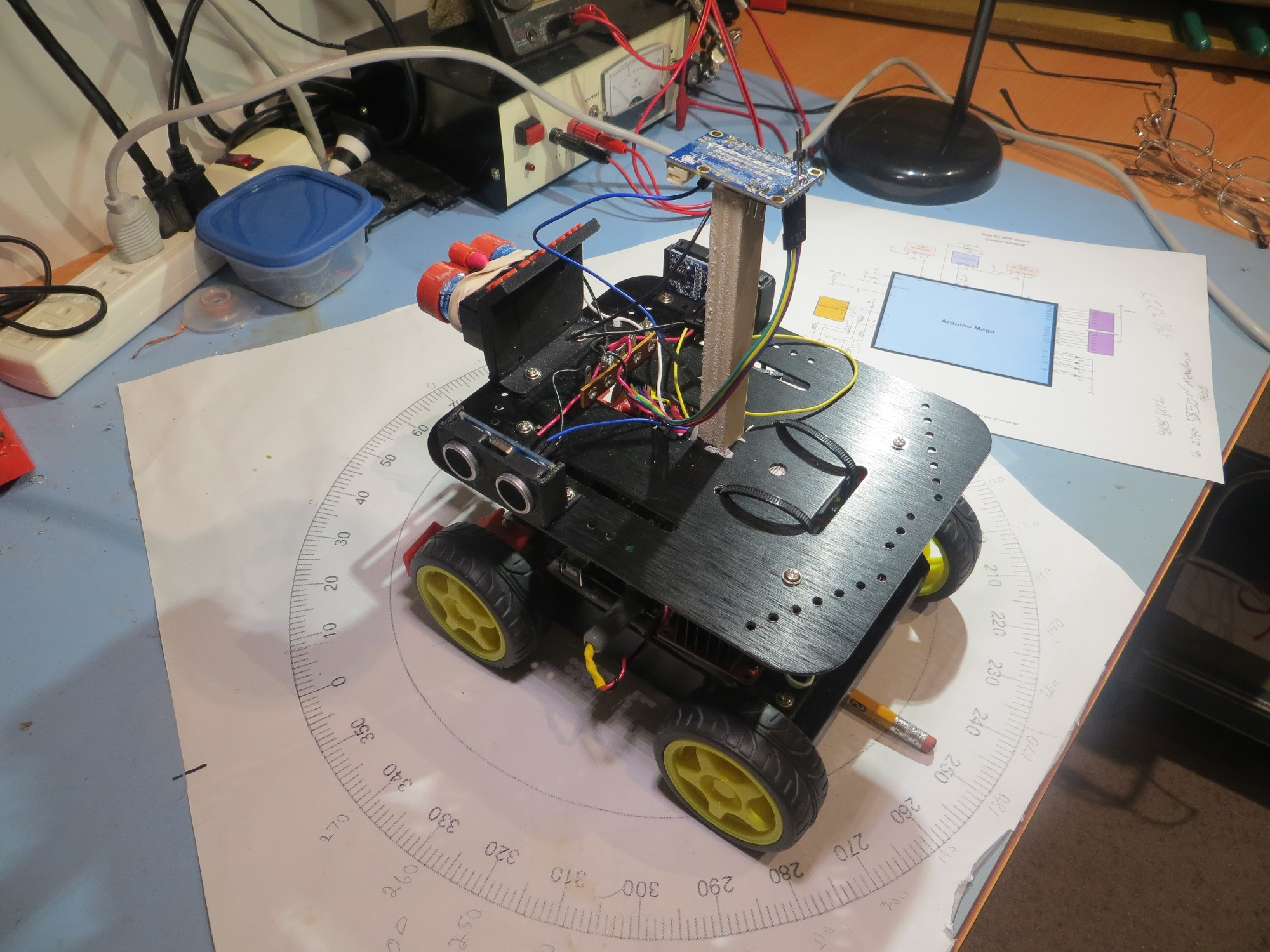

Wall-E2 on a pedestal (at position ‘A’ in diagram) so motors can run normally without moving the robot

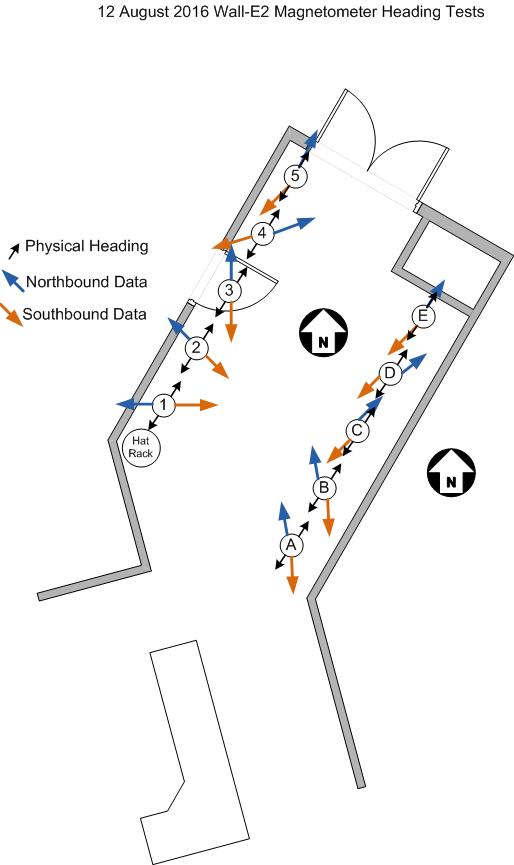

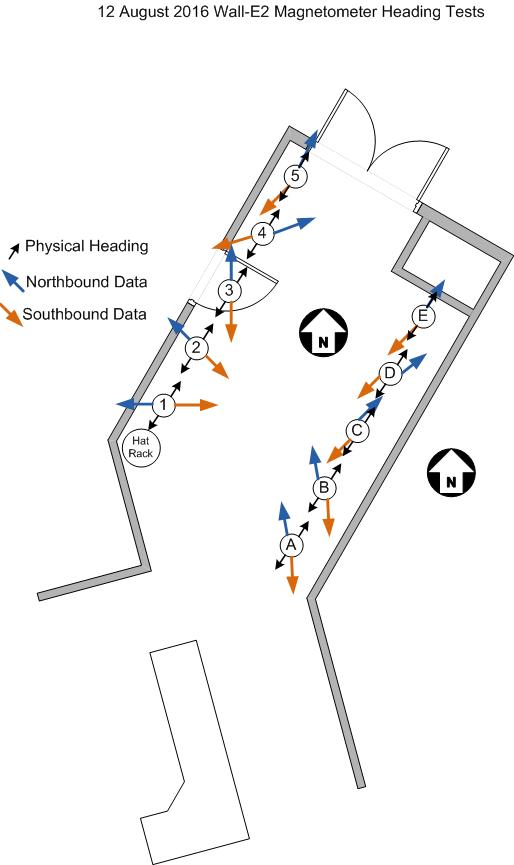

10-12 August Mag Heading Field Test Layout

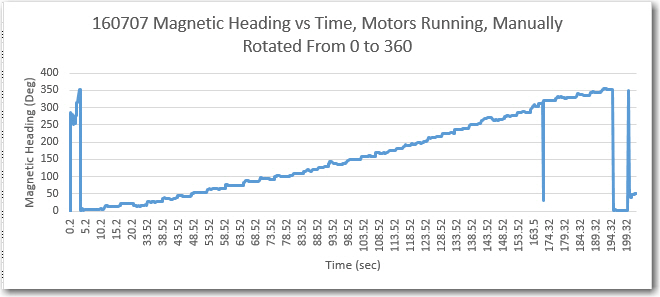

Data was collected at the positions shown by the numbers from 1 to 5 along the west (garage) wall, and by the letters from A to F along the east wall. At each position the robot was placed on a pedestal and allowed to run for several seconds. If the heading errors are caused by the physical characteristics of the hallway, then the collected data should be constant for each spot, and the data should correspond well to the heading data from my earlier continuous runs.
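The "constant for each spot" test can be made concrete with a little circular statistics, since headings wrap around at 360 degrees and a plain average of 350 and 10 would give a misleading 180. The sketch below is only an illustration with made-up sample values; the function names are hypothetical and this is not the processing actually used for the plots in this post.

```python
import math

def circular_mean_deg(headings):
    """Mean direction of a list of headings in degrees, wrap-around safe."""
    s = sum(math.sin(math.radians(h)) for h in headings)
    c = sum(math.cos(math.radians(h)) for h in headings)
    return math.degrees(math.atan2(s, c)) % 360.0

def max_deviation_deg(headings):
    """Largest absolute angular distance of any sample from the circular mean."""
    mean = circular_mean_deg(headings)
    # (h - mean + 180) % 360 - 180 maps the difference into [-180, 180).
    return max(abs((h - mean + 180.0) % 360.0 - 180.0) for h in headings)

if __name__ == "__main__":
    # Made-up samples for one pedestal position, for illustration only.
    samples = [358.0, 1.5, 0.5, 359.0, 2.0]
    print(f"mean heading  : {circular_mean_deg(samples):.1f} deg")
    print(f"max deviation : {max_deviation_deg(samples):.1f} deg")
```

A small maximum deviation at every pedestal position would argue against a timing problem, because nothing about the robot's position or orientation is changing while the samples come in.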

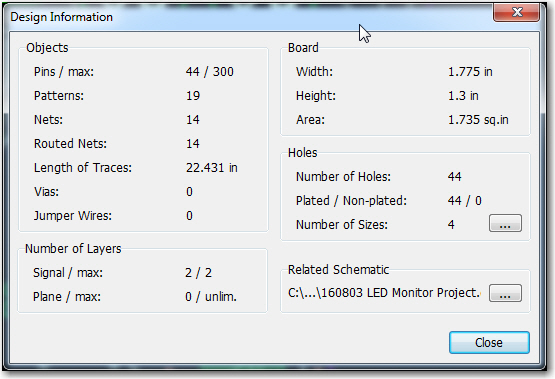

The above graphic shows the results of all 10 test positions. For each position, data was collected with the robot oriented ‘north’ (actually about 020 deg) and ‘south’ (actually about 200 deg), as denoted by the small black orientation arrows. The ‘north’ heading results are represented by the blue arrows, and the ‘south’ heading results are represented by the orange arrows. There is no meaning associated with the length of the arrows.

Results:

- Northbound and southbound data are almost exactly opposite each other at all points. To me, this indicates that the 3-axis magnetometer data and the heading values derived from that raw data are valid.

- The data clearly shows that there is a significant magnetic interferer in the vicinity of the west (garage) wall of the entry hallway. The west wall data is skewed more significantly than the east wall results, indicating that the magnitude of the interference decreases from west to east. The field from a compact (dipole-like) source falls off as the inverse cube of the distance, so doubling the distance cuts the field to about one eighth. From this I infer that the interferer is very close to the west wall (if it were farther away, the difference between the east and west wall results would be smaller, because the relative difference in distance would be smaller).

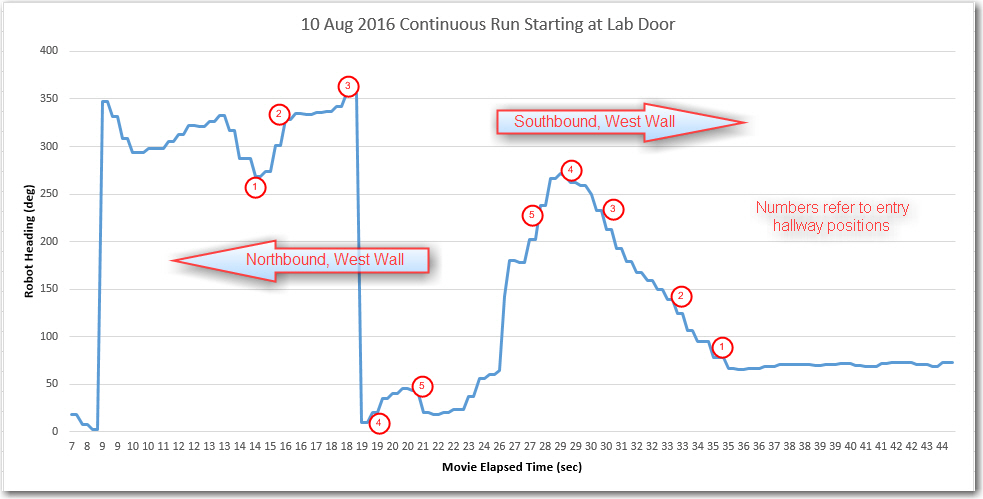

- The data at each position corresponds well with data from the same position and orientation from the various continuous runs. Given a continuous run and the knowledge of the interference pattern, it is possible to determine the robot’s location to a fair degree. The following image shows the heading results from a 10 August continuous run, labelled with the position numbers from the entry hall layout diagram. The positions were deduced from a movie of the run.

10 Aug continuous run, labelled with positions from the entry hall layout diagram
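The "almost exactly opposite" observation in the results above is easy to check numerically. The sketch below, with hypothetical function names and made-up heading pairs, computes how far a north/south pair at one position is from being exactly 180 degrees apart, taking care of the wrap-around at 360.

```python
def angle_diff_deg(a, b):
    """Signed difference a - b in degrees, wrapped into [-180, 180)."""
    return (a - b + 180.0) % 360.0 - 180.0

def reciprocal_error_deg(north, south):
    """How far two headings are from being exactly 180 degrees apart."""
    return abs(abs(angle_diff_deg(north, south)) - 180.0)

if __name__ == "__main__":
    # Made-up (north, south) heading pairs for illustration only.
    pairs = {"1": (20.0, 200.0), "2": (350.0, 165.0), "A": (75.0, 258.0)}
    for position, (north, south) in pairs.items():
        err = reciprocal_error_deg(north, south)
        print(f"position {position}: reciprocal error {err:.1f} deg")
```

Note that a pair can be nearly reciprocal and still both be far from the true 020/200 orientation: the interference bends the local field, but the sensor reports that bent field consistently in both directions.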

Conclusions:

- The magnetometer and the heading calculation algorithm are probably working correctly.

- Magnetic interference is certainly a problem in the entry hallway next to the garage, and may (or may not) be a problem elsewhere.

- Magnetic heading information may not be reliable/accurate enough to determine location with any precision, even coupled with left/right/front distances.

My next task is to run some continuous and step-by-step tests in other areas of the house, to determine whether the entry hallway issue is unique to that hallway or a ubiquitous problem.

Stay tuned!

Frank