Posted 15 January 2022

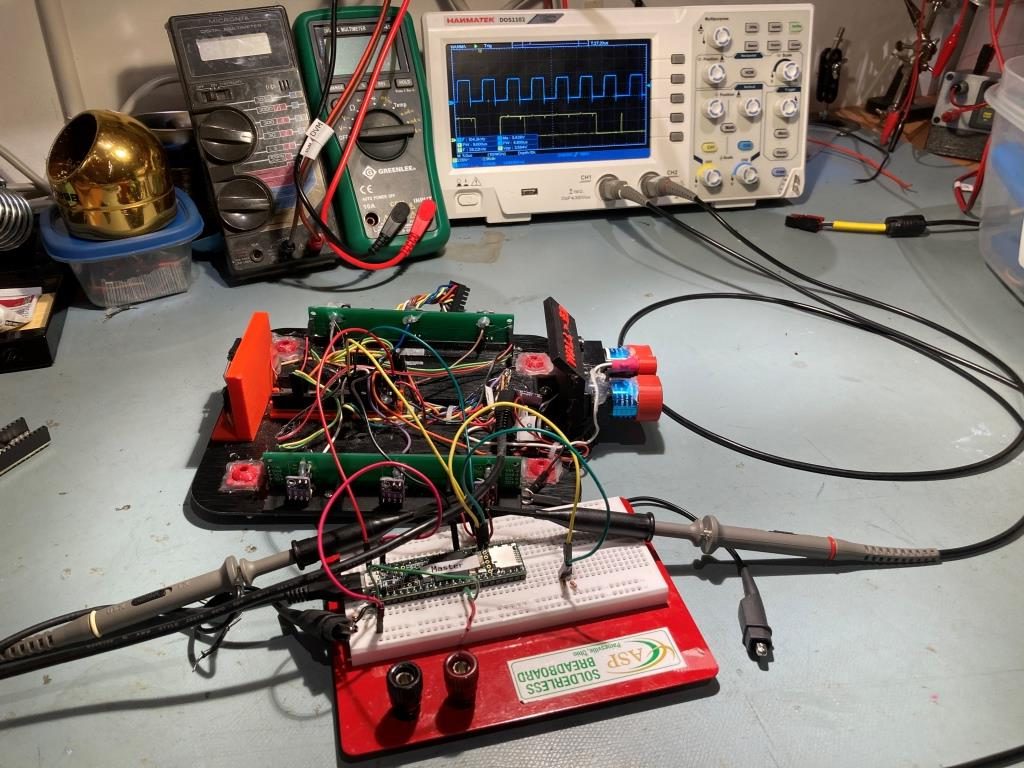

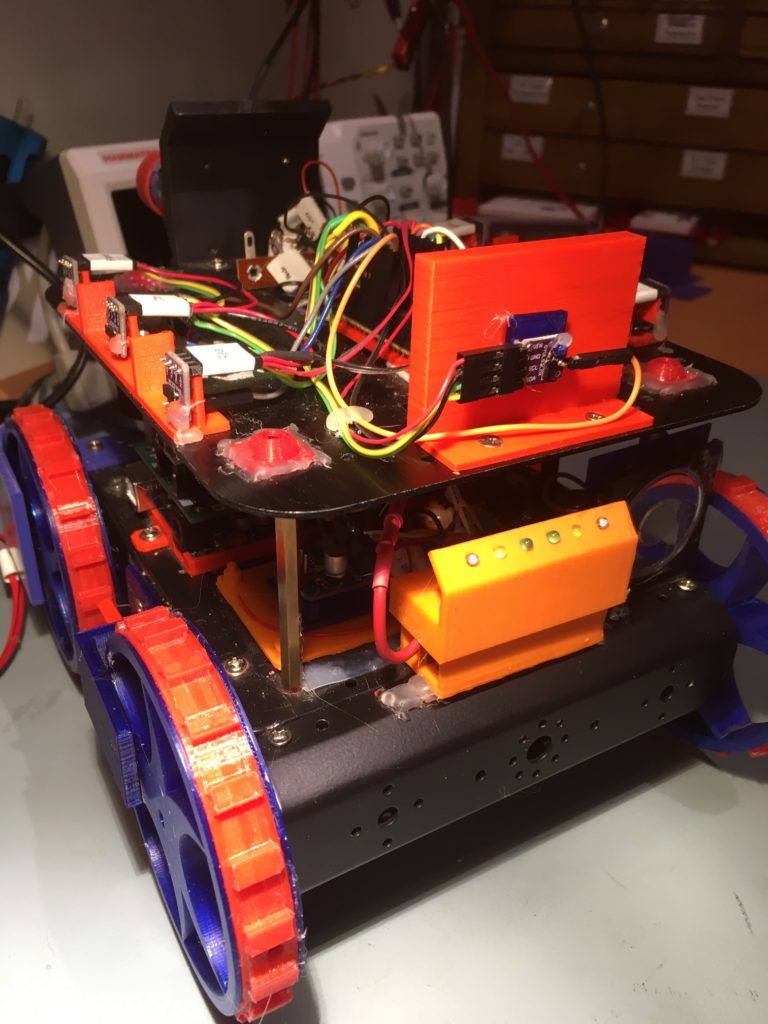

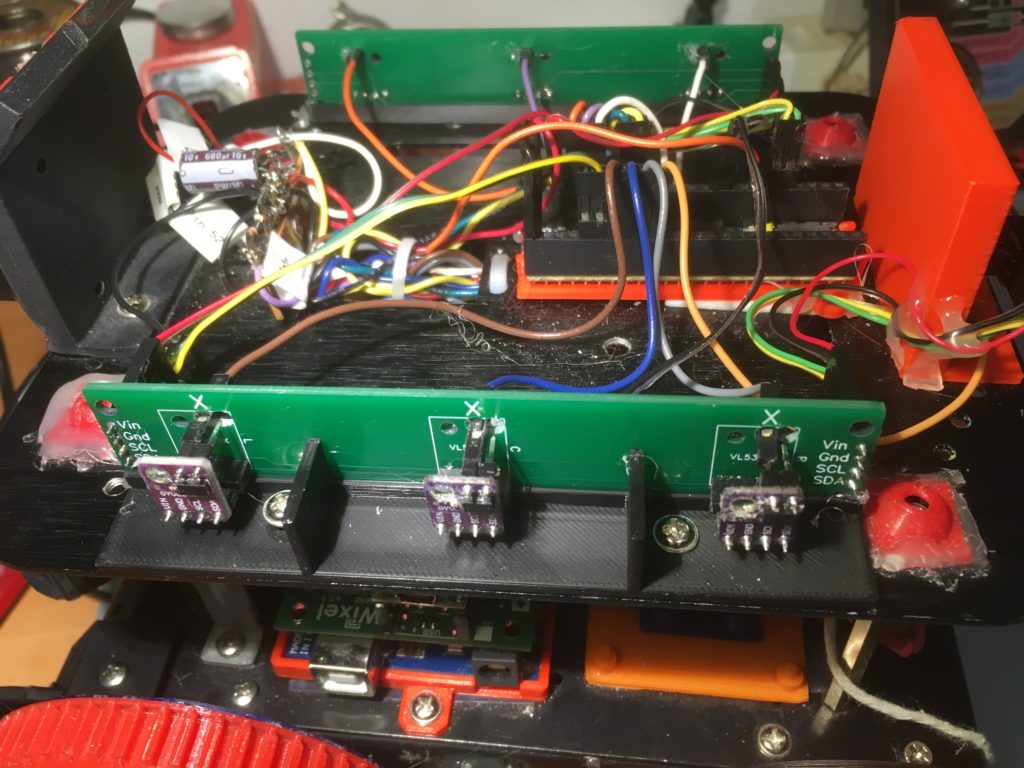

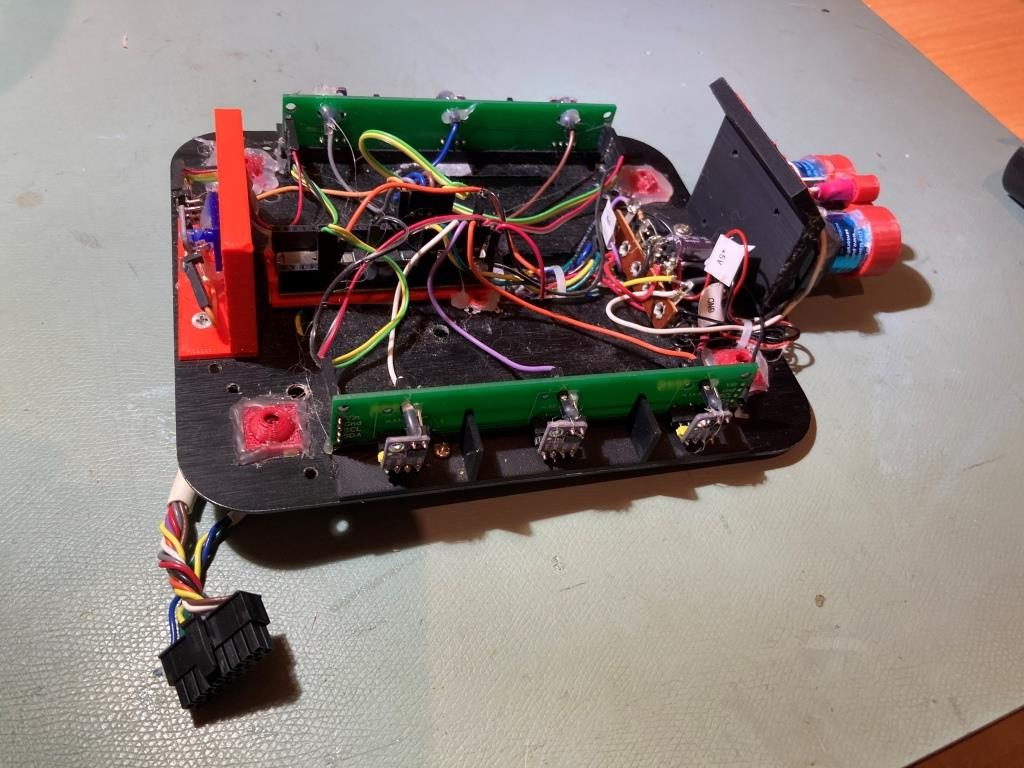

In my last post on this subject I described my effort to get IR Homing functional on my new Wall-E3 robot. This post documents the process of porting the ‘second deck’ from Wall-E2 over to Wall-E3. The second deck from Wall-E2 houses the forward-looking PulsedLight (acquired by Garmin in 2015) LIDAR system, the two side-looking VL53L0X arrays, and a rear-looking VL53L0X, as shown in the photo below.

The second deck connects to the main system via the 16-pin Amp connector visible in the bottom left-hand corner of the photo above.

Looking at the code, the only pin assignments associated with ‘second deck’ functionality are:

- const int RED_LASER_DIODE_PIN = 5;//Laser pointer

- const int LIDAR_MODE_PIN = 2; //LIDAR MODE pin (continuous mode)

- const int VL53L0X_TEENSY_RESET_PIN = 4; //pulled low for 1 mSec in Setup()
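The reset pin comment above says the line is "pulled low for 1 mSec in Setup()". A minimal sketch of what such a pulse might look like follows, assuming the reset line is active-low and is driven high again afterwards (the post doesn't show the actual code). The `pulseResetLow()` helper is a hypothetical name, and the stub section exists only so the snippet also compiles off-target.

```cpp
#include <cassert>
#include <cstddef>

#ifdef ARDUINO
#include <Arduino.h>
#else
// Host-side stand-ins (assumption: for illustration only, not a real library).
// They record what would have happened on the real pins.
const int OUTPUT = 1;
const int LOW = 0;
const int HIGH = 1;
struct PinEvent { int pin; int level; };
static PinEvent pinLog[8];              // digitalWrite() calls, in order
static std::size_t pinLogCount = 0;
static unsigned long delayedMs = 0;     // total time spent in delay()
void pinMode(int, int) {}
void digitalWrite(int pin, int level)
{
    if (pinLogCount < 8) pinLog[pinLogCount++] = PinEvent{pin, level};
}
void delay(unsigned long ms) { delayedMs += ms; }
#endif

const int VL53L0X_TEENSY_RESET_PIN = 4; // pulled low for 1 mSec in setup()

// Hypothetical helper: hold an active-low reset line low, then release it.
void pulseResetLow(int pin, unsigned long lowMs)
{
    pinMode(pin, OUTPUT);
    digitalWrite(pin, LOW);   // assert reset
    delay(lowMs);             // hold for the requested time
    digitalWrite(pin, HIGH);  // release reset (assumed; not shown in the post)
}
```

In `setup()` this would be called as `pulseResetLow(VL53L0X_TEENSY_RESET_PIN, 1);` before trying to talk to the second-deck Teensy.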

To start the process, I ported the following sections from FourWD_WAllE2_V12.ino::setup() to T35_WallE3::setup():

- The code to reset the VL53L0X Teensy on the second deck

- The code to initialize the above output pins.

- The entire #pragma region VL53L0X_TEENSY section

- The entire #ifdef DISTANCES_ONLY section

- The entire #pragma region L/R/FRONT DISTANCE ARRAYS section

That should take care of all new declarations and initializations associated with the second deck. And wonder of wonders, the T35_WallE3_V5 project compiled without errors! – time to quit for the night! 🙂

16 January 2022:

Well, of course when I connected up the second deck – nothing worked, so back to basic troubleshooting. First, I connected a USB cable directly to the T3.5 running the VL53L0X array, and determined that I can, in fact, see valid distance values from all seven sensors – yay! Now to determine why I can’t see them from the main processor.

Next, I loaded a basic I2C scanner program onto the main processor to see if the main processor could see the VL53L0X processor on Wire1. The I2C scanner reported it could find the MPU6050 IMU module and the Teensy 3.2 IR homing beacon detection processor, but nothing else. After a few more seconds (and yet another face palm!) I realized that the I2C scanner program wasn’t finding the VL53L0X processor because it was only checking the Wire bus, not Wire1 or Wire2 – oops!

So, I modified the basic scanner so it would optionally check Wire1 & Wire2 in addition to Wire, as shown below:

```cpp
/*
    Name:       T35_BasicI2CScanner.ino
    Created:    1/6/2022 12:11:46 PM
    Author:     NEWXPS15\paynt

    01/16/22 Added code to scan all three I2C busses
*/

// -------------------------------------------------------------------------------------------
// I2C Bus Scanner
// -------------------------------------------------------------------------------------------
//
// This creates an I2C master device which will scan the address space and report all
// devices which ACK. It does not attempt to transfer data, it only reports which devices
// ACK their address.
//
// Pull the control pin low to initiate the scan. Result will output to Serial.
//
// This example code is in the public domain.
// -------------------------------------------------------------------------------------------

#include <i2c_t3.h>

#define WIRE1_AVAILABLE
#define WIRE2_AVAILABLE

uint8_t found, target, all;

void setup()
{
    pinMode(LED_BUILTIN, OUTPUT); // LED
    pinMode(12, INPUT_PULLUP); // pull pin 12 low to show ACK only results
    pinMode(11, INPUT_PULLUP); // pull pin 11 low for a more verbose result (shows both ACK and NACK)

    // Setup for Master mode, pins 18/19, external pullups, 400kHz, 10ms default timeout
    Wire.begin(I2C_MASTER, 0x00, I2C_PINS_18_19, I2C_PULLUP_INT, 400000); //using internal pullups also works!
    Wire.setDefaultTimeout(10000); // 10ms

#ifdef WIRE1_AVAILABLE
    Wire1.begin(I2C_MASTER, 0x00, I2C_PINS_37_38, I2C_PULLUP_INT, 400000); //using internal pullups also works!
    Wire1.setDefaultTimeout(10000); // 10ms
#endif

#ifdef WIRE2_AVAILABLE
    Wire2.begin(I2C_MASTER, 0x00, I2C_PINS_3_4, I2C_PULLUP_INT, 400000); //using internal pullups also works!
    Wire2.setDefaultTimeout(10000); // 10ms
#endif

    Serial.begin(115200);
    delay(2000);
    Serial.printf("Teensy Basic I2C Scanner\npull pin 12 low to show ACK only results\npull pin 11 low for a more verbose result (shows both ACK and NACK)\n");
}

void loop()
{
    // Scan I2C addresses
    //
    if (digitalRead(12) == LOW || digitalRead(11) == LOW)
    {
        all = (digitalRead(11) == LOW);
        found = 0;

        Serial.print("---------------------------------------------------\n");
        Serial.print("Starting scan on Wire bus...\n");
        digitalWrite(LED_BUILTIN, HIGH); // LED on
        for (target = 1; target <= 0x7F; target++) // sweep addr, skip general call
        {
            Wire.beginTransmission(target); // slave addr
            Wire.endTransmission(); // no data, just addr
            //print_scan_status(target, all);
            print_scan_status(Wire, target, all);
        }
        digitalWrite(LED_BUILTIN, LOW); // LED off

        if (!found) Serial.print("No devices found.\n");

        delay(500); // delay to space out tests

#ifdef WIRE1_AVAILABLE
        Serial.print("---------------------------------------------------\n");
        Serial.print("Starting scan on Wire1 bus...\n");
        found = 0;
        digitalWrite(LED_BUILTIN, HIGH); // LED on
        for (target = 1; target <= 0x7F; target++) // sweep addr, skip general call
        {
            Wire1.beginTransmission(target); // slave addr
            Wire1.endTransmission(); // no data, just addr
            //print_scan_status(target, all);
            print_scan_status(Wire1, target, all);
        }
        digitalWrite(LED_BUILTIN, LOW); // LED off

        if (!found) Serial.print("No devices found.\n");
#endif

#ifdef WIRE2_AVAILABLE
        Serial.print("---------------------------------------------------\n");
        Serial.print("Starting scan on Wire2 bus...\n");
        found = 0;
        digitalWrite(LED_BUILTIN, HIGH); // LED on
        for (target = 1; target <= 0x7F; target++) // sweep addr, skip general call
        {
            Wire2.beginTransmission(target); // slave addr
            Wire2.endTransmission(); // no data, just addr
            //print_scan_status(target, all);
            print_scan_status(Wire2, target, all);
        }
        digitalWrite(LED_BUILTIN, LOW); // LED off

        if (!found) Serial.print("No devices found.\n");
#endif

        delay(500); // delay to space out tests
    }
}

//01/16/22 rev to take wire object ref as an argument
void print_scan_status(i2c_t3& wire, uint8_t target, uint8_t all)
{
    //switch (Wire.status())
    switch (wire.status())
    {
    case I2C_WAITING:
        Serial.printf("Addr: 0x%02X ACK\n", target);
        found = 1;
        break;
    case I2C_ADDR_NAK:
        if (all) Serial.printf("Addr: 0x%02X\n", target);
        break;
    default:
        break;
    }
}
```

And here’s the output with all three I2C busses enabled.

```
Opening port
Port open
Teensy Basic I2C Scanner
pull pin 12 low to show ACK only results
pull pin 11 low for a more verbose result (shows both ACK and NACK)
---------------------------------------------------
Starting scan on Wire bus...
Addr: 0x08 ACK <<<< IR Homing Teensy
Addr: 0x68 ACK <<<< MPU6050 IMU
---------------------------------------------------
Starting scan on Wire1 bus...
Addr: 0x20 ACK <<<< VL53L0X Array Teensy
---------------------------------------------------
Starting scan on Wire2 bus...
No devices found.
```
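The scanner above repeats the same sweep once per bus. As a rough sketch (not the code I actually ran), the sweep could be factored into one helper that takes the bus by reference. This version assumes the Arduino Wire.h convention that `endTransmission()` returns 0 when the address is ACKed, rather than the i2c_t3 `status()` call used above. `scanBus()` and `FakeBus` are hypothetical names; `FakeBus` only stands in for a real bus so the logic can be checked on a PC.

```cpp
#include <cassert>
#include <cstdint>
#include <set>
#include <utility>
#include <vector>

// Probe every 7-bit address on one bus and return the addresses that ACK.
// Bus must offer beginTransmission(addr) and endTransmission(), where
// endTransmission() returns 0 on ACK (the Arduino Wire.h convention).
template <typename Bus>
std::vector<uint8_t> scanBus(Bus& bus)
{
    std::vector<uint8_t> found;
    for (uint8_t addr = 1; addr <= 0x7F; addr++)   // skip general call (0x00)
    {
        bus.beginTransmission(addr);               // slave addr
        if (bus.endTransmission() == 0)            // no data, just addr
        {
            found.push_back(addr);
        }
    }
    return found;
}

// Host-side stand-in for a bus with a fixed set of devices attached
// (assumption: for illustration only).
struct FakeBus
{
    std::set<uint8_t> devices;
    uint8_t current;

    explicit FakeBus(std::set<uint8_t> d) : devices(std::move(d)), current(0) {}

    void beginTransmission(uint8_t addr) { current = addr; }
    uint8_t endTransmission() { return devices.count(current) ? 0 : 2; } // 2 = NACK on address
};
```

On the Teensy the same template would be called once each with `Wire`, `Wire1` and `Wire2`, which keeps the three scans from drifting apart when one of them gets edited.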

So now that I know that the main processor can ‘see’ the VL53L0X Teensy on Wire1, I have to figure out why it’s not working properly. As usual, this turned out to be pretty simple once I knew what I was looking for. The entire solution was to change this line:

```cpp
Wire.beginTransmission(VL53L0X_I2C_SLAVE_ADDRESS);
```

To this line:

```cpp
Wire1.beginTransmission(VL53L0X_I2C_SLAVE_ADDRESS);
```

Wall-E2 used a Mega 2560 processor with only one I2C bus, so everything had to be on that one bus. However, when I changed to the Teensy 3.5, I had more busses available, so I chose to move the VL53L0X array manager to Wire1, but forgot to change the initialization code to use Wire1 vs Wire – oops!

18 January 2022 Update:

Or,….. Maybe not. When I tried to compile the above changes I started running into ‘#include file hell’. I couldn’t figure out whether to use #include <i2c_t3.h> or #include <Wire.h> and every time I changed the include in one file, it seemed to conflict with an earlier change in another – argggghhhhh!

So, I took my troubles to the Teensy forum and asked what the difference was between the ‘i2c_t3.h’ and ‘Wire’ libraries, particularly with respect to multiple I2C bus support. The answer I got from ‘defragster’ (a very experienced Teensy forum contributor) was:

Wire.h is the base i2c supplied and supported by PJRC. It covers all WIRE#’s on various Teensy models: See {local install}\hardware\teensy\avr\libraries\Wire\WireKinetis.h

i2c_t3.h is an alternative library that can be used to instead of WIRE.h when it works or offers some added feature or alternate method.

I took his post to mean “use Wire.h unless you have some specific need for a feature that i2c_t3.h offers and Wire.h doesn’t”.

So, now I have to go back through all the code that I have messed with over the years to make it work with multiple I2C buses (like ‘I2C_Anything’, for instance), and see what needs to be done to use ‘Wire.h’ in lieu of ‘i2c_t3.h’ as much as possible.

The main file for this project has the following #include’s that may require work:

```cpp
#include "MPU6050_6Axis_MotionApps_V6_12.h" //changed to this version 10/05/19
#include "I2Cdev.h" //02/19/19: this includes SBWire.h
#include "I2C_Anything.h" //needed for sending float data over I2C
#include "I2C_Anything1.h" //01/16/22 added for Wire1 bus
```

MPU6050_6Axis_MotionApps_V6_12.h: Right away I run into problems; the first thing this file does is #include “I2Cdev.h”, which has the following code:

```cpp
#ifdef ARDUINO
    #if ARDUINO < 100
        #include "WProgram.h"
    #else
        #include "Arduino.h"
    #endif
    #if I2CDEV_IMPLEMENTATION == I2CDEV_ARDUINO_WIRE
        //#include <Wire.h>
        #include <i2c_t3.h>
    #endif
    #if I2CDEV_IMPLEMENTATION == I2CDEV_I2CMASTER_LIBRARY
        #include <I2C.h>
    #endif
    #if I2CDEV_IMPLEMENTATION == I2CDEV_BUILTIN_SBWIRE
        #include "SBWire.h"
    #endif
#endif
```

Which I modified sometime in the distant past to use #include <i2c_t3.h> instead of #include <Wire.h>. At the time I think I was convinced that I had to use i2c_t3.h to get access to multiple I2C buses, but now I know that isn’t necessary. Fortunately this is a ‘local’ file, so changing this back shouldn’t (fingers crossed!) break other programs.

Aside: I did a search on MPU6050_6Axis_MotionApps_V6_12.h and I2Cdev.h in the Arduino folder, and got 48 different hits for MPU6050_6Axis_MotionApps_V6_12.h and 108 hits for ‘I2Cdev.h’ – ouch!! Not going to worry about this now, but I’m sure I’ll be dealing with this problem forever – if not longer 🙁

Aside2: The ‘I2Cdev.h’ file gets specialized to different hardware in I2CDevLib. For ‘Arduino’ hardware it is this file:

```cpp
// I2Cdev library collection - Main I2C device class header file
// Abstracts bit and byte I2C R/W functions into a convenient class
// 2013-06-05 by Jeff Rowberg <jeff@rowberg.net>
//
// Changelog:
//      2021-09-28 - allow custom Wire object as transaction function argument
//      2020-01-20 - hardija : complete support for Teensy 3.x
//      2015-10-30 - simondlevy : support i2c_t3 for Teensy3.1
//      2013-05-06 - add Francesco Ferrara's Fastwire v0.24 implementation with small modifications
//      2013-05-05 - fix issue with writing bit values to words (Sasquatch/Farzanegan)
//      2012-06-09 - fix major issue with reading > 32 bytes at a time with Arduino Wire
//                 - add compiler warnings when using outdated or IDE or limited I2Cdev implementation
//      2011-11-01 - fix write*Bits mask calculation (thanks sasquatch @ Arduino forums)
//      2011-10-03 - added automatic Arduino version detection for ease of use
//      2011-10-02 - added Gene Knight's NBWire TwoWire class implementation with small modifications
//      2011-08-31 - added support for Arduino 1.0 Wire library (methods are different from 0.x)
//      2011-08-03 - added optional timeout parameter to read* methods to easily change from default
//      2011-08-02 - added support for 16-bit registers
//                 - fixed incorrect Doxygen comments on some methods
//                 - added timeout value for read operations (thanks mem @ Arduino forums)
//      2011-07-30 - changed read/write function structures to return success or byte counts
//                 - made all methods static for multi-device memory savings
//      2011-07-28 - initial release

/* ============================================
I2Cdev device library code is placed under the MIT license
Copyright (c) 2013 Jeff Rowberg

Permission is hereby granted, free of charge, to any person obtaining a copy
of this software and associated documentation files (the "Software"), to deal
in the Software without restriction, including without limitation the rights
to use, copy, modify, merge, publish, distribute, sublicense, and/or sell
copies of the Software, and to permit persons to whom the Software is
furnished to do so, subject to the following conditions:

The above copyright notice and this permission notice shall be included in
all copies or substantial portions of the Software.

THE SOFTWARE IS PROVIDED "AS IS", WITHOUT WARRANTY OF ANY KIND, EXPRESS OR
IMPLIED, INCLUDING BUT NOT LIMITED TO THE WARRANTIES OF MERCHANTABILITY,
FITNESS FOR A PARTICULAR PURPOSE AND NONINFRINGEMENT. IN NO EVENT SHALL THE
AUTHORS OR COPYRIGHT HOLDERS BE LIABLE FOR ANY CLAIM, DAMAGES OR OTHER
LIABILITY, WHETHER IN AN ACTION OF CONTRACT, TORT OR OTHERWISE, ARISING FROM,
OUT OF OR IN CONNECTION WITH THE SOFTWARE OR THE USE OR OTHER DEALINGS IN
THE SOFTWARE.
===============================================
*/

#ifndef _I2CDEV_H_
#define _I2CDEV_H_

// -----------------------------------------------------------------------------
// Enable deprecated pgmspace typedefs in avr-libc
// -----------------------------------------------------------------------------
#define __PROG_TYPES_COMPAT__

// -----------------------------------------------------------------------------
// I2C interface implementation setting
// -----------------------------------------------------------------------------
#ifndef I2CDEV_IMPLEMENTATION
//#define I2CDEV_IMPLEMENTATION       I2CDEV_ARDUINO_WIRE
#define I2CDEV_IMPLEMENTATION       I2CDEV_TEENSY_3X_WIRE
//#define I2CDEV_IMPLEMENTATION       I2CDEV_BUILTIN_SBWIRE
//#define I2CDEV_IMPLEMENTATION       I2CDEV_BUILTIN_FASTWIRE
#endif // I2CDEV_IMPLEMENTATION

// comment this out if you are using a non-optimal IDE/implementation setting
// but want the compiler to shut up about it
#define I2CDEV_IMPLEMENTATION_WARNINGS

// -----------------------------------------------------------------------------
// I2C interface implementation options
// -----------------------------------------------------------------------------
#define I2CDEV_ARDUINO_WIRE         1 // Wire object from Arduino
#define I2CDEV_BUILTIN_NBWIRE       2 // Tweaked Wire object from Gene Knight's NBWire project
                                      // ^^^ NBWire implementation is still buggy w/some interrupts!
#define I2CDEV_BUILTIN_FASTWIRE     3 // FastWire object from Francesco Ferrara's project
#define I2CDEV_I2CMASTER_LIBRARY    4 // I2C object from DSSCircuits I2C-Master Library at https://github.com/DSSCircuits/I2C-Master-Library
#define I2CDEV_BUILTIN_SBWIRE       5 // I2C object from Shuning (Steve) Bian's SBWire Library at https://github.com/freespace/SBWire
#define I2CDEV_TEENSY_3X_WIRE       6 // Teensy 3.x support using i2c_t3 library

// -----------------------------------------------------------------------------
// Arduino-style "Serial.print" debug constant (uncomment to enable)
// -----------------------------------------------------------------------------
//#define I2CDEV_SERIAL_DEBUG

#ifdef ARDUINO
    #if ARDUINO < 100
        #include "WProgram.h"
    #else
        #include "Arduino.h"
    #endif
    #if I2CDEV_IMPLEMENTATION == I2CDEV_ARDUINO_WIRE
        #include <Wire.h>
    #endif
    #if I2CDEV_IMPLEMENTATION == I2CDEV_TEENSY_3X_WIRE
        #include <i2c_t3.h>
    #endif
    #if I2CDEV_IMPLEMENTATION == I2CDEV_I2CMASTER_LIBRARY
        #include <I2C.h>
    #endif
    #if I2CDEV_IMPLEMENTATION == I2CDEV_BUILTIN_SBWIRE
        #include "SBWire.h"
    #endif
#endif
```

Where it can be seen that sometime in the distant past, I modified the original library file to select ‘I2CDEV_TEENSY_3X_WIRE’, which has the effect of #include <i2c_t3.h> instead of #include <Wire.h> – oops! So, any project that uses the library version of this file will always #include <i2c_t3.h> instead of #include <Wire.h> – double, triple, and quadruple oops! In addition, I discovered that the version of I2Cdev.h I am using for this project is at least 6 years out of date – wonderful. (At least it looks like MPU6050_6Axis_MotionApps_V6_12.h is reasonably current; in fact, it is essentially identical to the library version.)

To start with, I made sure T35_WallE3_V5 compiles for T3.5 ‘as is’, and then modified the includes to use the library version of MPU6050_6Axis_MotionApps_V6_12.h. This actually worked (yay!), although I discovered I had to use the “file.h” format rather than <file.h> – don’t know why.

23 January 2022 Update:

As usual, there were a few detours on the way to full ‘second-deck’ functionality. To start with, I discovered/realized that I was using the wrong I2C library (i2c_t3.h) for working with multiple I2C busses. The i2c_t3.h library does a lot of nice things, including multiple I2C bus support, but unfortunately isn’t compatible with a lot of the hardware driver libraries I use, most of which assume you are using the ‘Wire.h’ library. I had ‘sort-of’ solved this problem with many of my Teensy projects by hacking the needed hardware driver libraries to use i2c_t3.h instead of Wire.h, but this got old pretty quickly, and even older when I started trying to use multiple I2C bus enabled hardware driver libraries (like Kurt E’s wonderful multiple bus MPU6050 driver).

So, I wound up spending way too many hours tracking down the differences and similarities between i2c_t3.h and the Teensy version of Wire.h. See this post for all the gory details. Along the way I learned how to simplify access to deeply buried library files using the Windows 10 flavor of symlinks, which was kinda cool, but I could probably have spent the time more wisely elsewhere. Also, I ran headlong into yet another multiple-bus problem with one of my favorite libraries – Nick Gammon’s wonderful ‘I2C_Anything’ library, which does just two things: it reads and writes ‘anything’ (ints, floats, unsigned long ints, doubles, whatever) across an I2C connection. No more worrying about how to do that, or being forced to transmit ASCII across the bus and construct/deconstruct as necessary on both ends. Unfortunately, this library is unabashedly single-bus; it expects to be used in a Wire.h environment and that is that. The good news is that by the time I was done with the question of ‘why use i2c_t3.h as opposed to Wire.h?’, I was able to intelligently (I hope) modify I2C_Anything.h to accommodate multiple I2C busses (though it still assumes a ‘Wire.h’ environment). At the end, I made a pull request to the I2C_Anything GitHub repo, so maybe others can use this as well.
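To give a flavor of what “multi-bus I2C_Anything” means, here is a minimal sketch of the idea. It is not the code from my pull request, and the argument order is just an assumption for illustration. The only change from the single-bus pattern is that the bus object is passed in instead of being hard-wired to `Wire`. It relies only on `write(const uint8_t*, size_t)` and `read()`, which the Arduino `TwoWire` class provides; `LoopbackBus` is a hypothetical stand-in so the round trip can be checked on a PC.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <deque>

// Write the raw bytes of 'value' to the given bus; returns the byte count.
template <typename Bus, typename T>
unsigned int I2C_writeAnything(Bus& bus, const T& value)
{
    bus.write(reinterpret_cast<const uint8_t*>(&value), sizeof(value));
    return sizeof(value);
}

// Fill 'value' byte-by-byte from the given bus; returns the byte count.
template <typename Bus, typename T>
unsigned int I2C_readAnything(Bus& bus, T& value)
{
    uint8_t* p = reinterpret_cast<uint8_t*>(&value);
    unsigned int i;
    for (i = 0; i < sizeof(value); i++)
    {
        *p++ = static_cast<uint8_t>(bus.read());
    }
    return i;
}

// Host-side stand-in: bytes written come back out of read() in order
// (assumption: for illustration only, not part of any real library).
struct LoopbackBus
{
    std::deque<uint8_t> fifo;

    std::size_t write(const uint8_t* data, std::size_t len)
    {
        fifo.insert(fifo.end(), data, data + len);
        return len;
    }

    int read()
    {
        if (fifo.empty()) return -1;   // same "nothing available" value Wire.read() uses
        uint8_t b = fifo.front();
        fifo.pop_front();
        return b;
    }
};
```

On the robot the calls would look like `I2C_writeAnything(Wire1, someValue)`. Both ends have to agree on the exact type of each value (a `double` on one side and a `float` on the other will not line up), since only raw bytes cross the bus.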

After all this was done, I still hadn’t even started on getting the second-deck VL53L0X sensors talking to the main Teensy 3.5 processor, but at least now I (kinda) knew what I was doing.

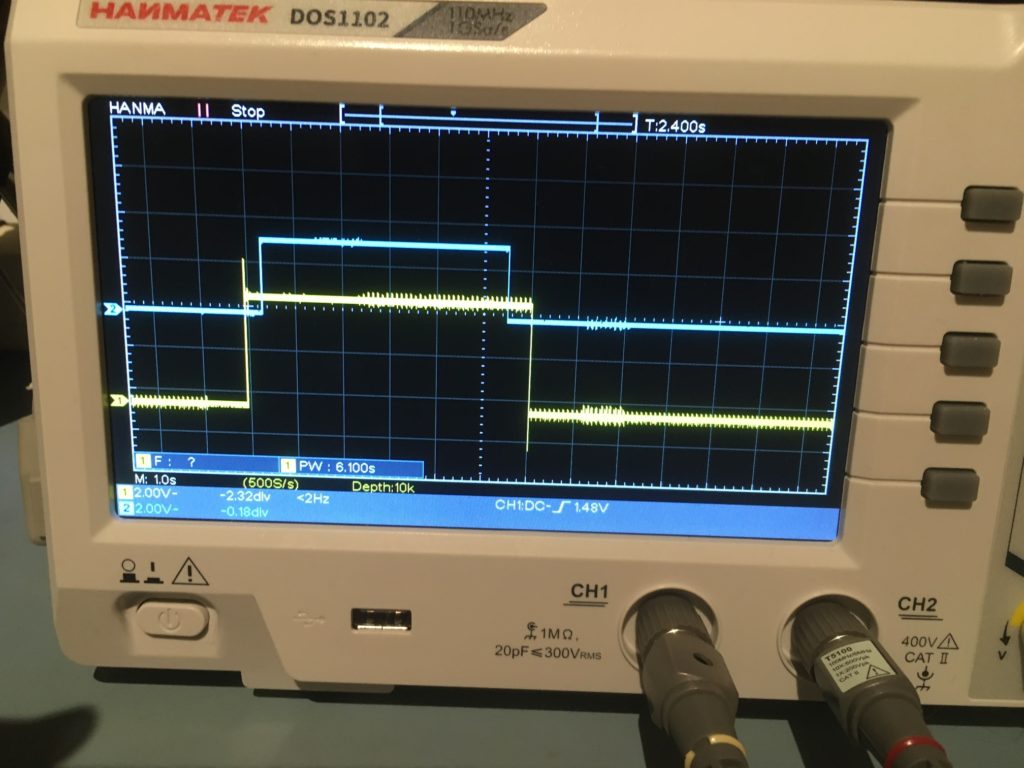

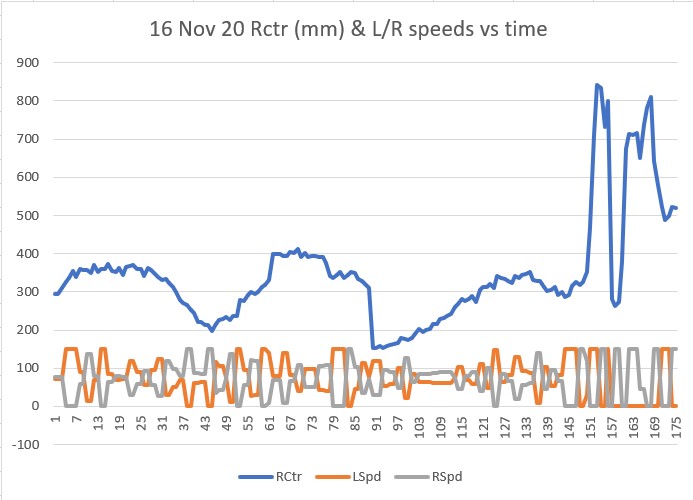

Anyhoo, once I got back to trying to get connected to the VL53L0X array, I was able to reasonably quickly revise both the main Teensy 3.5 processor program and the VL53L0X array management program to use Wire.h vs i2c_t3.h, and to instantiate/initialize the multiple busses required for both processors (the main processor talks to the VL53L0X array manager via Wire1 on its end to Wire0 on the array end, and the array manager talks to the seven VL53L0X modules via its Wire1 & Wire2 busses). And with my newly modified I2C_Anything library, I was ready to get them talking to each other.
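One thing worth checking before wiring the two ends together is how many bytes the main processor should expect for each request. As a small sketch: the array manager listing sends seven `uint16_t` distances and two steering values for a `VL53L0X_ALL` request, and it declares the steering values as `double`, while the debug-only `data_size` expression in the same listing uses `sizeof(float)`. The two helper names below are hypothetical and just total up the sizes both ways.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>

// Bytes actually written for a VL53L0X_ALL reply: LF, LC, LR, LeftSteeringVal,
// RF, RC, RR, Rear, RightSteeringVal. Each field is written separately, so
// there is no struct padding to account for.
constexpr std::size_t allReplyBytes()
{
    return 7 * sizeof(uint16_t) + 2 * sizeof(double);
}

// What the debug-only data_size expression in the listing computes.
constexpr std::size_t allReplyBytesAsFloat()
{
    return 7 * sizeof(uint16_t) + 2 * sizeof(float);
}
```

With an 8-byte `double` and a 4-byte `float` that is 30 versus 22 bytes. The `data_size` value only feeds commented-out debug prints, so nothing breaks on the array side, but the main processor has to read the steering values back as `double` or the fields after the first steering value will be misaligned.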

Here’s the working Array manager code (pardon the extra comment lines):

|


/* Name: Teensy_7VL53L0X_I2C_Slave_V3.ino Created: 1/24/2021 4:03:35 PM Author: FRANKNEWXPS15\Frank This is the 'final' version of the Teensy 3.5 project to manage all seven VL53L0X lidar distance sensors, three on left, three on right, and now including a rear distance sensor. As of this date it was an identical copy of the TeensyHexVL53L0XHighSpeedDemo project, just renamed for clarity 01/23/22 - revised to work properly with Teensy 3.5 main processor */ /* Name: TeensyHexVL53L0XHighSpeedDemo.ino Created: 10/1/2020 3:49:01 PM Author: FRANKNEWXPS15\Frank This demo program combines the code from my Teensy_VL53L0X_I2C_Slave_V2 project with the VL53L0X 'high speed' code from the Pololu support forum post at https://forum.pololu.com/t/high-speed-with-vl53l0x/16585/3 */ /* Name: Teensy_VL53L0X_I2C_Slave_V2.ino Created: 8/4/2020 7:42:22 PM Author: FRANKNEWXPS15\Frank This project merges KurtE's multi-wire capable version of Pololu's VL53L0X library with my previous Teensy_VL53L0X_I2C_Slave project, which used the Adafruit VL53L0X library. This (hopefully) will be the version uploaded to the Teensy on the 2nd deck of Wall-E2 and which controls the left & right 3-VL53L0X sensor arrays 01/17/22: Copied VL53L0X.h/cpp from C:\Users\paynt\Documents\Arduino\Libraries\vl53l0x-arduino-multi_wire so I won't have to guess which library folder these files came from 01/17/22: if you have a local file.h same as a library\xx\file.h, then if VMicro's 'deep search'option is enabled, must use #include "file.h" instead of #include <file.h>. This is the reason for using #include "VL53L0X.h" and #include "I2C_Anything.h" below 01/17/22 the local version of "I2C_Anything.h" uses '#include <i2c_t3.h>' vice <Wire.h> for multiple I2C buss availability. 
01/18/22 removed I2C_Anything.h & VL53L0X.h from project folder so can use library versions 01/22/22 added #ifdef NO_VL53L0X and assoc #ifndef blocks for debug */ #include <Wire.h> //01/18/22 Wire.h has multiple I2C bus access capability #include <VL53L0X.h> #include <I2C_Anything.h> #include "elapsedMillis.h" //#define NO_VL53L0X volatile uint8_t request_type; const int SETUP_DURATION_OUTPUT_PIN = 33; const int REQUEST_EVENT_DURATION_OUTPUT_PIN = 34; bool bTeensyReady = false; const int MSEC_BETWEEN_LED_TOGGLES = 100; elapsedMillis mSecBetweenLEDToggle; enum VL53L0X_REQUEST { VL53L0X_READYCHECK, //added 11/10/20 to prevent bad reads during Teensy setup() VL53L0X_CENTERS_ONLY, VL53L0X_RIGHT, VL53L0X_LEFT, VL53L0X_ALL, //added 08/05/20, chg 'BOTH' to 'ALL' 10/31/20 VL53L0X_REAR_ONLY //added 10/24/20 }; //right side array uint16_t RF_Dist_mm; uint16_t RC_Dist_mm; uint16_t RR_Dist_mm; //left side array uint16_t LF_Dist_mm; uint16_t LC_Dist_mm; uint16_t LR_Dist_mm; uint16_t Rear_Dist_mm; //float RightSteeringVal; //float LeftSteeringVal; double RightSteeringVal; double LeftSteeringVal; const int SLAVE_ADDRESS = 0x20; //just a guess for now const int DEFAULT_VL53L0X_ADDR = 0x29; //XSHUT required for I2C address init //right side array const int RF_XSHUT_PIN = 23; const int RC_XSHUT_PIN = 22; const int RR_XSHUT_PIN = 21; //left side array const int LF_XSHUT_PIN = 5; const int LC_XSHUT_PIN = 6; const int LR_XSHUT_PIN = 7; const int Rear_XSHUT_PIN = 8; const int MAX_LIDAR_RANGE_MM = 1000; //make it obvious when nearest object is out of range #ifndef NO_VL53L0X //right side array VL53L0X lidar_RF(&Wire1); VL53L0X lidar_RC(&Wire1); VL53L0X lidar_RR(&Wire1); //left side array VL53L0X lidar_LF(&Wire2); VL53L0X lidar_LC(&Wire2); VL53L0X lidar_LR(&Wire2); VL53L0X lidar_Rear(&Wire2); //added to Wire2 daisy-chain #endif // !NO_VL53L0X void setup() { bTeensyReady = false; //11/10/20 added to prevent bad data reads by main controller pinMode(SETUP_DURATION_OUTPUT_PIN, OUTPUT); 
pinMode(REQUEST_EVENT_DURATION_OUTPUT_PIN, OUTPUT); pinMode(LED_BUILTIN, OUTPUT); digitalWrite(SETUP_DURATION_OUTPUT_PIN, HIGH); Serial.begin(115200); // wait until serial port opens ... For 5 seconds max while (!Serial && millis() < 5000); Wire.begin(SLAVE_ADDRESS); //set Teensy Wire0 port up as slave Wire.onRequest(requestEvent); //ISR for I2C requests from master (Mega 2560) Wire.onReceive(receiveEvent); //ISR for I2C data from master (Mega 2560) Wire1.begin(); Wire2.begin(); Serial.printf("Teensy_7VL53L0X_I2C_Slave_V3\n"); #pragma region VL53L0X_INIT #ifndef NO_VL53L0X pinMode(RF_XSHUT_PIN, OUTPUT); pinMode(RC_XSHUT_PIN, OUTPUT); pinMode(RR_XSHUT_PIN, OUTPUT); pinMode(LF_XSHUT_PIN, OUTPUT); pinMode(LC_XSHUT_PIN, OUTPUT); pinMode(LR_XSHUT_PIN, OUTPUT); //Put all sensors in reset mode by pulling XSHUT low digitalWrite(RF_XSHUT_PIN, LOW); digitalWrite(RC_XSHUT_PIN, LOW); digitalWrite(RR_XSHUT_PIN, LOW); digitalWrite(LF_XSHUT_PIN, LOW); digitalWrite(LC_XSHUT_PIN, LOW); digitalWrite(LR_XSHUT_PIN, LOW); //added 10/23/20 for rear sensor pinMode(Rear_XSHUT_PIN, OUTPUT); digitalWrite(Rear_XSHUT_PIN, LOW); //now bring lidar_RF only out of reset and set it's address //input w/o pullups sets line to high impedance so XSHUT pullup to 3.3V takes over pinMode(RF_XSHUT_PIN, INPUT); delay(10); if (!lidar_RF.init()) { Serial.println("Failed to detect and initialize lidar_RF!"); while (1) {} } //from Pololu forum post lidar_RF.setMeasurementTimingBudget(20000); lidar_RF.startContinuous(); lidar_RF.setAddress(DEFAULT_VL53L0X_ADDR + 1); Serial.printf("lidar_RF address is 0x%x\n", lidar_RF.getAddress()); //now bring lidar_RC only out of reset, initialize it, and set its address //input w/o pullups sets line to high impedance so XSHUT pullup to 3.3V takes over pinMode(RC_XSHUT_PIN, INPUT); delay(10); if (!lidar_RC.init()) { Serial.println("Failed to detect and initialize lidar_RC!"); while (1) {} } //from Pololu forum post lidar_RC.setMeasurementTimingBudget(20000); 
    lidar_RC.startContinuous();
    lidar_RC.setAddress(DEFAULT_VL53L0X_ADDR + 2);
    Serial.printf("lidar_RC address is 0x%x\n", lidar_RC.getAddress());

    //now bring lidar_RR only out of reset, initialize it, and set its address
    //input w/o pullups sets line to high impedance so XSHUT pullup to 3.3V takes over
    pinMode(RR_XSHUT_PIN, INPUT);
    delay(10);
    if (!lidar_RR.init())
    {
        Serial.println("Failed to detect and initialize lidar_RR!");
        while (1) {}
    }

    //from Pololu forum post
    lidar_RR.setMeasurementTimingBudget(20000);
    lidar_RR.startContinuous();
    lidar_RR.setAddress(DEFAULT_VL53L0X_ADDR + 3);
    Serial.printf("lidar_RR address is 0x%x\n", lidar_RR.getAddress());

    //now bring lidar_LF only out of reset, initialize it, and set its address
    //input w/o pullups sets line to high impedance so XSHUT pullup to 3.3V takes over
    pinMode(LF_XSHUT_PIN, INPUT);
    delay(10);
    if (!lidar_LF.init())
    {
        Serial.println("Failed to detect and initialize lidar_LF!");
        while (1) {}
    }

    //from Pololu forum post
    lidar_LF.setMeasurementTimingBudget(20000);
    lidar_LF.startContinuous();
    lidar_LF.setAddress(DEFAULT_VL53L0X_ADDR + 4);
    Serial.printf("lidar_LF address is 0x%x\n", lidar_LF.getAddress());

    //now bring lidar_LC only out of reset, initialize it, and set its address
    //input w/o pullups sets line to high impedance so XSHUT pullup to 3.3V takes over
    pinMode(LC_XSHUT_PIN, INPUT);
    delay(10);
    if (!lidar_LC.init())
    {
        Serial.println("Failed to detect and initialize lidar_LC!");
        while (1) {}
    }

    //from Pololu forum post
    lidar_LC.setMeasurementTimingBudget(20000);
    lidar_LC.startContinuous();
    lidar_LC.setAddress(DEFAULT_VL53L0X_ADDR + 5);
    Serial.printf("lidar_LC address is 0x%x\n", lidar_LC.getAddress());

    //now bring lidar_LR only out of reset, initialize it, and set its address
    //input w/o pullups sets line to high impedance so XSHUT pullup to 3.3V takes over
    pinMode(LR_XSHUT_PIN, INPUT);
    if (!lidar_LR.init())
    {
        Serial.println("Failed to detect and initialize lidar_LR!");
        while (1) {}
    }

    //from Pololu forum post
    lidar_LR.setMeasurementTimingBudget(20000);
    lidar_LR.startContinuous();
    lidar_LR.setAddress(DEFAULT_VL53L0X_ADDR + 6);
    Serial.printf("lidar_LR address is 0x%x\n", lidar_LR.getAddress());

    //added 10/23/20 for rear sensor
    //now bring lidar_Rear only out of reset, initialize it, and set its address
    //input w/o pullups sets line to high impedance so XSHUT pullup to 3.3V takes over
    pinMode(Rear_XSHUT_PIN, INPUT);
    if (!lidar_Rear.init())
    {
        Serial.println("Failed to detect and initialize lidar_Rear!");
        while (1) {}
    }

    //from Pololu forum post
    lidar_Rear.setMeasurementTimingBudget(20000);
    lidar_Rear.startContinuous();
    lidar_Rear.setAddress(DEFAULT_VL53L0X_ADDR + 7);
    Serial.printf("lidar_Rear address is 0x%x\n", lidar_Rear.getAddress());
#pragma endregion VL53L0X_INIT

    //Serial.printf("Msec\tLFront\tLCtr\tLRear\tRFront\tRCtr\tRRear\tLSteer\tRSteer\n");
    Serial.printf("Msec\tLFront\tLCtr\tLRear\tRFront\tRCtr\tRRear\tRear\tLSteer\tRSteer\n");
#endif // !NO_VL53L0X

    digitalWrite(SETUP_DURATION_OUTPUT_PIN, LOW);
    bTeensyReady = true; //11/10/20 added to prevent bad data reads by main controller
    mSecBetweenLEDToggle = 0;
}

void loop()
{
    if (mSecBetweenLEDToggle >= MSEC_BETWEEN_LED_TOGGLES)
    {
        mSecBetweenLEDToggle -= MSEC_BETWEEN_LED_TOGGLES;
        digitalToggle(LED_BUILTIN);
        Serial.printf("%lu\t%d\t%d\t%d\t%d\t%d\t%d\t%d\t%2.2f\t%2.2f\n",
            millis(), LF_Dist_mm, LC_Dist_mm, LR_Dist_mm, RF_Dist_mm, RC_Dist_mm, RR_Dist_mm,
            Rear_Dist_mm, LeftSteeringVal, RightSteeringVal);
    }

#ifndef NO_VL53L0X
    //from Pololu forum post
    RF_Dist_mm = lidar_RF.readRangeContinuousMillimeters();
    RC_Dist_mm = lidar_RC.readRangeContinuousMillimeters();
    RR_Dist_mm = lidar_RR.readRangeContinuousMillimeters();
    RightSteeringVal = (RF_Dist_mm - RR_Dist_mm) / 100.f; //rev 06/21/20 see PPalace post

    //from Pololu forum post
    LF_Dist_mm = lidar_LF.readRangeContinuousMillimeters();
    LC_Dist_mm = lidar_LC.readRangeContinuousMillimeters();
    LR_Dist_mm = lidar_LR.readRangeContinuousMillimeters();
    LeftSteeringVal = (LF_Dist_mm - LR_Dist_mm) / 100.f; //rev 06/21/20 see PPalace post

    //added 10/23/20 for rear sensor
    Rear_Dist_mm = lidar_Rear.readRangeContinuousMillimeters();
#endif // !NO_VL53L0X
}

// function that executes whenever data is requested by master
// this function is registered as an event, see setup()
void requestEvent()
{
    //Purpose: Send requested sensor data to the Mega controller via main I2C bus
    //Inputs:
    //  request_type = uint8_t value denoting type of data requested (from receiveEvent())
    //    0 = left & right center distances only
    //    1 = right side front, center and rear distances, plus steering value
    //    2 = left side front, center and rear distances, plus steering value
    //    3 = both side front, center and rear distances, plus both steering values
    //Outputs:
    //  Requested data sent to master
    //Notes:
    //  08/05/20 added VL53L0X_ALL request type to get both sides at once
    //  10/24/20 added VL53L0X_REAR_ONLY request type
    //  11/09/20 added write to pin for O'scope monitoring

    int data_size = 0;

    //DEBUG!!
    //Serial.printf("RequestEvent() with request_type = %d: VL53L0X Front/Center/Rear distances = %d, %d, %d\n",
    //  request_type, RF_Dist_mm, RC_Dist_mm, RR_Dist_mm);
    //DEBUG!!

    digitalWrite(LED_BUILTIN, HIGH);//added 01/22/22
    digitalWrite(REQUEST_EVENT_DURATION_OUTPUT_PIN, HIGH);

    switch (request_type)
    {
    case VL53L0X_READYCHECK: //added 11/10/20 to prevent bad reads during Teensy setup()
        Serial.printf("in VL53L0X_READYCHECK case at %lu with bTeensyReady = %d\n", millis(), bTeensyReady);
        I2C_writeAnything(bTeensyReady);
        break;

    case VL53L0X_CENTERS_ONLY:
        //DEBUG!!
        //data_size = 2*sizeof(uint16_t);
        //Serial.printf("Sending %d bytes LC_Dist_mm = %d, RC_Dist_mm = %d to master\n", data_size, LC_Dist_mm, RC_Dist_mm);
        //DEBUG!!
        I2C_writeAnything(RC_Dist_mm);
        I2C_writeAnything(LC_Dist_mm);
        break;

    case VL53L0X_RIGHT:
        //DEBUG!!
        //data_size = 3 * sizeof(uint16_t) + sizeof(float);
        //Serial.printf("Sending %d bytes RF/RC/RR/RS vals = %d, %d, %d, %3.2f to master\n",
        //  data_size, RF_Dist_mm, RC_Dist_mm, RR_Dist_mm, RightSteeringVal);
        //DEBUG!!
        I2C_writeAnything(RF_Dist_mm);
        I2C_writeAnything(RC_Dist_mm);
        I2C_writeAnything(RR_Dist_mm);
        I2C_writeAnything(RightSteeringVal);
        break;

    case VL53L0X_LEFT:
        //DEBUG!!
        //data_size = 3 * sizeof(uint16_t) + sizeof(float);
        //Serial.printf("Sending %d bytes LF/LC/LR/LS vals = %d, %d, %d, %3.2f to master\n",
        //  data_size, LF_Dist_mm, LC_Dist_mm, LR_Dist_mm, LeftSteeringVal);
        //DEBUG!!
        I2C_writeAnything(LF_Dist_mm);
        I2C_writeAnything(LC_Dist_mm);
        I2C_writeAnything(LR_Dist_mm);
        I2C_writeAnything(LeftSteeringVal);
        break;

    case VL53L0X_ALL:
        //Serial.printf("In VL53L0X_ALL case\n");
        //added 08/05/20 to get data from both sides at once
        //10/31/20 chg to VL53L0X_ALL & report all 7 sensor values

        //DEBUG!!
        //data_size = 3 * sizeof(uint16_t) + sizeof(float);
        data_size = 7 * sizeof(uint16_t) + 2 * sizeof(float);
        //Serial.printf("Sending %d bytes to master\n", data_size);
        //Serial.printf("%d bytes: %d\t%d\t%d\t%3.2f\t%d\t%d\t%d\t%d\t%3.2f\n",
        //  data_size, LF_Dist_mm, LC_Dist_mm, LR_Dist_mm, LeftSteeringVal,
        //  RF_Dist_mm, RC_Dist_mm, RR_Dist_mm, Rear_Dist_mm, RightSteeringVal);
        //DEBUG!!

        //left side
        I2C_writeAnything(LF_Dist_mm);
        I2C_writeAnything(LC_Dist_mm);
        I2C_writeAnything(LR_Dist_mm);
        I2C_writeAnything(LeftSteeringVal);

        //DEBUG!!
        //data_size = 3 * sizeof(uint16_t) + sizeof(float);
        //Serial.printf("Sending %d bytes RF/RC/RR/RS vals = %d, %d, %d, %3.2f to master\n",
        //  data_size, RF_Dist_mm, RC_Dist_mm, RR_Dist_mm, LeftSteeringVal);
        //Serial.printf(" right %d\t%d\t%d\t%d\t%2.3f\n",
        //RF_Dist_mm, RC_Dist_mm, RR_Dist_mm);
        //DEBUG!!

        //right side
        I2C_writeAnything(RF_Dist_mm);
        I2C_writeAnything(RC_Dist_mm);
        I2C_writeAnything(RR_Dist_mm);
        I2C_writeAnything(Rear_Dist_mm);
        I2C_writeAnything(RightSteeringVal);
        break;

    case VL53L0X_REAR_ONLY:
        //DEBUG!!
        //data_size = sizeof(uint16_t);
        //Serial.printf("Sending %d bytes Rear_Dist_mm = %d to master\n", data_size, Rear_Dist_mm);
        //DEBUG!!
        I2C_writeAnything(Rear_Dist_mm);
        break;

    default:
        break;
    }

    digitalWrite(REQUEST_EVENT_DURATION_OUTPUT_PIN, LOW);
    digitalWrite(LED_BUILTIN, LOW);//added 01/22/22
}

//
// handle Rx Event (incoming I2C data)
//
void receiveEvent(int numBytes)
{
    //Purpose: capture data request type from Mega I2C master
    //Inputs:
    //  numBytes = int value denoting number of bytes received from master
    //Outputs:
    //  uint8_t request_type filled with request type value from master

    //DEBUG!!
    //Serial.printf("receiveEvent(%d)\n", numBytes);
    //DEBUG!!

    I2C_readAnything(request_type);

    //DEBUG!!
    //Serial.printf("receiveEvent: Got %d from master\n", request_type);
    //DEBUG!!
}
|
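To make the `VL53L0X_ALL` payload a bit more concrete, here is a minimal host-side sketch of the byte layout that `requestEvent()` sends for that request type: seven `uint16_t` distances plus two `float` steering values, 22 bytes in all. This is plain C++ rather than Teensy code. `ByteBuffer`, `writeAnything()`, `readAnything()`, `AllDistances`, `packAll()` and `unpackAll()` are hypothetical stand-ins I made up for illustration (they only mimic the byte-copy idea behind the `I2C_writeAnything`/`I2C_readAnything` helpers), and it assumes the distance variables are `uint16_t`, as the commented-out `data_size` lines suggest.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <cstring>
#include <vector>

// Hypothetical stand-in for the I2C transmit buffer (not part of the Wire API).
using ByteBuffer = std::vector<uint8_t>;

// Append the raw bytes of a value, mimicking an I2C_writeAnything-style helper.
template <typename T>
void writeAnything(ByteBuffer& buf, const T& value) {
    const uint8_t* p = reinterpret_cast<const uint8_t*>(&value);
    buf.insert(buf.end(), p, p + sizeof(T));
}

// Copy the next sizeof(T) bytes out of the buffer, mimicking I2C_readAnything.
template <typename T>
void readAnything(const ByteBuffer& buf, std::size_t& offset, T& value) {
    assert(offset + sizeof(T) <= buf.size());
    std::memcpy(&value, buf.data() + offset, sizeof(T));
    offset += sizeof(T);
}

// Illustrative container; field order matches the VL53L0X_ALL case above.
struct AllDistances {
    uint16_t LF, LC, LR;
    float leftSteer;
    uint16_t RF, RC, RR, rear;
    float rightSteer;
};

// Same arithmetic as loop(): the uint16_t operands promote to int (32-bit here,
// as on the Teensy), so a negative difference survives the subtraction.
float steeringVal(uint16_t front_mm, uint16_t rear_mm) {
    return (front_mm - rear_mm) / 100.f;
}

// Slave side: write the fields in the order requestEvent() sends them.
ByteBuffer packAll(const AllDistances& d) {
    ByteBuffer buf;
    writeAnything(buf, d.LF);
    writeAnything(buf, d.LC);
    writeAnything(buf, d.LR);
    writeAnything(buf, d.leftSteer);
    writeAnything(buf, d.RF);
    writeAnything(buf, d.RC);
    writeAnything(buf, d.RR);
    writeAnything(buf, d.rear);
    writeAnything(buf, d.rightSteer);
    return buf;
}

// Master side: the reads have to follow exactly the same order.
AllDistances unpackAll(const ByteBuffer& buf) {
    AllDistances d{};
    std::size_t offset = 0;
    readAnything(buf, offset, d.LF);
    readAnything(buf, offset, d.LC);
    readAnything(buf, offset, d.LR);
    readAnything(buf, offset, d.leftSteer);
    readAnything(buf, offset, d.RF);
    readAnything(buf, offset, d.RC);
    readAnything(buf, offset, d.RR);
    readAnything(buf, offset, d.rear);
    readAnything(buf, offset, d.rightSteer);
    return d;
}
```

The point of the sketch is that nothing in the byte stream labels the fields, so the master has to read them back in exactly the order the slave wrote them, and has to request the same number of bytes that `data_size` works out to.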

And here’s the working main processor code. Note that the code as presented here has the ‘DISTANCES_ONLY’ define enabled, and all hardware modules that aren’t installed yet are ‘#defined’ out so I could concentrate on just the VL53L0X array connection.

|
