Posted 14 May 2021

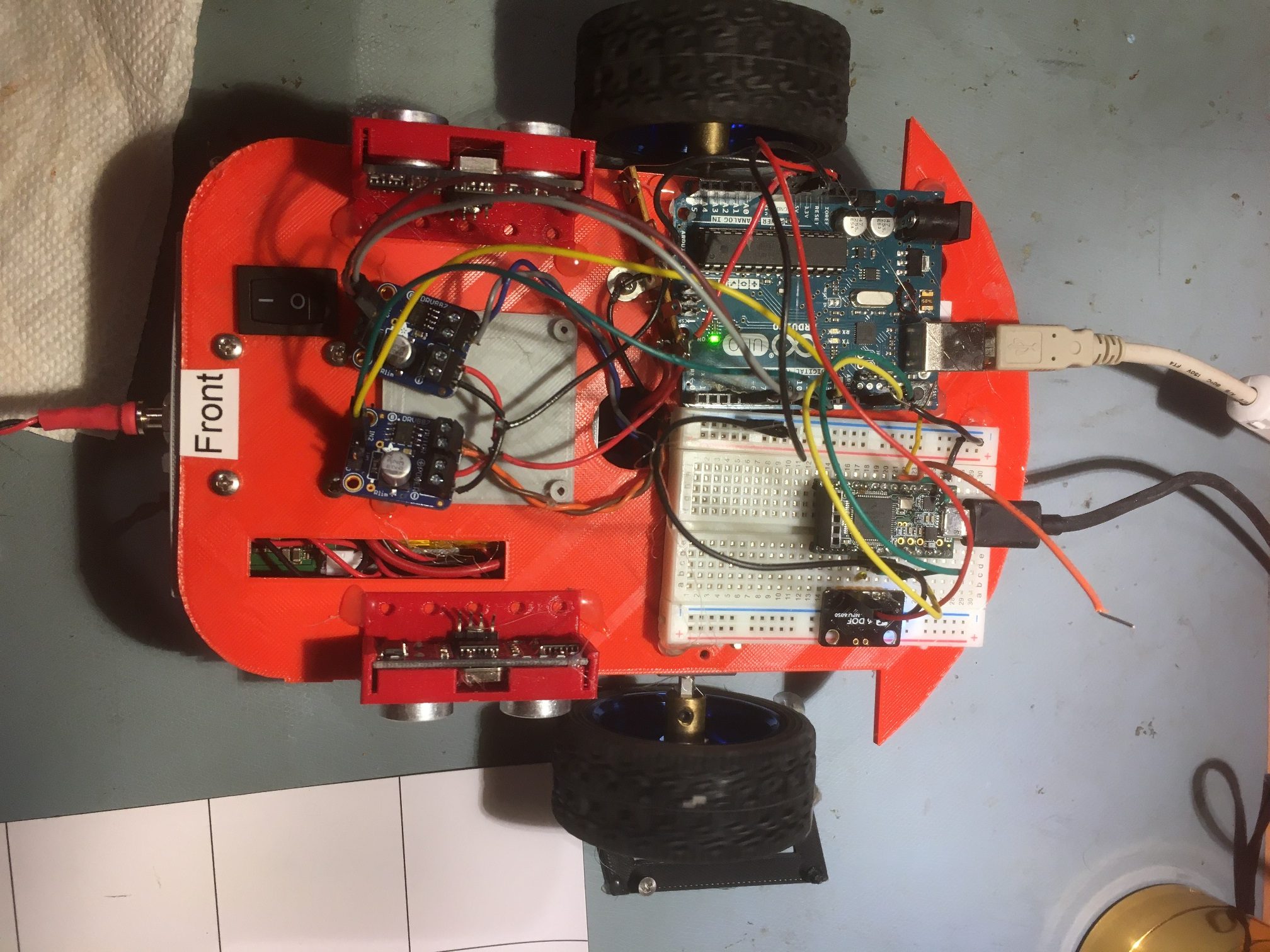

In my previous post on this topic, I described my efforts to use the Arduino PID library to manage turns with Wall-E2, my autonomous wall-following robot. This post describes a problem I encountered when the PID library is used in a system with an external timing source (like the TIMER5 ISR in my system) and a PID input that depends on accurate timing (such as my turn-rate input).

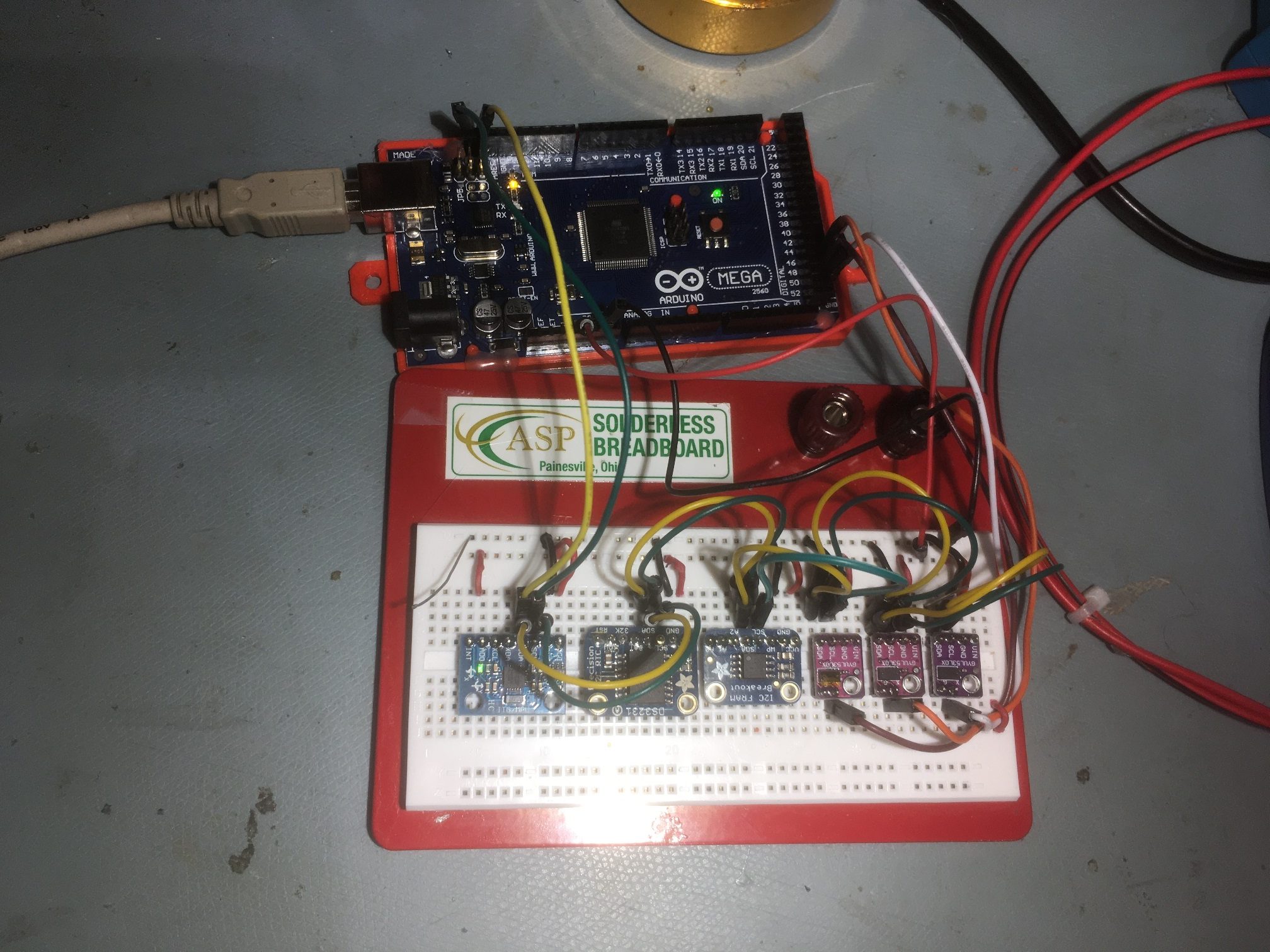

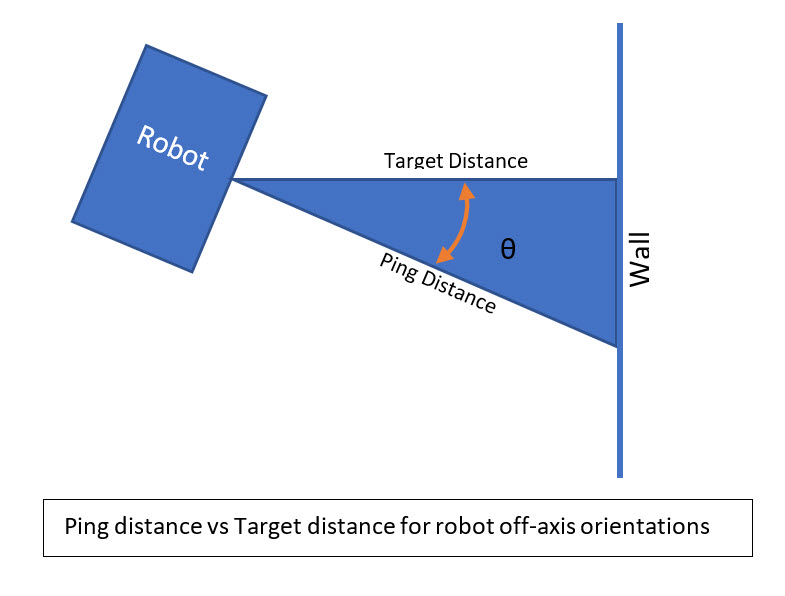

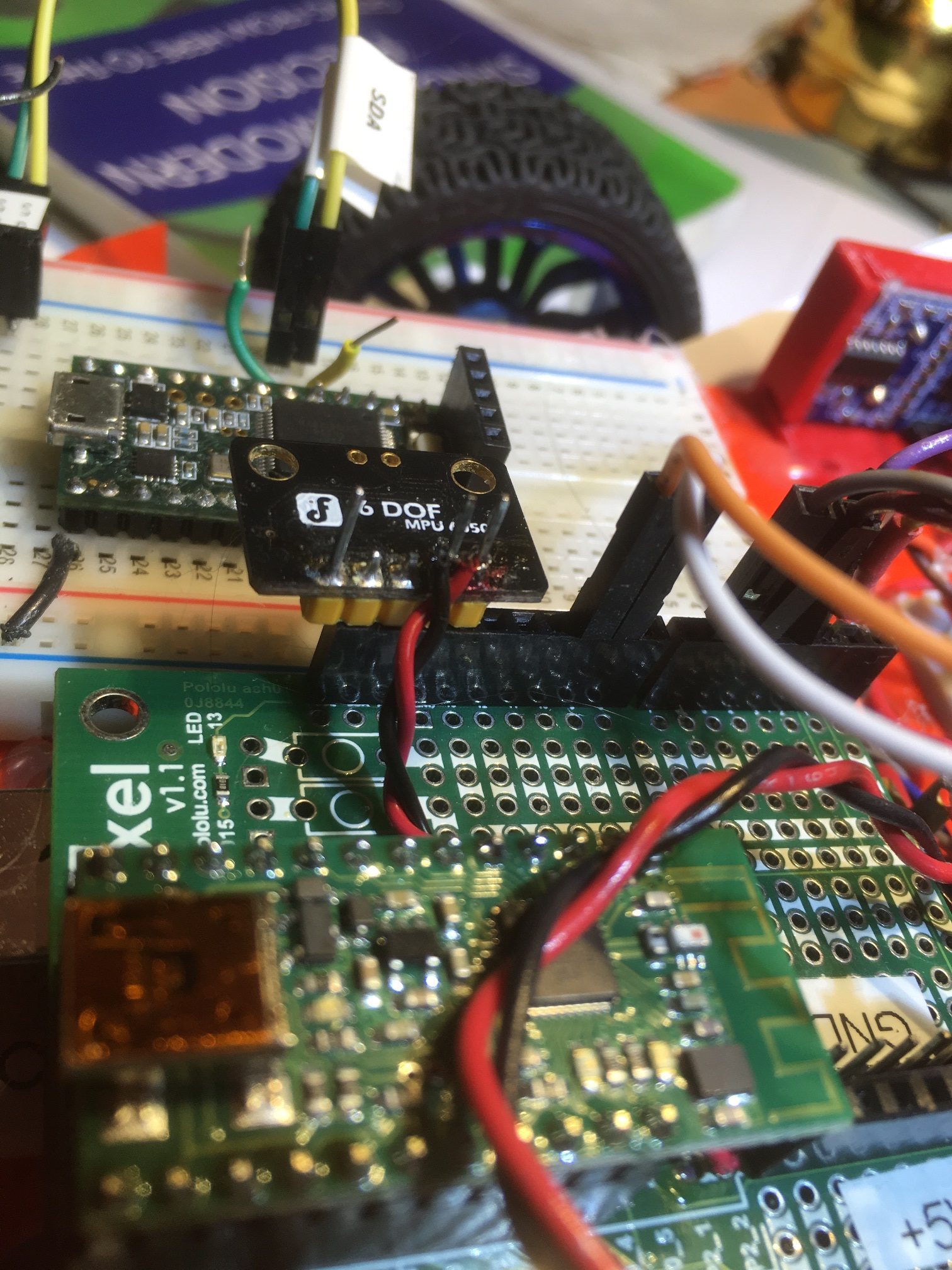

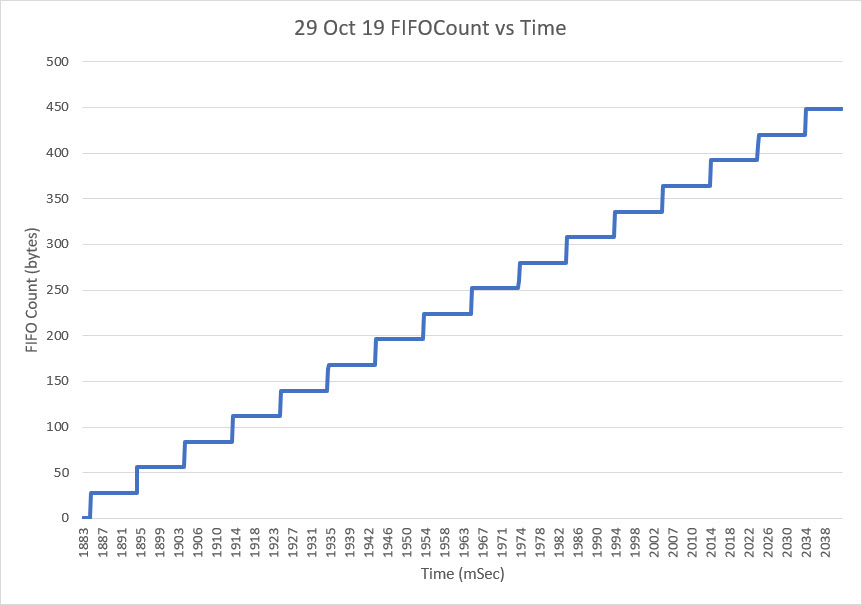

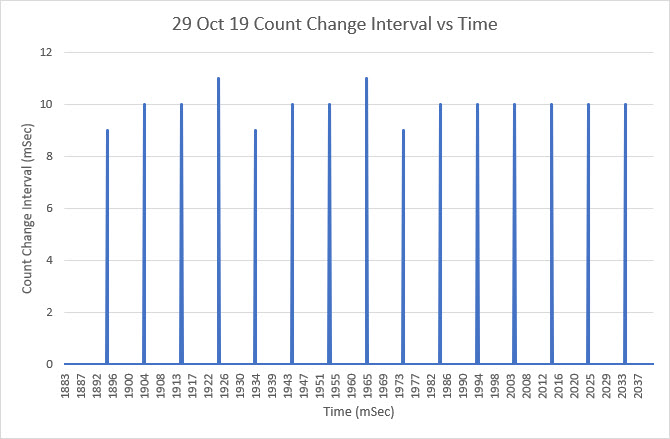

In my autonomous wall-following robot project, I use TIMER5 on the Arduino Mega 2560 to generate an interrupt every 100 mSec, and update all time-sensitive parameters in the ISR. These include results from all seven VL53L0X ToF distance sensors, the front-mounted LIDAR, and heading information from an MPU6050 IMU. This simplifies the software immensely, as the latest information is now available throughout the code, and it confines all sensor-related calls to a single routine.

In my initial efforts at turn-rate tuning using the Arduino PID library, I computed the turn rate in the ISR by simply using

```cpp
//deg/sec for a 100 mSec interval; appropriately adjusted for 0-180 transitions, etc.
turn_rate = 10 * (curr_hdg - prev_hdg);
```

This actually worked because the ISR frequency and the PID::Compute() frequency were more or less the same. However, since the two time intervals are independent of each other, there could be a phase shift between them, which might drift slowly over time. Also, if either timer interval is changed sometime down the road, the system behavior could change dramatically. I thought I had figured out how to handle this issue by moving the turn-rate computation inside the PID::Compute() function block, as shown below.

In a typical PID use case, you see code like the following:

```cpp
if (myPID.Compute())
{
    //compute the new turn rate
    //use the PID output term to control something
    ...
    ...
}
```

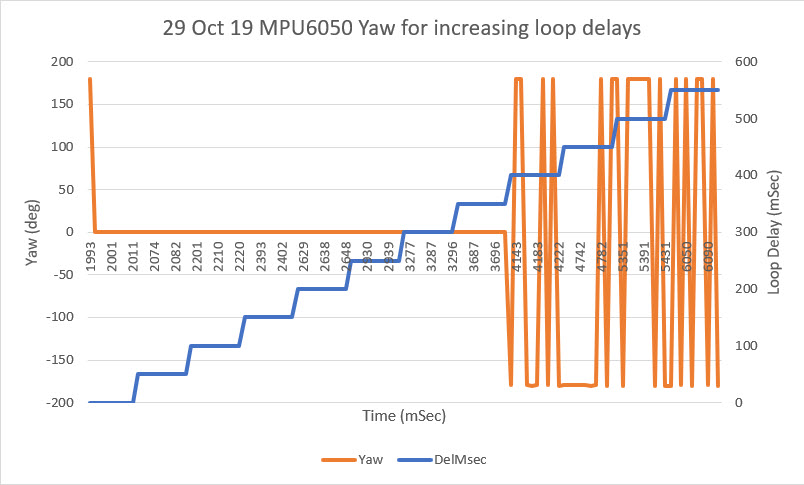

After making the above change, I started getting really weird behavior, and all my efforts at PID tuning failed miserably. After a LOT of troubleshooting and head-scratching, I finally figured out what was happening. In the above code configuration, the PID generates a new output value BEFORE the new turn rate is computed, so the PID is always operating on information that is at least 100mSec old – not a good way to run a railroad!
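The ordering problem can be illustrated off-target with a small simulation. This is only a sketch: `InputsSeen` is a hypothetical name, and the `push_back` call stands in for Compute() reading its input variable; no real PID math is involved.

```cpp
#include <vector>

// Hypothetical illustration: records which measurement (by cycle number) the
// controller would read on each cycle, depending on whether the input is
// refreshed before or after the Compute() stand-in.
std::vector<int> InputsSeen(bool update_before_compute, int cycles)
{
    std::vector<int> seen;
    int input = 0; // measurement currently held in the PID input variable

    for (int cycle = 1; cycle <= cycles; ++cycle)
    {
        if (update_before_compute)
        {
            input = cycle; // fresh measurement taken first
        }

        seen.push_back(input); // stand-in for Compute() reading its input

        if (!update_before_compute)
        {
            input = cycle; // measurement refreshed too late for this cycle
        }
    }
    return seen;
}
```

With the update placed after the Compute() stand-in, the controller sees measurements 0, 1, 2 on cycles 1, 2, 3, which is always one cycle stale. With the update placed first, it sees 1, 2, 3.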

Some of the PID documentation I researched said (or at least implied) that by setting the PID’s sample time to zero using PID::SetSampleTime(0), Compute() would actually produce a new output value every time it was called. This meant that I could do something like the following:

```cpp
if (bTimeForNavUpdate) //set true in ISR
{
    bTimeForNavUpdate = false;

    //4/28/21 now time interval is constant at ~100mSec
    //11/14/20 need to handle -179 to +179 transition
    float deltaDeg = IMUHdgValDeg - prev_hdg;
    deltaDeg = (deltaDeg > 180) ? deltaDeg - 360 : deltaDeg;
    deltaDeg = (deltaDeg < -180) ? deltaDeg + 360 : deltaDeg;
    TurnRateVal = 10 * abs(deltaDeg); //now time interval is constant 1/10 sec

    TurnRatePID.Compute(); //04/10/21 SampleTime == 0 so now this updates every time
    SetLeftMotorDirAndSpeed(!b_ccw, TurnRateOutput + MOTOR_SPEED_HALF);
    SetRightMotorDirAndSpeed(b_ccw, TurnRateOutput + MOTOR_SPEED_HALF);

    prev_hdg = IMUHdgValDeg;
}
```
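The wraparound handling and the fixed factor of 10 in the snippet above could be pulled into small helpers that take the update interval as a parameter, so the rate stays in deg/sec if the interval ever changes. This is a minimal sketch, not code from the robot: `HeadingDeltaDeg` and `TurnRateDegPerSec` are hypothetical names, and headings are assumed to lie in the -180 to +180 degree range.

```cpp
#include <cmath>

// Hypothetical helper: smallest signed heading change in degrees, assuming
// both headings are reported in the range -180..+180.
float HeadingDeltaDeg(float curr_hdg, float prev_hdg)
{
    float delta = curr_hdg - prev_hdg;
    if (delta > 180.0f)
    {
        delta -= 360.0f; // crossed from about -179 to about +179
    }
    if (delta < -180.0f)
    {
        delta += 360.0f; // crossed from about +179 to about -179
    }
    return delta;
}

// Hypothetical helper: unsigned turn rate in deg/sec for a measurement
// interval given in mSec (100 mSec gives the factor of 10 used above).
float TurnRateDegPerSec(float curr_hdg, float prev_hdg, float interval_msec)
{
    return std::fabs(HeadingDeltaDeg(curr_hdg, prev_hdg)) * 1000.0f / interval_msec;
}
```

For example, a heading change from 1 to 10 degrees over 100 mSec works out to 90 deg/sec, and a step from +179 to -179 counts as a 2 degree change rather than 358.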

Great idea, but it didn’t work! After some more troubleshooting and head-scratching, I finally realized that the PID::SetSampleTime() function specifically disallows a value of zero, as it would cause the ‘D’ term to go to infinity – oops! Here’s the relevant code:

```cpp
void PID::SetSampleTime(int NewSampleTime)
{
    if (NewSampleTime > 0)
    {
        double ratio = (double)NewSampleTime / (double)SampleTime;
        ki *= ratio;
        kd /= ratio;
        SampleTime = (unsigned long)NewSampleTime;
    }
}
```

As can be seen from the above, an argument of zero is simply ignored, and the sample time remains unchanged. When I pointed this out to the developer, he said this was by design, as the ‘ratio’ calculation above would be undefined for an input argument of zero. This is certainly a valid point, but makes it impossible to synch the PID to an external master clock – bummer!
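The effect of that guard is easy to check off-target. The sketch below copies only the guard logic from the function quoted above into a stand-in struct; `SampleTimeGuardDemo` is a hypothetical name, and the starting values (100 mSec, ki = 0.5, kd = 0.1) are assumptions chosen for illustration.

```cpp
// Hypothetical stand-in that reproduces only the sample-time guard quoted
// above. The initial values are illustrative assumptions.
struct SampleTimeGuardDemo
{
    unsigned long SampleTime = 100; // mSec
    double ki = 0.5;
    double kd = 0.1;

    void SetSampleTime(int NewSampleTime)
    {
        if (NewSampleTime > 0) // zero (or negative) falls through untouched
        {
            double ratio = (double)NewSampleTime / (double)SampleTime;
            ki *= ratio;
            kd /= ratio;
            SampleTime = (unsigned long)NewSampleTime;
        }
    }
};
```

Calling `SetSampleTime(0)` on this stand-in leaves `SampleTime` at 100 and the gains unchanged, while `SetSampleTime(200)` doubles `ki`, halves `kd`, and stores 200.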

After some more thought, I modified my copy of PID.cpp as follows:

```cpp
void PID::SetSampleTime(int NewSampleTime)
{
    Serial.println("In PID::SetSampleTime with NewSampleTime = ");
    Serial.println(NewSampleTime);
    if (NewSampleTime > 0)
    {
        double ratio = (double)NewSampleTime / (double)SampleTime;
        ki *= ratio;
        kd /= ratio;
        //SampleTime = (unsigned long)NewSampleTime;
    }

    SampleTime = (unsigned long)NewSampleTime;
}
```

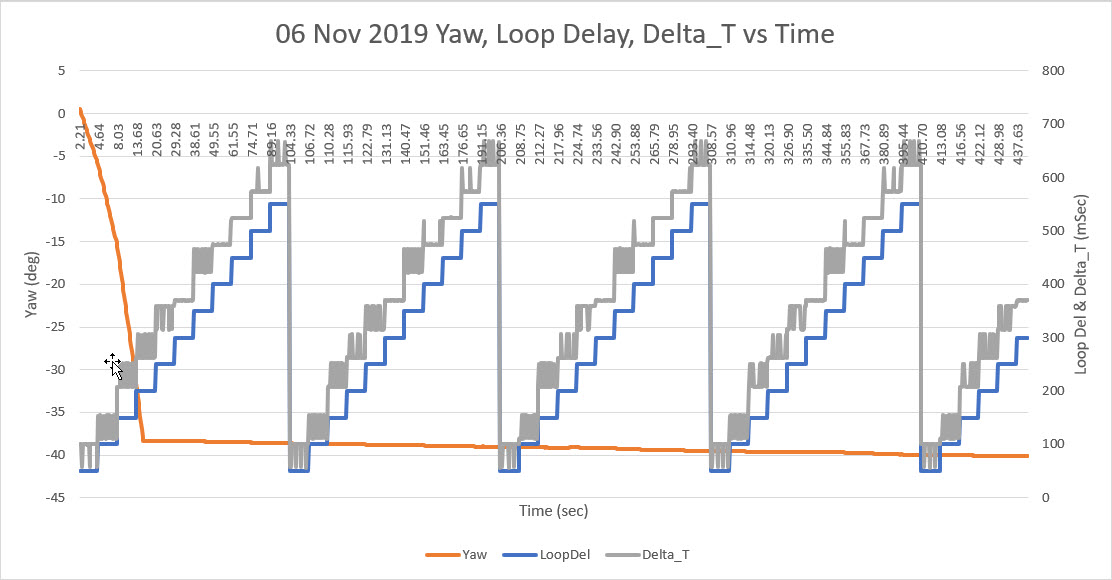

By moving the SampleTime = (unsigned long)NewSampleTime; line out of the ‘if’ block, I can now set the sample time to zero without causing problems with the value of ‘ratio’. Now PID::Compute() will generate a new output value every time it is called, which synchs the PID engine with the program’s master timing source – yay!
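Why a zero sample time makes every call count can be sketched with a stand-in for the elapsed-time check. This assumes, as the behavior described above implies, that Compute() only calculates a new output once the time since the last calculation is at least the sample time; `ComputeWouldRun` is a hypothetical name, not a library function.

```cpp
// Hypothetical stand-in for the elapsed-time gate assumed to sit at the top
// of Compute(). Returns true when a new output would be calculated.
bool ComputeWouldRun(unsigned long now_msec,
                     unsigned long last_msec,
                     unsigned long sample_time_msec)
{
    unsigned long timeChange = now_msec - last_msec;
    return timeChange >= sample_time_msec; // always true when sample time is 0
}
```

With a 100 mSec sample time, a call 40 mSec after the previous calculation is skipped; with a sample time of zero the same call goes through. One thing to watch with the modified SetSampleTime() shown above: once the stored sample time is zero, a later call with a positive argument divides by that zero when computing ‘ratio’, so it is probably safest to set the tunings first and call SetSampleTime(0) last, as the functions later in this post do.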

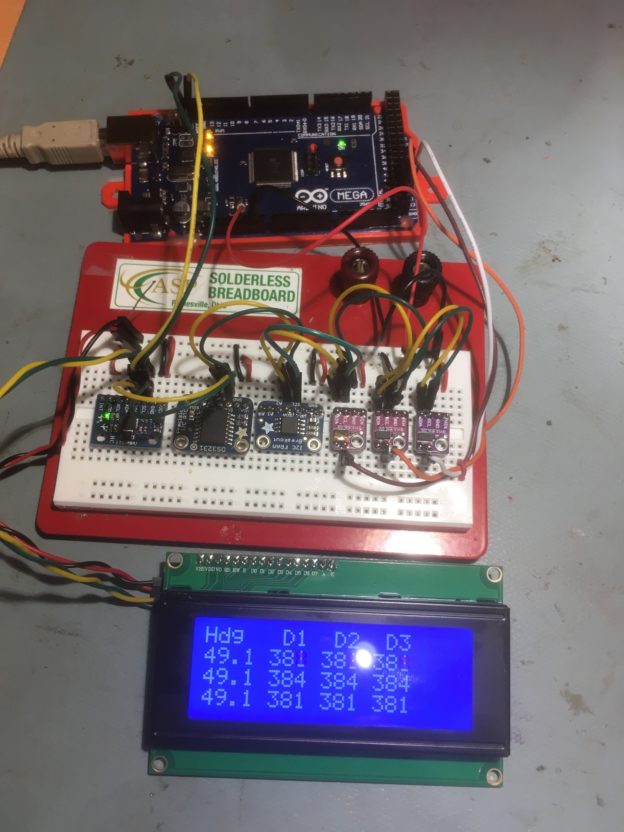

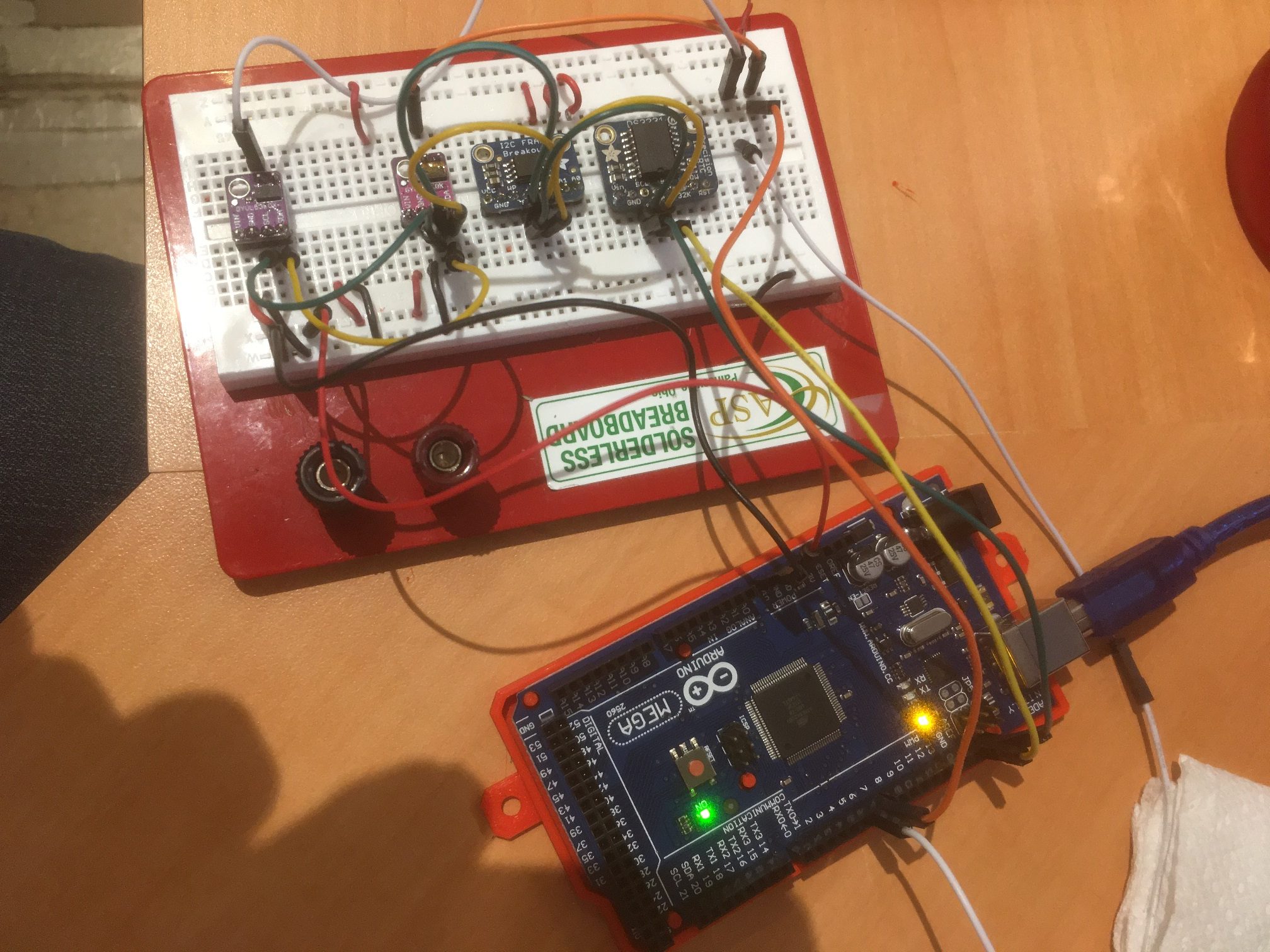

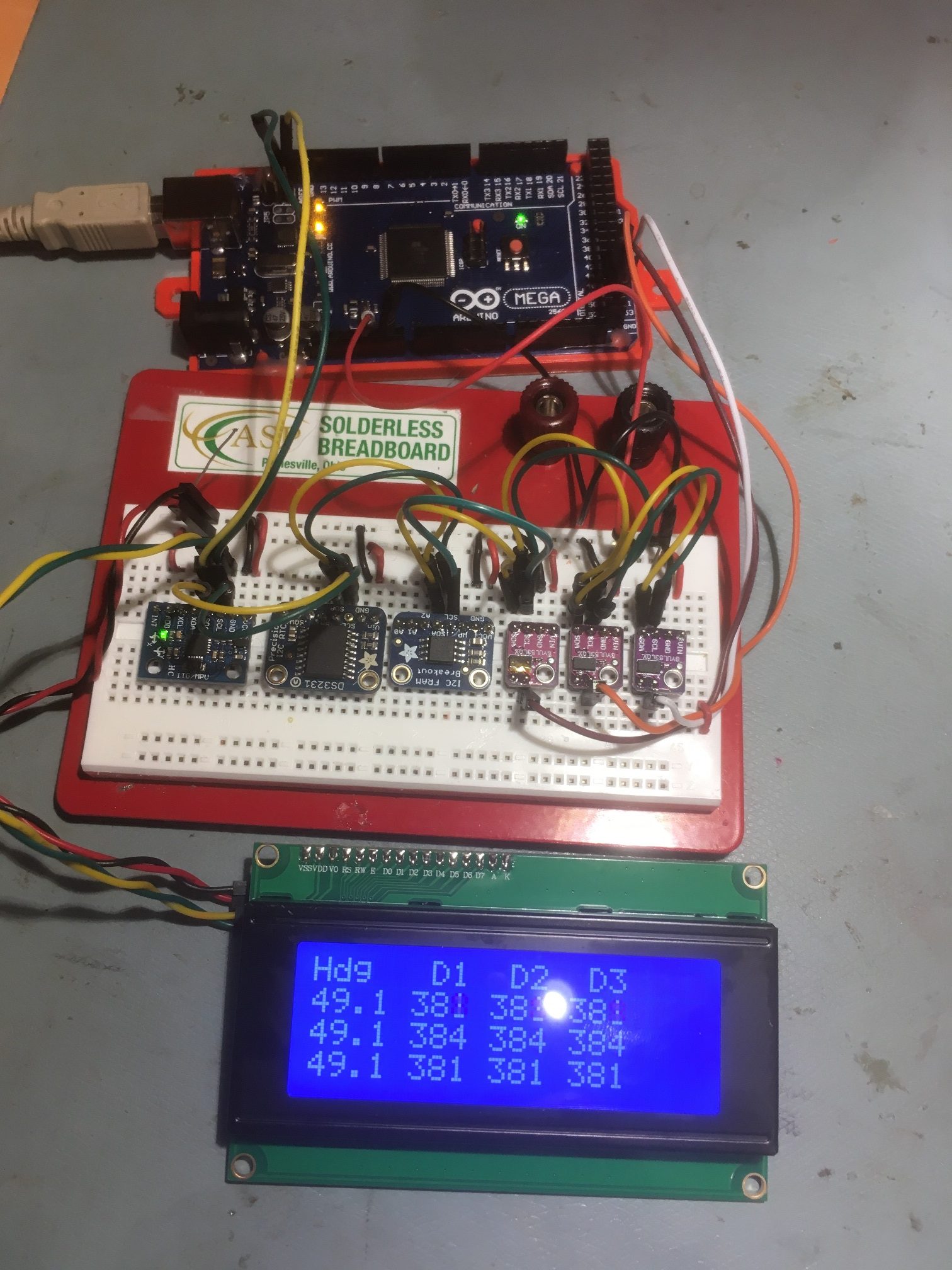

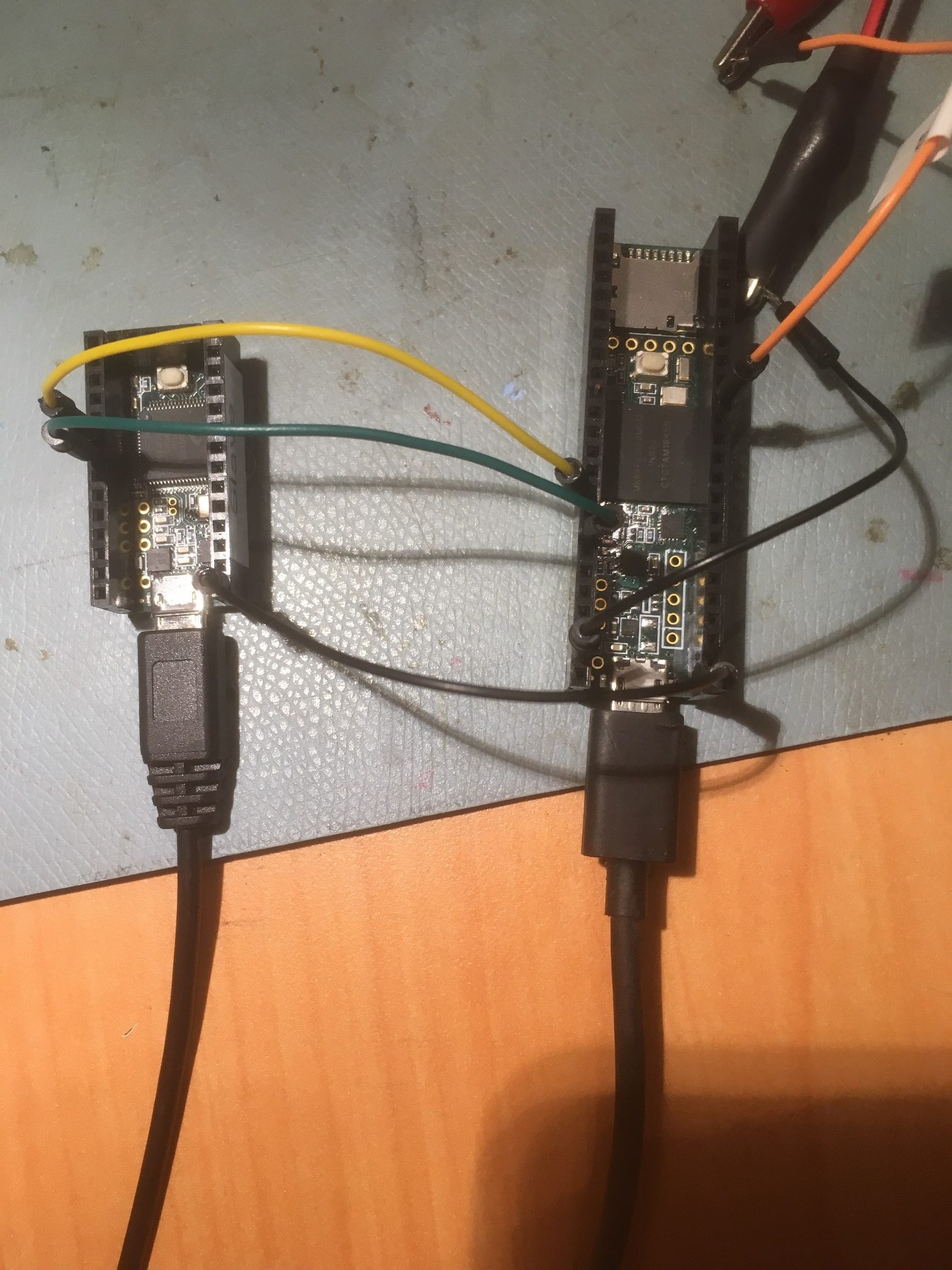

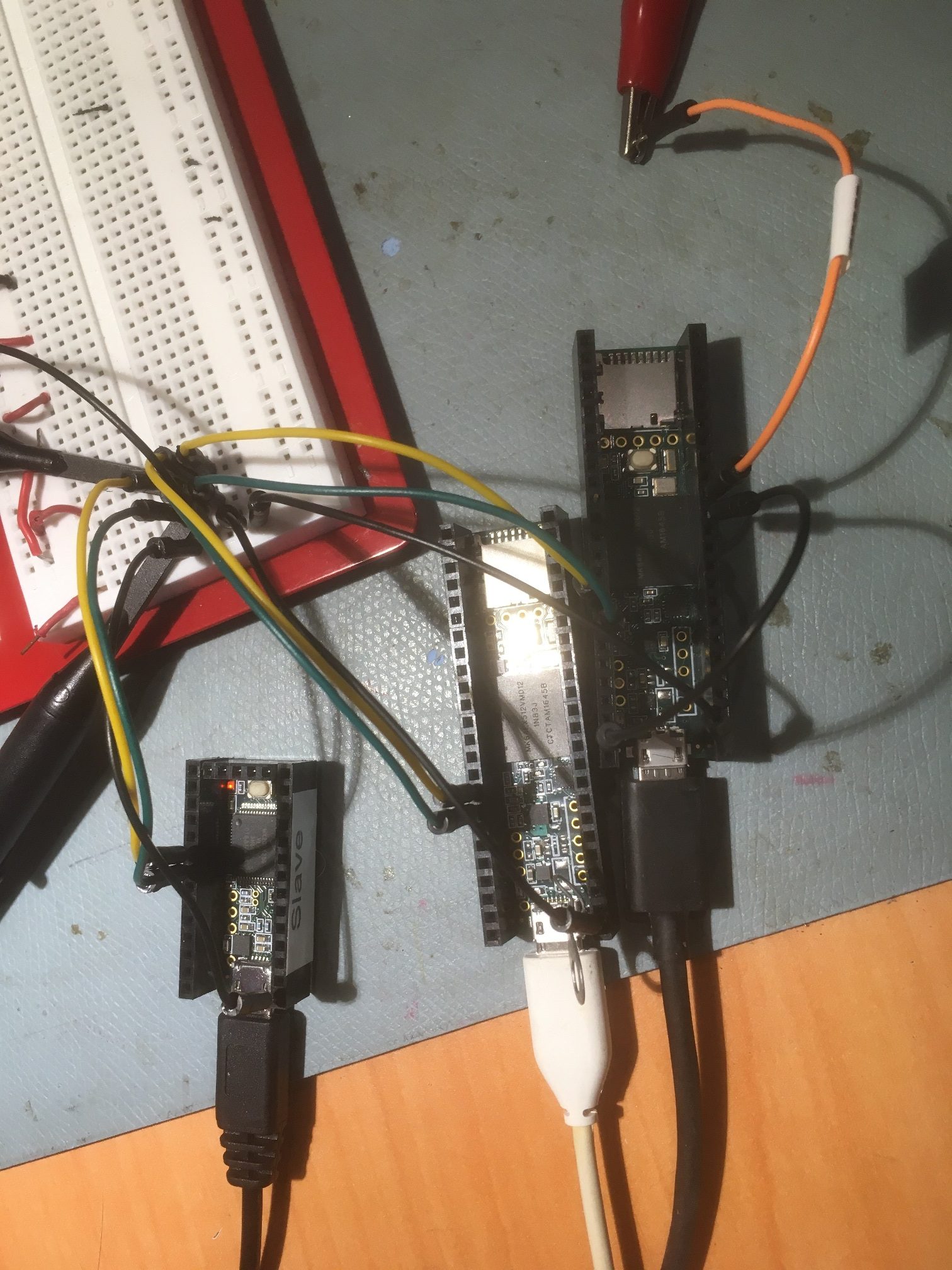

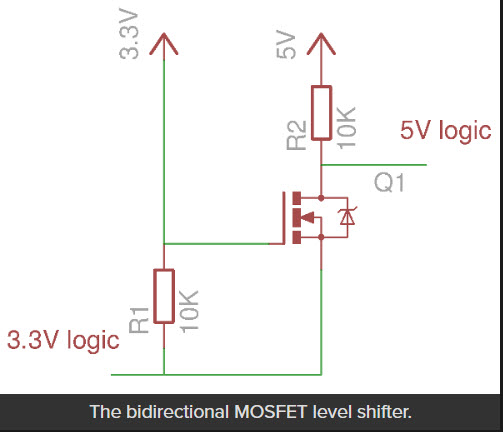

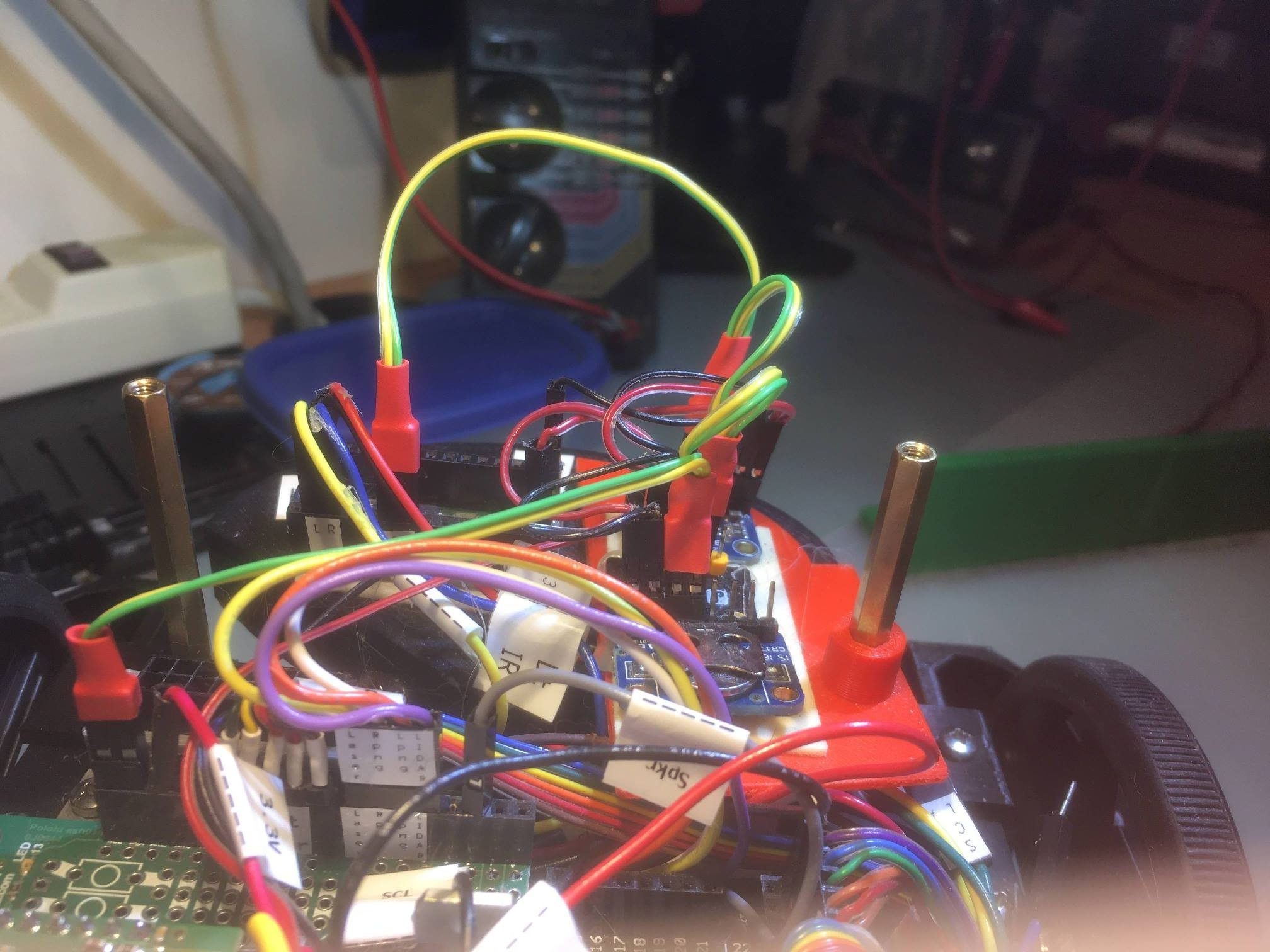

I tried out a slightly modified version of this technique on my small 2-wheel robot. The two-wheeler uses an Arduino Uno instead of a Mega, so I didn’t use a TIMER interrupt. Instead I used the ‘elapsedMillisecond’ library and set up an elapsed time of 100 mSec, and also modified the program to turn indefinitely at the desired turn rate in deg/sec.
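The loops later in this post subtract 100 from the elapsed-time counter instead of zeroing it, which keeps the long-run update period at 100 mSec even when the loop notices the deadline a few mSec late. Here is a minimal off-target sketch of that idea; `ElapsedMsec` and `CountUpdates` are hypothetical stand-ins driven by a simulated clock, not the library's own types.

```cpp
#include <cstdint>

// Hypothetical stand-in for an elapsed-milliseconds counter, driven by a
// caller-supplied clock so the sketch can run without hardware.
struct ElapsedMsec
{
    uint32_t start_msec;

    uint32_t Elapsed(uint32_t now_msec) const { return now_msec - start_msec; }

    // Equivalent to "counter -= period": keeps any overshoot for the next period.
    void Consume(uint32_t period_msec) { start_msec += period_msec; }
};

// Counts how many updates fire when the loop polls the counter every
// poll_step_msec for total_msec of simulated time.
int CountUpdates(uint32_t total_msec, uint32_t poll_step_msec, uint32_t period_msec)
{
    ElapsedMsec timer{0};
    int updates = 0;

    for (uint32_t now = 0; now <= total_msec; now += poll_step_msec)
    {
        if (timer.Elapsed(now) >= period_msec)
        {
            timer.Consume(period_msec);
            ++updates;
        }
    }
    return updates;
}
```

Polling every 7 mSec for 10 seconds with a 100 mSec period still yields 99 updates in this simulation, because the few mSec of lateness on each update are carried forward rather than accumulated.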

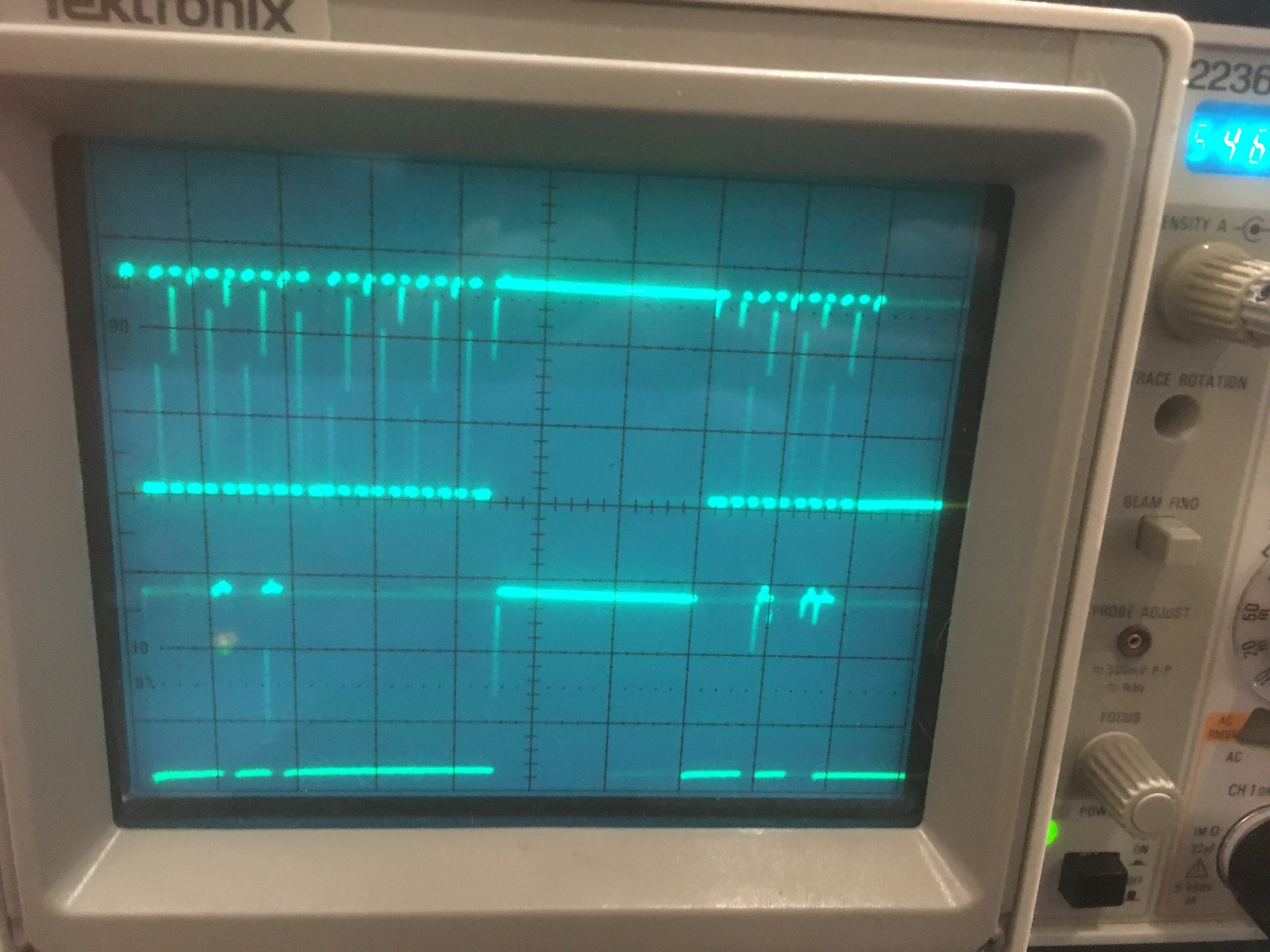

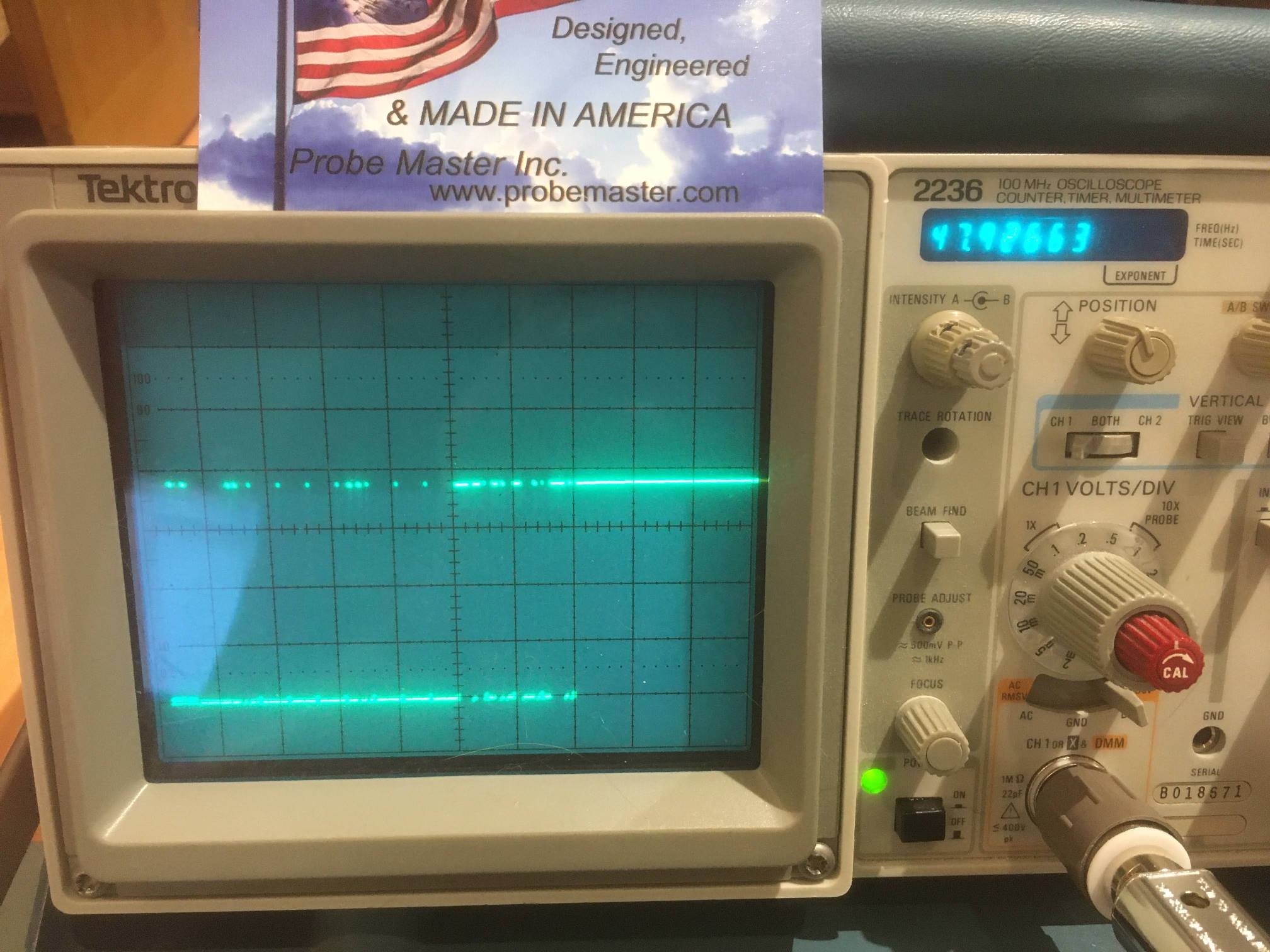

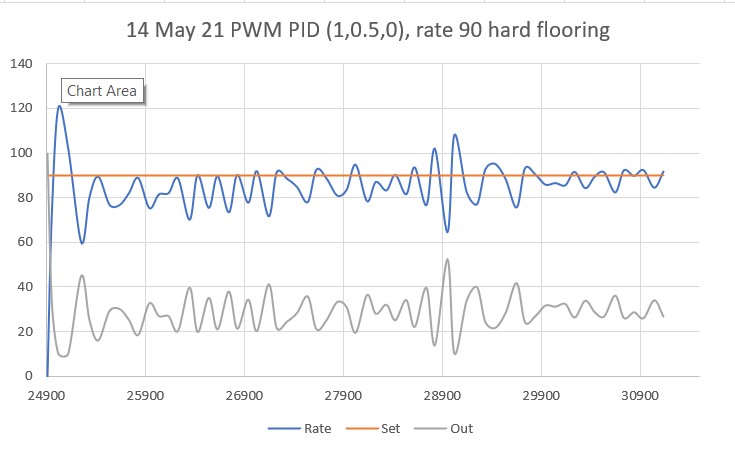

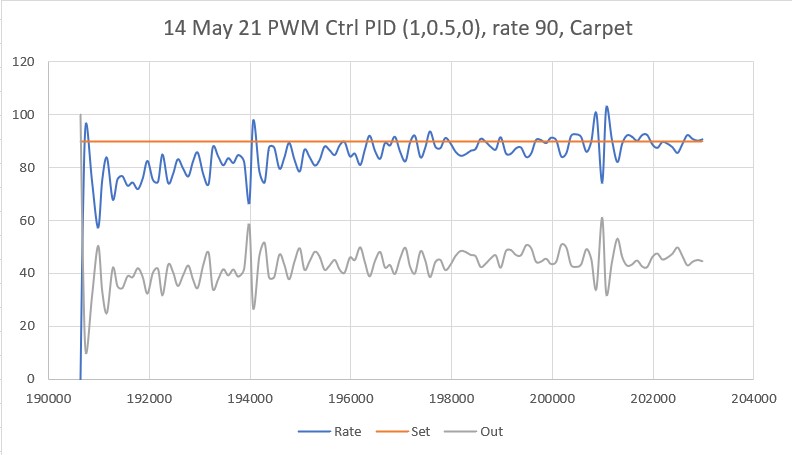

I experimented with two different methods for controlling the turn rate – a ‘PWM’ method where the wheel motors are pulsed at full speed for a variable pulse width, and a ‘direct’ method where the wheel motor speeds are varied directly to achieve the desired turn rate. I thought the PWM method might work better on a heavier robot for smaller angle turns as there is quite a bit of inertia to overcome, but the ‘direct’ method might be more accurate.

Here’s the code for the ‘direct’ method, where the wheel speeds are varied with SetLeftMotorDirAndSpeed() and SetRightMotorDirAndSpeed():

```cpp
void SpinTurnForever(bool b_ccw, float kp, float ki, float kd, float degPersec)
{
    float tgt_deg;
    float timeout_sec;
    bool bDoneTurning = false;
    bool bTimedOut = false;
    bool bResult = true; //04/21/20 added so will be only one exit point
    double prev_hdg = 0;
    unsigned long prev_uSec; //added 09/02/20

    //DEBUG!!
    mySerial.printf("SpinTurnForever TurnRatePID parameters Kp/Ki/Kd/Setpoint = %2.2f/%2.2f/%2.2f/%2.2f\n",
        kp, ki, kd, degPersec);
    //DEBUG!!

    //Step3: 11/14/20 activate turn rate PID
    TurnRateOutput = 0;
    TurnRatePID.SetMode(AUTOMATIC);
    TurnRatePID.SetTunings(kp, ki, kd);
    TurnRateSetPoint = degPersec;

    //set the limits
    //TurnRatePID.SetOutputLimits(10, 100);
    TurnRatePID.SetOutputLimits(MOTOR_SPEED_LOW, MOTOR_SPEED_MAX);
    TurnRatePID.SetSampleTime(0); //Compute() runs every time it is called

    UpdateIMUHdgValDeg();
    prev_hdg = IMUHdgValDeg; //11/06/20 now IMUHdgValDeg updated in ISR

    mySerial.printf("Msec\tHdg\tPrevHdg\tRate\tSet\tOut\n");

    MsecSinceLastTurnRateUpdate = 0;
    while (true)
    {
        CheckForUserInput();

        //5/12/21 now time interval is constant at ~100mSec
        if (MsecSinceLastTurnRateUpdate >= 100)
        {
            MsecSinceLastTurnRateUpdate -= 100;
            UpdateIMUHdgValDeg();
            float deltaDeg = IMUHdgValDeg - prev_hdg;
            prev_hdg = IMUHdgValDeg;

            //11/14/20 need to handle -179 to +179 transition
            deltaDeg = (deltaDeg > 180) ? deltaDeg - 360 : deltaDeg;
            deltaDeg = (deltaDeg < -180) ? deltaDeg + 360 : deltaDeg;
            TurnRateVal = 10 * abs(deltaDeg); //now time interval is constant 1/10 sec

            TurnRatePID.Compute(); //this is where everything is computed
            SetLeftMotorDirAndSpeed(!b_ccw, TurnRateOutput);
            SetRightMotorDirAndSpeed(b_ccw, TurnRateOutput);

            //DEBUG!!
            mySerial.printf("%lu\t%4.2f\t%4.2f\t%2.1f\t%2.1f\t%2.1f\n",
                millis(), IMUHdgValDeg, prev_hdg, TurnRateVal, degPersec, TurnRateOutput);
            //DEBUG

            prev_hdg = IMUHdgValDeg;
        }
    }
}
```

Here’s the code for the PWM method; the only difference is that it is the duration of the pulse that is varied, not the wheel speed.

```cpp
void PulseSpinTurnForever(bool b_ccw, float kp, float ki, float kd, float degPersec)
{
    float tgt_deg;
    float timeout_sec;
    bool bDoneTurning = false;
    bool bTimedOut = false;
    bool bResult = true; //04/21/20 added so will be only one exit point
    double prev_hdg = 0;
    unsigned long prev_uSec; //added 09/02/20

    //DEBUG!!
    mySerial.printf("PulseSpinTurnForever TurnRatePID parameters Kp/Ki/Kd/Setpoint = %2.2f/%2.2f/%2.2f/%2.2f\n",
        kp, ki, kd, degPersec);
    //DEBUG!!

    //Step3: 11/14/20 activate turn rate PID
    TurnRateOutput = 0;
    TurnRatePID.SetMode(AUTOMATIC);
    TurnRatePID.SetTunings(kp, ki, kd);
    TurnRateSetPoint = degPersec;

    //set the limits
    TurnRatePID.SetOutputLimits(10, 100);
    TurnRatePID.SetSampleTime(0); //Compute() runs every time it is called

    UpdateIMUHdgValDeg();
    prev_hdg = IMUHdgValDeg; //11/06/20 now IMUHdgValDeg updated in ISR

    mySerial.printf("Msec\tHdg\tPrevHdg\tRate\tSet\tOut\n");

    uint32_t prevUsec = micros(); //initialize
    uint32_t nowUsec = prevUsec;

    MsecSinceLastTurnRateUpdate = 0;
    while (true)
    {
        CheckForUserInput();

        //5/12/21 now time interval is constant at ~100mSec
        if (MsecSinceLastTurnRateUpdate >= 100)
        {
            MsecSinceLastTurnRateUpdate -= 100;
            UpdateIMUHdgValDeg();
            float deltaDeg = IMUHdgValDeg - prev_hdg;
            prev_hdg = IMUHdgValDeg;

            //11/14/20 need to handle -179 to +179 transition
            deltaDeg = (deltaDeg > 180) ? deltaDeg - 360 : deltaDeg;
            deltaDeg = (deltaDeg < -180) ? deltaDeg + 360 : deltaDeg;
            TurnRateVal = 10 * abs(deltaDeg); //now time interval is constant 1/10 sec

            TurnRatePID.Compute(); //this is where everything is computed

            //05/03/21 - back to the PWM technique
            //Step4: Pulse the motors to full speed for the duration specified by the PID output
            digitalWrite(CHG_CONNECT_LED_PIN, HIGH);
            SetLeftMotorDirAndSpeed(!b_ccw, MOTOR_SPEED_FULL);
            SetRightMotorDirAndSpeed(b_ccw, MOTOR_SPEED_FULL);
            delay(TurnRateOutput);
            StopBothMotors();
            digitalWrite(CHG_CONNECT_LED_PIN, LOW);

            //DEBUG!!
            mySerial.printf("%lu\t%4.2f\t%4.2f\t%2.1f\t%2.1f\t%2.1f\n",
                millis(), IMUHdgValDeg, prev_hdg, TurnRateVal, degPersec, TurnRateOutput);
            //DEBUG

            StopBothMotors();
            prev_hdg = IMUHdgValDeg;
        }
    }
}
```
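In the PWM version the PID output is passed straight to delay(), and with output limits of 10 to 100 the longest pulse takes up the whole 100 mSec update period. If one wanted to guarantee some idle time in every period, the pulse width could be clamped first. This is a sketch of that variation only, not what the code above does; `PulseWidthMsec` and its margin parameter are hypothetical.

```cpp
// Hypothetical helper: converts a PID output into a pulse width in mSec,
// clamped so the pulse always leaves margin_msec free in each update period.
unsigned long PulseWidthMsec(double pid_output,
                             unsigned long period_msec,
                             unsigned long margin_msec)
{
    if (pid_output <= 0.0)
    {
        return 0; // no pulse for zero or negative outputs
    }

    unsigned long max_pulse =
        (period_msec > margin_msec) ? period_msec - margin_msec : 0;
    unsigned long pulse = (unsigned long)pid_output; // truncates the fraction

    return (pulse > max_pulse) ? max_pulse : pulse;
}
```

With a 100 mSec period and a 10 mSec margin, an output of 100 becomes a 90 mSec pulse, while an output of 42.7 becomes 42 mSec.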

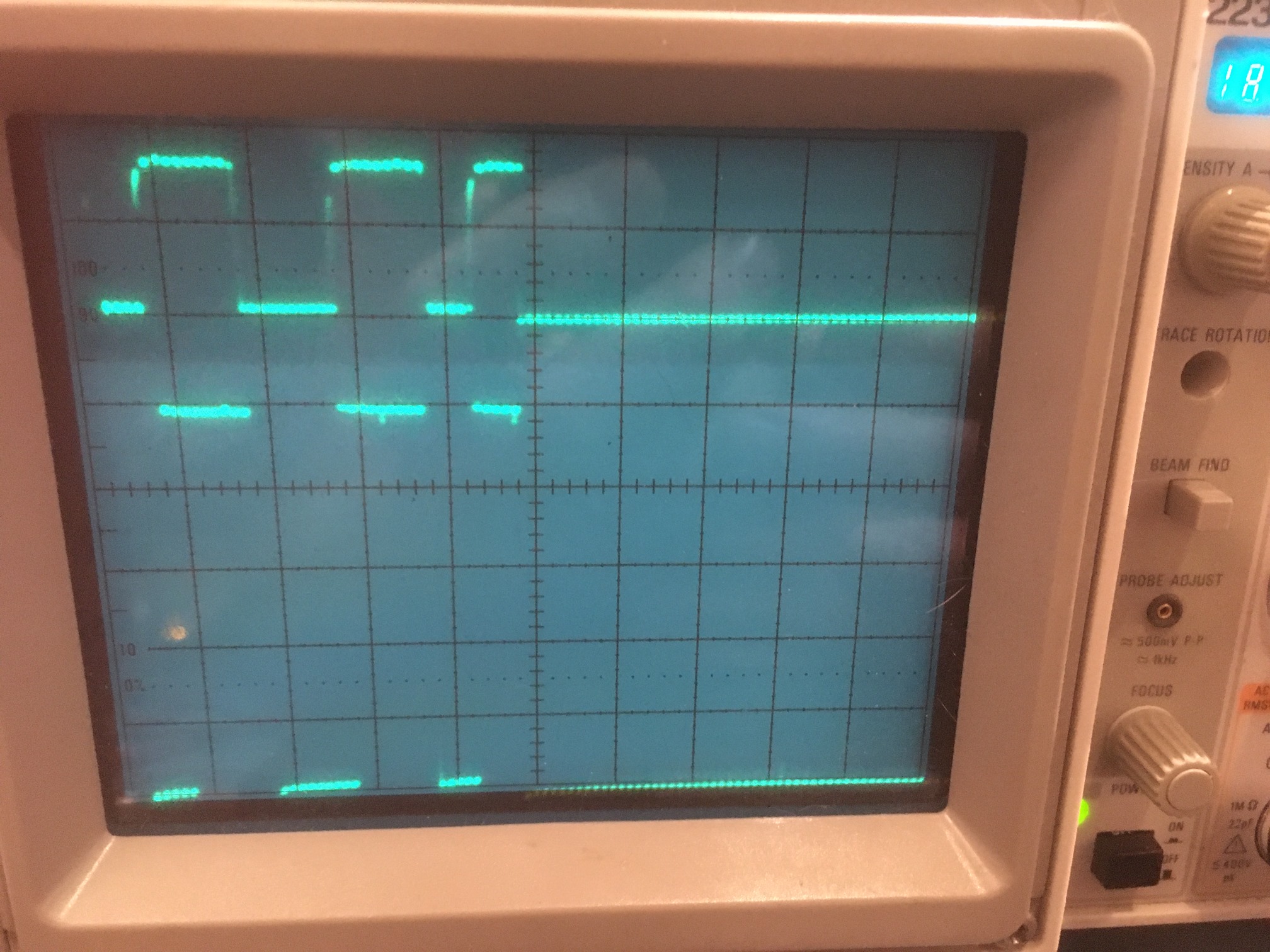

Here’s a short video showing the two-wheel robot doing a spin turn using the PWM technique with a desired turn rate of 90 deg/sec, using PID = (1,0.5,0).

Here’s another run, this time on carpet:

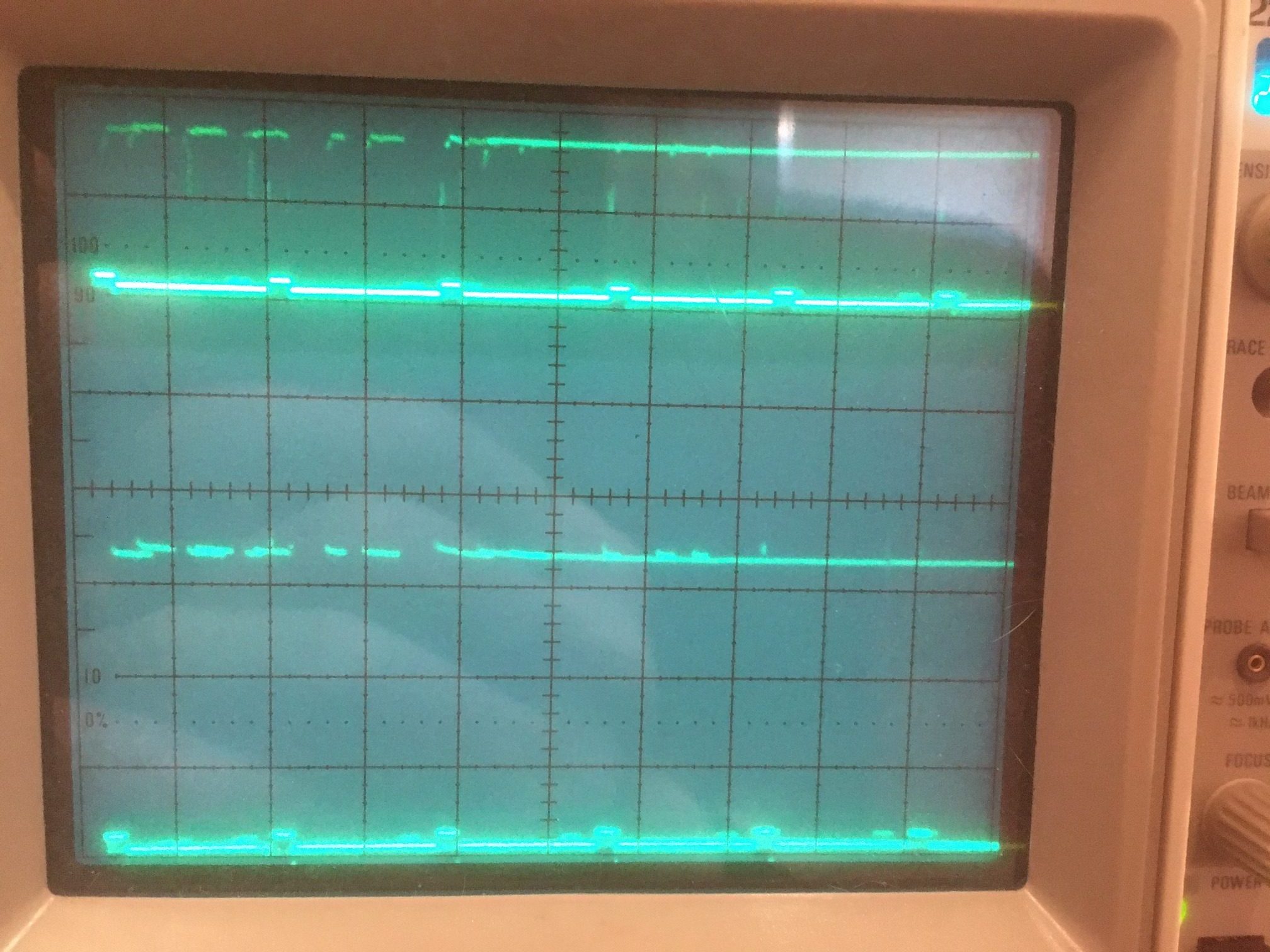

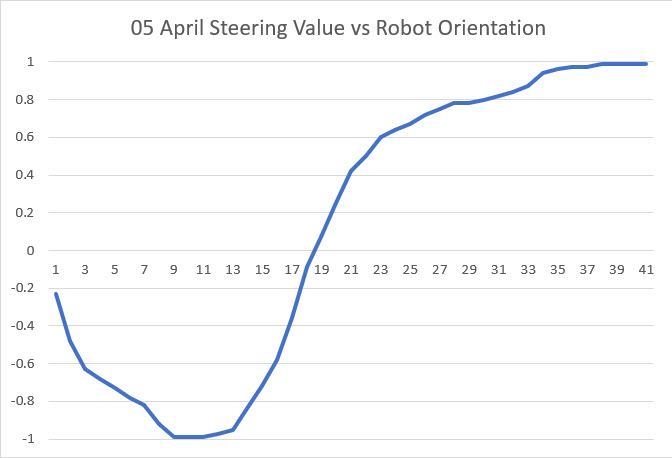

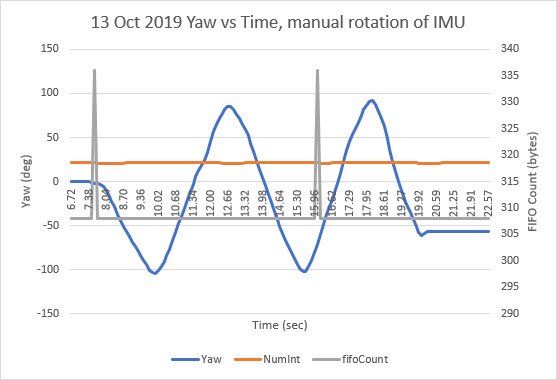

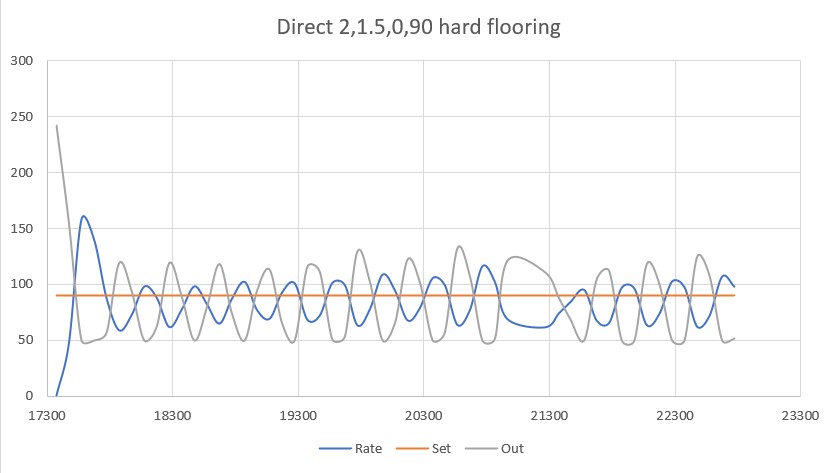

Here’s some data from the ‘direct’ method, on hard flooring

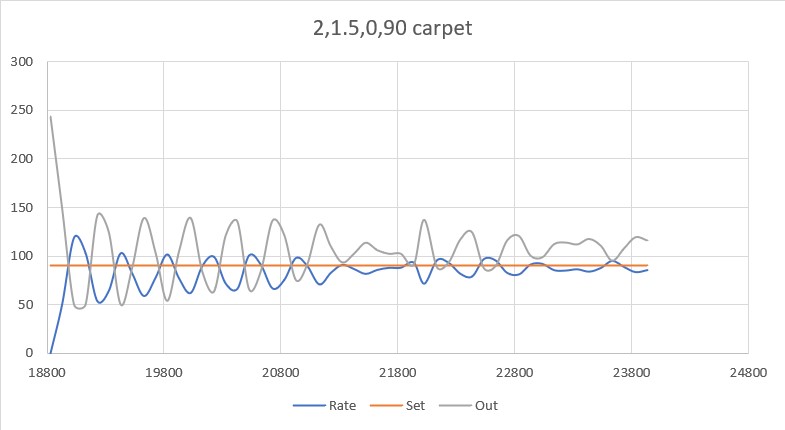

And on carpet

So, it appears that both the PWM and ‘direct’ methods are effective in controlling the turn rate, and I don’t really see any huge difference between them. I guess the PWM method might be a little more effective with the 4-wheel robot, because its wheels have to slide sideways while turning.

Stay Tuned!

Frank